With Ansible, you can configure (aka provision) remote machines using simple text files. It uses so-called Playbooks to execute a set of commands on the remote machines. So instead of having a dedicated person execute a set of commands on each machine by hand, the configuration can be collaboratively worked on, reviewed, versioned and reused. You do not need to maintain separate documentation for setting up your environment, as the Playbook is the documentation.

There are other configuration management tools besides Ansible, such as Chef or Puppet. Unlike these tools in their usual client/server setup, Ansible does not require an agent running on the machines it configures. It only needs SSH access to the remote machines. In addition, Python has to be installed on the remote machines.

Prerequisites

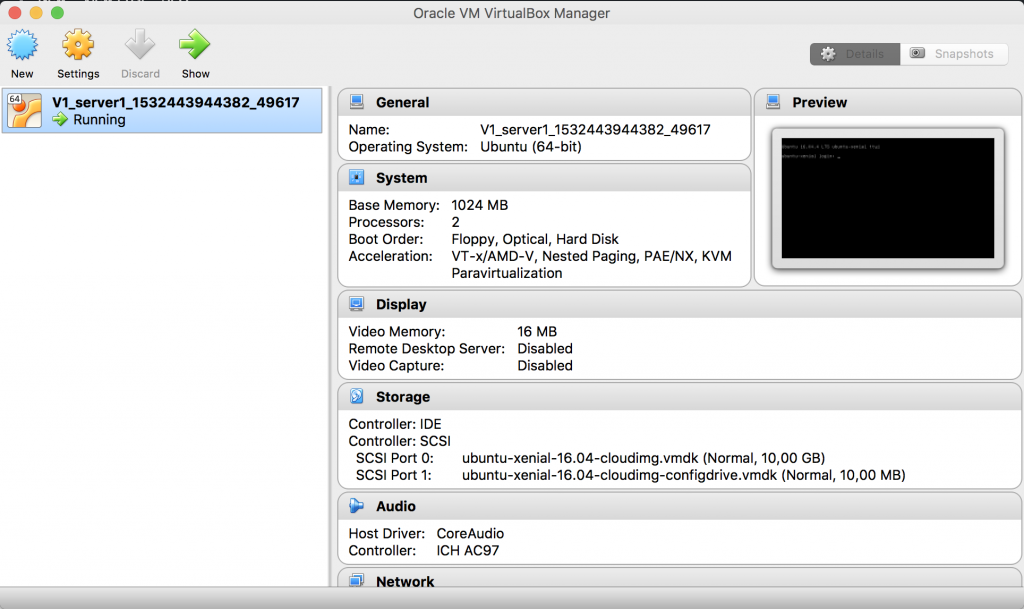

In this tutorial, we will configure one of the two machines we set up in the previous tutorial. There, we copied the public key of our local machine to the authorized_keys file of the remote machines. Hence, our local machine is allowed to access the remote machines using ssh, in my case:

```shell
ssh vagrant@127.0.0.1 -p2200
ssh vagrant@127.0.0.1 -p2222
```

(or any other user-name and IP-address and port to establish an ssh-connection to your machines)

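Next, install Ansible on your machine (please consult the Installation Guide). I installed Ansible globally with pip; on macOS, Python itself can be installed with Homebrew:

```shell
# macOS only: install Python via Homebrew
brew install python
# install pip (get-pip.py has to be downloaded first), then Ansible
sudo python get-pip.py
sudo pip install ansible
```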

Subsequently, introduce the remote machines to Ansible by adding them to /etc/ansible/hosts (the Inventory, in Ansible terminology). If you can't find your inventory, please consult the Ansible documentation.

```ini
web1 ansible_host=127.0.0.1 ansible_user=vagrant ansible_port=2200
web2 ansible_host=127.0.0.1 ansible_user=vagrant ansible_port=2222
```

You can also use the YAML-notation:

```yaml
all:
  hosts:
    web1:
      ansible_host: 127.0.0.1
      ansible_port: 2200
      ansible_user: vagrant
    web2:
      ansible_host: 127.0.0.1
      ansible_port: 2222
      ansible_user: vagrant
```

I had some problems when tabs and spaces were mixed for indentation in the configuration. YAML does not allow tabs for indentation, so use spaces only. Please refer to the Ansible documentation for more details on the Inventory.
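If several machines share the same connection settings, the YAML Inventory can also group them and define the shared values once. A minimal sketch, assuming a hypothetical group name jenkins_hosts that is not part of the original setup; only the port differs per host:

```yaml
all:
  children:
    jenkins_hosts:            # hypothetical group name
      vars:                   # variables shared by all hosts in this group
        ansible_host: 127.0.0.1
        ansible_user: vagrant
      hosts:
        web1:
          ansible_port: 2200
        web2:
          ansible_port: 2222
```

A Playbook could then target hosts: jenkins_hosts instead of listing web1 and web2 individually.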

To see if everything works correctly, let us ping the remote machines using Ansible:

```shell
ansible all -m ping
# or, to connect as a specific user:
ansible all -m ping -u [user-name]
```

If everything runs correctly, you should see something like:

```text
web2 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
web1 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
```

You can now execute commands on the remote machines, e.g.

```shell
ansible all -a "/bin/echo hello"
```

As a result, you should see something like:

```text
web2 | SUCCESS | rc=0 >>
hello
web1 | SUCCESS | rc=0 >>
hello
```
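The two ad-hoc commands above can also be written as a tiny Playbook (Playbooks are explained in the next section). A minimal sketch; the Play name, the task names and the variable echo_result are illustrative:

```yaml
- name: Check connectivity
  hosts: all
  gather_facts: false          # not needed for these simple checks
  tasks:
    - name: Ping the hosts
      ping:

    - name: Echo hello
      command: /bin/echo hello
      register: echo_result    # store the command result in a variable
      changed_when: false      # echo does not change the machine

    - name: Show the output of echo
      debug:
        var: echo_result.stdout
```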

Playbooks

As mentioned above, Ansible Playbooks are simple text files in YAML syntax. A Playbook describes how a remote machine should be configured (provisioned).

- A Playbook consists of one or more Plays.

- Each Play contains a set of Tasks that are executed on the remote machine.

- Each Task calls an Ansible Module (command). There are simple modules, e.g. to copy files from one location to another or to install software on the remote machine. I will focus on a simple set of tasks to configure Jenkins on one remote machine and … on the others. For more on modules, please refer to the Ansible documentation.
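The relationship between Playbook, Plays, Tasks and Modules can be sketched as follows. This is an illustrative skeleton, not part of the Jenkins example; the file path and the chosen packages are arbitrary:

```yaml
# A Playbook is a YAML list of Plays.
- name: First Play               # Play 1
  hosts: web1
  tasks:                         # the Tasks of Play 1
    - name: Write a file         # each Task calls one Module, here copy
      copy:
        content: "hello\n"
        dest: /tmp/hello.txt     # illustrative path

- name: Second Play              # Play 2
  hosts: web2
  become: yes                    # installing packages needs root privileges
  tasks:
    - name: Install git          # here the package Module
      package:
        name: git
        state: present
```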

You can easily set up Jenkins using predefined Ansible Roles; I will come to this later. Here, I want to explain the basic structure of a Playbook (jenkins.yml). I will go through the different parts of the example in the next sections.

```yaml
- name: Install Jenkins
  hosts: web1, web2
  gather_facts: true
  become: yes
  become_method: sudo
  vars:
    jenkins_port: 8080

  tasks:
    - name: Populate Service facts
      service_facts:

    # Uncomment to inspect the gathered service facts:
    # - debug:
    #     var: ansible_facts.services

    - name: Stop Jenkins Service
      service:
        name: jenkins
        state: stopped
      when: "'jenkins' in ansible_facts.services"

    - name: Clean-Up previous installation
      package:
        name: '{{ item }}'
        state: absent
      loop:
        - jenkins
        - openjdk-8-jdk
        - git
        - wget

    - name: Install dependencies
      package:
        name: '{{ item }}'
        state: latest
      loop:
        - openjdk-8-jdk
        - git
        - wget

    # Import the signing key before adding the repository,
    # as apt_repository refreshes the package cache by default
    - name: Import Jenkins CI key
      apt_key:
        url: https://pkg.jenkins.io/debian/jenkins-ci.org.key

    - name: Download jenkins repo
      apt_repository:
        repo: deb https://pkg.jenkins.io/debian-stable binary/
        state: present

    - name: Update apt packages
      apt:
        upgrade: yes
        update_cache: yes

    - name: Install Jenkins
      apt:
        name: jenkins
        state: present
        allow_unauthenticated: yes

    - name: Allow port {{jenkins_port}}
      shell: iptables -I INPUT -p tcp --dport {{jenkins_port}} -m state --state NEW,ESTABLISHED -j ACCEPT

    - name: Start the server
      service:
        name: jenkins
        state: started

    - name: Waiting for Service to start
      wait_for:
        port: "{{ jenkins_port }}"
```

Basic Configuration

```yaml
- name: Install Jenkins
  hosts: web1, web2
```

Each Play in Ansible starts with a dash (-), because a Playbook is a YAML list of Plays. The dash is usually followed by the optional name parameter. Tasks look the same, except that the name is followed by the Ansible Module (command) to call. The name is shown in the log while the Play or Task is executed, so I recommend it for debugging, but also for documentation.

The hosts parameter defines on which hosts from the Inventory the Playbook should be executed (in this case web1 and web2). You can also set the remote user that runs the modules in the Playbook by specifying the remote_user parameter (e.g. remote_user: root).

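You can execute the whole Playbook or individual tasks as a different user (privilege escalation). become: yes turns privilege escalation on, become_method selects how it is done (e.g. sudo), and become_user selects the target user (root by default). In this example, we will install Jenkins as superuser: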

```yaml
become: yes
become_method: sudo
```
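become can also be set on individual tasks instead of the whole Play. A minimal sketch (the Play and the variable names plain_user and root_user are illustrative) that runs whoami once as the connecting user and once via sudo; with the Inventory above, you would expect vagrant and root:

```yaml
- name: Privilege escalation for a single task
  hosts: web1
  tasks:
    - name: Runs as the connecting user (ansible_user)
      command: whoami
      register: plain_user
      changed_when: false        # whoami does not change the machine

    - name: Runs as root via sudo, only for this task
      command: whoami
      become: yes
      become_method: sudo
      register: root_user
      changed_when: false

    - name: Show both users
      debug:
        msg: "{{ plain_user.stdout }} / {{ root_user.stdout }}"
```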

The next part of the Playbook consists of the tasks that will be executed on the remote machines.

Installation of prerequisite packages

To run Jenkins with the desired configuration, we need Java 8 and git on the machine, as well as wget to load the Jenkins packages. We will use the Ansible module Package as a generic package manager. In addition, I will introduce the concept of standard loops.

```yaml
- name: Install dependencies
  package:
    name: '{{ item }}'
    state: latest
  loop:
    - openjdk-8-jdk
    - git
    - wget
```

The package module takes two mandatory parameters:

- name defines the name of the package, or its name and version

- state defines whether the package should be installed (present), upgraded to the newest version (latest) or removed (absent); the module passes this on to the underlying package manager (here apt)

The parameter name gets the value '{{ item }}'. The double curly braces are Jinja2 template syntax: Ansible replaces {{ item }} with the current element of the list under loop, so the task is executed once for each package listed there. For more on loops, please consult the documentation.
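Depending on the underlying package manager, the package module also accepts a list for name; with apt, this installs all packages in a single call instead of one call per loop item. A sketch of the same task written that way:

```yaml
- name: Install dependencies (single call instead of a loop)
  package:
    name:                # a list instead of '{{ item }}'
      - openjdk-8-jdk
      - git
      - wget
    state: latest
```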

Installation of Jenkins

The generic package module we used above won't offer enough flexibility. Hence, as we will be installing Jenkins on a Debian environment, we will use the module for the Debian package manager APT (Advanced Package Tool).

The package information for Jenkins can be found in the corresponding Debian Repository for Jenkins.

```yaml
- name: Import Jenkins CI key
  apt_key:
    url: https://pkg.jenkins.io/debian/jenkins-ci.org.key
```

To be able to use the repository, apt needs its signing key, so we add the key first, using the Ansible Module apt_key. Otherwise, the next step can fail, because apt_repository refreshes the package cache by default.

```yaml
- name: Download jenkins repo
  apt_repository:
    repo: deb https://pkg.jenkins.io/debian-stable binary/
    state: present
```

The Ansible Module apt_repository adds an APT repository in Debian. We add the Debian repository for the stable (LTS) releases of Jenkins.

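```yaml
- name: Update apt packages
  apt:
    upgrade: yes
    update_cache: yes
```

Before you install Jenkins, you should update the package lists (update_cache: yes), so that apt knows about the packages in the newly added repository. upgrade: yes additionally upgrades all packages already installed on the machine.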
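If you only want to refresh the package lists without upgrading every installed package, you can drop upgrade. A sketch; the value of cache_valid_time (in seconds) is an arbitrary choice:

```yaml
- name: Update apt package lists only (no upgrade)
  apt:
    update_cache: yes
    cache_valid_time: 3600   # skip the update if the lists are less than an hour old
```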

```yaml
- name: Install Jenkins
  apt:
    name: jenkins
    state: present
```

Now we can finally download and install the Debian Jenkins package using the Ansible Module apt.

Configuring the Network

By default, the Jenkins web interface listens on port 8080. This port has to be accessible from outside the Virtual Machine. Hence, we add a new rule to our iptables by executing a simple shell command.

```yaml
- name: Allow port 8080
  shell: iptables -I INPUT -p tcp --dport 8080 -m state --state NEW,ESTABLISHED -j ACCEPT
```
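The shell command above inserts a new rule every time the Playbook runs, so repeated runs add duplicate rules. Ansible's iptables module first checks whether the rule already exists. A sketch of an equivalent task; note that the module matches the connection state via conntrack (ctstate) instead of the older state match:

```yaml
- name: Allow port 8080 (idempotent variant)
  iptables:
    chain: INPUT
    protocol: tcp
    destination_port: "8080"
    ctstate:
      - NEW
      - ESTABLISHED
    jump: ACCEPT
    action: insert           # insert at the top of the chain, like iptables -I
```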

Starting the Service

Finally, we need to start the Jenkins service. Here we can simply use the generic Ansible Module service.

```yaml
- name: Start the Service
  service:
    name: jenkins
    state: started
```
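If Jenkins should also come back after a reboot of the machine, the service module can enable it at boot time. A small variant of the task above:

```yaml
- name: Start the Service and enable it at boot
  service:
    name: jenkins
    state: started
    enabled: yes     # start Jenkins automatically when the machine boots
```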

Waiting for the Service to run

The Ansible Module wait_for waits for a condition before continuing with the Playbook. In this simple case, we wait for the port to become available.

```yaml
- name: Waiting for Jenkins to become available
  wait_for:
    port: 8080
```
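Jenkins can take a while to start on a small virtual machine. A sketch that reuses the jenkins_port variable from the full Playbook and tunes the waiting; the concrete numbers are arbitrary choices:

```yaml
- name: Waiting for Jenkins to become available
  wait_for:
    port: "{{ jenkins_port }}"   # variable defined under vars in jenkins.yml
    delay: 5                     # seconds to wait before the first check
    timeout: 600                 # give up after 10 minutes (default: 300 seconds)
```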

Idempotency

Playbooks should be idempotent, i.e. running a Playbook once or many times should leave the machine in the same state. Most Ansible modules are idempotent on their own, but Ansible cannot verify that a Playbook as a whole is idempotent (e.g. when it runs shell commands). Hence, you are responsible, e.g. for checking whether a task has already been executed. In this example, I first clean up the packages I want to install.

I make use of ansible_facts.services, which contains state information about the services on the remote machine. However, I have to populate this variable first by calling the Ansible Module service_facts.

```yaml
- name: Populate Service facts
  service_facts:
```

To see the contents of ansible_facts.services, use

```yaml
- debug:
    var: ansible_facts.services
```

Conditionals

I will briefly introduce conditionals in Ansible, using the when statement.

```yaml
- name: Stop Jenkins Service
  service:
    name: jenkins
    state: stopped
  when: "'jenkins' in ansible_facts.services"
```

Basically, I only execute a command (Ansible module) if the when-condition is true.

In this case, I only stop the service called jenkins when a service named jenkins appears in the ansible_facts.services variable, i.e. when the service is installed (which does not necessarily mean that it is running). Of course, there are different ways to do this; I found this the most elegant.
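If you want to stop the service only when it is actually running, you can also check its state. A sketch, assuming a systemd-based machine, where service_facts reports the unit as jenkins.service (other init systems may report it as plain jenkins); the Jinja2 and only reads the state if the key exists:

```yaml
- name: Stop Jenkins Service (only if it is currently running)
  service:
    name: jenkins
    state: stopped
  # assumption: the service is listed as 'jenkins.service' (systemd)
  when: >-
    'jenkins.service' in ansible_facts.services and
    ansible_facts.services['jenkins.service'].state == 'running'
```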

Removing previously installed packages

As part of the clean-up, I also uninstall previously installed packages, as I will install them cleanly again below. Use this with caution: it does not necessarily work, as the Linux distribution might have installed some of the packages using different package managers.

```yaml
- name: Clean-Up previous installation
  package:
    name: '{{ item }}'
    state: absent
  loop:
    - jenkins
    - openjdk-8-jdk
    - git
    - wget
```

Remarks about Idempotency

The example above can lead to problems if you use other Ansible Playbooks (or other configuration management tools) on the same machine, or configure the machine manually. Hence, I recommend using only a single source of truth to configure your remote machines.

Variables

As mentioned above, you can make use of conditions in your Playbook. These conditions are often based on the values of variables in Ansible. The following command lists the system variables (facts) you can use, such as the Linux distribution and version, the network configuration, public keys etc.:

```shell
ansible [server-name] -m setup
# e.g.
ansible web1 -m setup
```
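Facts gathered this way can be used directly in conditions. A sketch that only installs the Debian package names on Debian-based machines (Ubuntu also reports the os_family Debian):

```yaml
- name: Install dependencies (Debian-based machines only)
  package:
    name: '{{ item }}'
    state: latest
  loop:
    - openjdk-8-jdk
    - git
    - wget
  when: ansible_facts['os_family'] == 'Debian'   # requires gather_facts: true
```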

You can also define your own variables in a Playbook:

```yaml
vars:
  jenkins_port: 8080
```

and access them using the {{ }} notation I mentioned above (for loops):

```yaml
- name: Allow port {{jenkins_port}}
  shell: iptables -I INPUT -p tcp --dport {{jenkins_port}} -m state --state NEW,ESTABLISHED -j ACCEPT
```
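Variables can also hold lists, which fits well with loops. A sketch of a Play fragment, assuming a hypothetical variable jenkins_dependencies that replaces the inline package list:

```yaml
vars:
  jenkins_port: 8080
  jenkins_dependencies:        # hypothetical variable name
    - openjdk-8-jdk
    - git
    - wget

tasks:
  - name: Install dependencies
    package:
      name: '{{ item }}'
      state: latest
    loop: "{{ jenkins_dependencies }}"
```

Variables can also be overridden on the command line with --extra-vars (-e), e.g. ansible-playbook jenkins.yml -e jenkins_port=8081; extra vars take precedence over the vars defined in the Playbook.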

For more on variables, please consult the Ansible documentation.

Running the Playbook

Once you have created the Playbook, you can check its syntax with

```shell
ansible-playbook --syntax-check jenkins.yml
```


To find out which hosts are affected by a Playbook, use

```shell
ansible-playbook jenkins.yml --list-hosts
```

If everything is correct, you can run the Playbook with

```shell
ansible-playbook jenkins.yml
```

For a more detailed output, use --verbose (or -v; more v's, e.g. -vvv, give even more detail):

```shell
ansible-playbook jenkins.yml --verbose
```

To configure several machines in parallel, set the number of forks (parallel processes) with -f, here 10; the default is 5. This is useful to run configurations on many machines simultaneously, without having to wait for commands to finish on one machine before the next one starts.

```shell
ansible-playbook jenkins.yml -f 10
```

You can also limit the execution to a subset of servers (the servers still have to be matched by the hosts parameter of the Playbook):

```shell
ansible-playbook -l web2 jenkins.yml
```

Please consult the Ansible documentation for more information on how to use ansible-playbook.

Execution Order

Each Task has a name, e.g. “Install Jenkins” or “Update apt packages”. The names are shown when you run a Playbook, which is especially useful for debugging.

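By default (the linear strategy), the tasks in a Playbook are executed in the following order; each task runs on all machines in parallel (up to the number of forks) before the next task starts:

- Task 1 on Machine 1

- Task 1 on Machine 2

- …

- Task 2 on Machine 1

- Task 2 on Machine 2

- …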

If a task fails on a remote machine, that machine is excluded from the rest of the Playbook run. This does not affect the other machines.
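The execution order can be changed per Play. A sketch: serial: 1 runs the whole Play on one machine before moving on to the next (useful for rolling updates), while strategy: free (shown as a comment) lets each machine run through its tasks as fast as it can, without waiting for the others:

```yaml
- name: Install Jenkins, one machine at a time
  hosts: web1, web2
  serial: 1            # finish all tasks on web1 before starting on web2
  # strategy: free     # alternative: machines do not wait for each other
  become: yes
  tasks:
    - name: Check connectivity
      ping:
```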

Calling Jenkins

So now you should be able to call Jenkins in your web browser, using the IP address of the remote machine. You will find the address if you open a secure shell to your machine (in my case ssh vagrant@127.0.0.1 -p2200) and execute ifconfig (or ip addr). In my case, I can access Jenkins by calling http://172.28.128.9:8080/ in a web browser. The rest should be straightforward.

You can retrieve the initial admin password for Jenkins by logging into the Jenkins server and running:

```shell
sudo cat /var/lib/jenkins/secrets/initialAdminPassword
```
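The same can be done from the Playbook, without logging in. A sketch using the slurp module, which returns the file content base64-encoded; the variable name initial_admin_password is illustrative:

```yaml
- name: Read the initial admin password
  slurp:
    src: /var/lib/jenkins/secrets/initialAdminPassword
  register: initial_admin_password
  become: yes          # the command above also needs sudo

- name: Show the initial admin password
  debug:
    msg: "{{ initial_admin_password.content | b64decode | trim }}"
```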

On a side note: if you don't want to type in the passphrase of your ssh key with every login, you can store the key in the SSH authentication agent:

```shell
ssh-add ~/.ssh/id_rsa
```

Configuring Jenkins

When you search for Jenkins in the Ansible documentation, you will find some Ansible Modules that can be used to configure Jenkins. The modules use the Jenkins Remote Access API, so you configure Jenkins after it is up and running:

- jenkins_plugin to install or remove Jenkins plugins.

- jenkins_job to manage Jenkins jobs.

- jenkins_script to execute a Groovy script in Jenkins.
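As an illustration, installing a plugin with jenkins_plugin could look like the sketch below. It assumes an admin user named admin created in the Jenkins setup wizard and a hypothetical variable jenkins_admin_password (e.g. stored with Ansible Vault); in newer Ansible versions, the module lives in the community.general collection:

```yaml
- name: Install the Git plugin
  jenkins_plugin:                                # community.general.jenkins_plugin
    name: git
    url: "http://localhost:{{ jenkins_port }}"
    url_username: admin                          # assumption: admin user from the setup wizard
    url_password: "{{ jenkins_admin_password }}" # hypothetical variable
  become: yes                                    # the plugin is written to the Jenkins home directory
```

Plugins usually only become active after Jenkins has been restarted.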

Miscellaneous

Ansible Roles

One strength that will become useful is the use of so-called Ansible Roles. I would describe a Role as a reusable bundle of tasks, variables, handlers and files that can be included in Playbooks. For example, people have already written reusable Ansible Roles to install and configure Jenkins on a host (in a more extensible way than the one I used in this example). I will use Ansible Roles in a blog post to follow.
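To give an idea of the structure, a sketch of a Playbook that uses a hypothetical local role named my_jenkins; the directory layout in the comments follows Ansible's role conventions:

```yaml
# Hypothetical role layout:
#   roles/
#     my_jenkins/
#       tasks/main.yml      # the tasks, e.g. those from jenkins.yml
#       defaults/main.yml   # default variables, e.g. jenkins_port: 8080
#       handlers/main.yml   # handlers, e.g. restarting Jenkins
- name: Install Jenkins using a role
  hosts: web1, web2
  become: yes
  roles:
    - my_jenkins
```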

Handlers

Another interesting concept are Ansible Handlers: tasks that only run when another task notifies them because it changed something, e.g. a service is restarted only if its configuration has changed within an Ansible Playbook. Using handlers is good practice to keep Playbooks idempotent and to avoid unnecessary restarts.
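A sketch of a handler in a Play. It assumes a Jenkins package that reads HTTP_PORT from /etc/default/jenkins (as older Debian packages do); the handler only runs if lineinfile actually changed the file:

```yaml
- name: Configure Jenkins
  hosts: web1, web2
  become: yes
  tasks:
    - name: Set the Jenkins HTTP port
      lineinfile:
        path: /etc/default/jenkins   # assumption: older Debian packages use this file
        regexp: '^HTTP_PORT='
        line: 'HTTP_PORT=8080'
      notify: Restart Jenkins        # triggers the handler only on change

  handlers:
    - name: Restart Jenkins
      service:
        name: jenkins
        state: restarted
```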

Tags

Tags are markers on Plays or Tasks that can be explicitly executed or skipped when running an Ansible Playbook. We can tag the parts of our Playbook that do the clean-up:

```yaml
- name: Populate Service facts
  service_facts:
  tags:
    - cleanup

- name: Stop Jenkins Service
  service:
    name: jenkins
    state: stopped
  when: "'jenkins' in ansible_facts.services"
  tags:
    - cleanup

- name: Clean-Up previous installation
  package:
    name: '{{ item }}'
    state: absent
  loop:
    - jenkins
    - openjdk-8-jdk
    - git
    - wget
  tags:
    - cleanup
```

If I only want to run the tasks tagged with cleanup, I run the Playbook with

```shell
ansible-playbook jenkins.yml --tags cleanup
```

In contrast, if I want to skip the tasks tagged with cleanup, I run the Playbook with

```shell
ansible-playbook jenkins.yml --skip-tags cleanup
```
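Instead of repeating the tag on every task, the clean-up tasks can be grouped in a block; tags set on a block are inherited by all tasks inside it. A sketch of the same three tasks:

```yaml
- name: Clean-up
  tags:
    - cleanup            # inherited by every task in the block
  block:
    - name: Populate Service facts
      service_facts:

    - name: Stop Jenkins Service
      service:
        name: jenkins
        state: stopped
      when: "'jenkins' in ansible_facts.services"

    - name: Clean-Up previous installation
      package:
        name: '{{ item }}'
        state: absent
      loop:
        - jenkins
        - openjdk-8-jdk
        - git
        - wget
```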

Further Remarks

If you have configured systems by executing a set of commands by hand, you might have noticed that it can be tedious work. Especially if you are not familiar with the command shell or Unix in general, you might run into a lot of trial and error that leaves the system in an inconsistent and possibly insecure state.

Of course, editing and testing a configuration in an Ansible Playbook can also be time-consuming and frustrating. However, by using a Playbook, you have a documented way of how you configured your system. An Ansible Playbook can be written in collaboration with others, reviewed by others, and serves as documentation. In addition, Ansible Playbooks are reusable, which means I can use a Playbook to configure several environments.

Hence, I highly recommend Ansible or similar configuration management (provisioning) tools, such as Chef, SaltStack or Puppet.