
Blog


Hackintosh – The Hardware

Why did I intend to build a Hackintosh?

I highly recommend reading all my Hackintosh posts (and consulting other tutorials) before you actually decide to build a Hackintosh. It is a rather tedious task. It might run straight away. However, in my case (and also in the tutorials I found on the Internet), it can be frustrating at times. When I take into account the hours I spent building an experimental Hackintosh, it might have been less expensive to buy an actual Mac from Apple. In addition, with every update Apple releases, you have to put in some extra work.

I use my 13″ MacBook Pro from 2016 (the first one with the Touch Bar) for software development. It is equipped with 16 GB of RAM and a dual-core i5. It has decent performance but is still a bit on the slow side, and with the three VMs I'm running, I had some serious crashes. Additionally, I mostly use it in my home office, so I don't need the portability of a notebook.

So what are the alternatives? An Apple iMac or iMac Pro? Both come with a built-in screen, and I already have an external monitor. An Apple Mac Pro? It is far too expensive. An Apple Mac mini? Too expensive and not up to date.

So recently my attention was drawn to some videos about a Hackintosh. I did some more research and to me, it seemed that these days it’s fairly easy to build one from PC hardware, especially if you stick with the hardware used in Apple computers.

Which Hardware did I choose?

I found compatible hardware on tonymacx86's page and bought the following parts, as I wanted a small but powerful computer. I know that I could have used other hardware, e.g. an AMD Ryzen with more parallel threads, which might be more suitable for software development. However, my goal was a Hackintosh with as little trouble as possible in the future, so I stuck with the hardware Apple supports.

- CPU: Core i7-9700K
- CPU cooler: Noctua NH-L9i
- RAM: Crucial Ballistix Sport LT 32 GB
- SSD: 970 EVO NVMe SSD, PCIe 3.0 M.2 Type 2280, 1 TB
- Graphics card: Gigabyte Radeon RX 5700 XT 8GB
- Case: DAN Cases A4-SFX V4.1 Mini-ITX
- Power supply: Corsair SF Series Platinum SF750
- Mainboard: Gigabyte Z390 I Aorus Pro WiFi, Intel Z390

While installing the software, I learned that the WLAN/Bluetooth chip on the Gigabyte Z390 is not compatible with macOS. I could either have replaced the chip with a Broadcom chipset or, as I was too tired to disassemble the whole mainboard again, gone for a Bluetooth dongle and a WLAN dongle as a temporary solution. I chose the latter.

- Bluetooth dongle: GMYLE Bluetooth Adapter Dongle
- WLAN dongle: TP-Link Archer T2U Nano AC600

I collected reasons for this choice on various websites.

How did I assemble the hardware?

The assembly was pretty straightforward, with only minor annoyances. I used some videos from YouTube and the manuals. The DAN case is super small but so extremely well organised that (almost) everything fits perfectly. I assembled the parts in this particular order to avoid too much hassle (you only need a Phillips screwdriver):

- Motherboard

- CPU (remove the plastic cover and insert the CPU)

- RAM

- SSD (it’s under a heat sink)

- CPU Cooler

- Case (remove the side parts and the top part)

- I/O shield (attach it to the back of the case)

- Motherboard (attach it in the case)

- Power Supply (and connect it with the motherboard)

- Short test with the integrated graphics (check in the BIOS settings whether all the hardware appears)

- Graphics card (insert it into the riser slot and connect it to the power supply)

These videos helped me. I highly recommend watching them before you assemble the hardware.

Work-in-Progress: The photo was taken before I worked on the cable management.

Installing Artifactory with Docker and Ansible

The aim of this tutorial is to provision an Artifactory stack in Docker on a virtual machine, using Ansible for the provisioning. I separated the different concerns so that they can be tested, changed and run separately. Hence, I recommend that you run the parts separately, as I explain them here, in case you run into problems.

Prerequisites

On my machine, I created a directory artifactory-install. I will later push this folder to a git repository. The directory structure inside of this folder will look like this:

```
artifactory-install
├── Vagrantfile
├── artifactory
│   ├── docker-compose.yml
│   └── nginx-config
│       ├── reverse_proxy_ssl.conf
│       ├── cert.pem
│       └── key.pem
├── artifactory.yml
├── docker-compose.yml
└── docker.yml
```

Please create the subfolders artifactory (the folder that we will copy to our VM) and its nginx-config subfolder (which contains the nginx configuration for the reverse proxy as well as the certificate and key).

Installing a Virtual Machine with Vagrant

I use the following Vagrantfile. The details are explained in Vagrant in a Nutshell. You might want to experiment with the VirtualBox parameters.

```ruby
Vagrant.configure("2") do |config|
  config.vm.define "artifactory" do |web|
    # Resources for this machine
    web.vm.provider "virtualbox" do |vb|
      vb.memory = "2048"
      vb.cpus = "1"
    end
    web.vm.box = "ubuntu/xenial64"
    web.vm.hostname = "artifactory"
    # Define public network. If not present, Vagrant will ask.
    web.vm.network "public_network", bridge: "en0: Wi-Fi (AirPort)"
    # Disable vagrant ssh and log into the machine by plain ssh:
    # your public key replaces the key Vagrant put into authorized_keys
    web.vm.provision "file", source: "~/.ssh/id_rsa.pub", destination: "~/.ssh/authorized_keys"
    # Install Python to be able to provision the machine using Ansible
    web.vm.provision "shell", inline: "which python || sudo apt -y install python"
  end
end
```

Installing Docker

As Artifactory will run as a Docker container, we have to install the Docker environment first. In my playbook (docker.yml), I use Jeff Geerling's Ansible role to install Docker and docker-compose. The role variables are explained in its README.md. You might have to adapt this YAML file, e.g. to define different users.

```yaml
- name: Install Docker
  hosts: artifactory
  become: yes
  become_method: sudo
  tasks:
    - name: Install Docker and docker-compose
      include_role:
        name: geerlingguy.docker
      vars:
        docker_edition: 'ce'
        docker_package_state: present
        docker_install_compose: true
        docker_compose_version: "1.22.0"
        docker_users:
          - vagrant
```

Before you run this playbook, you have to install the Ansible role:

```shell
ansible-galaxy install geerlingguy.docker
```

In addition, make sure you have added the IP address of the VM to your Ansible inventory, under the name artifactory that the playbooks use in hosts: artifactory:

```shell
sudo vi /etc/ansible/hosts
```

Then you can run the Docker playbook with:

```shell
ansible-playbook docker.yml
```

Installing Artifactory

I will show the Artifactory Playbook (artifactory.yml) first and then we go through the different steps.

```yaml
- name: Install Artifactory
  hosts: artifactory
  become: yes
  become_method: sudo
  tasks:
    - name: Check if artifactory folder exists
      stat:
        path: artifactory
      register: artifactory_home

    - name: Clean up docker-compose
      command: >
        docker-compose down
      args:
        chdir: ./artifactory/
      when: artifactory_home.stat.exists

    - name: Delete artifactory working-dir
      file:
        state: absent
        path: artifactory
      when: artifactory_home.stat.exists

    - name: Copy artifactory working-dir
      synchronize:
        src: ./artifactory/
        dest: artifactory

    - name: Generate a Self Signed OpenSSL certificate
      command: >
        openssl req -subj '/CN=localhost' -x509 -newkey rsa:4096 -nodes
        -keyout key.pem -out cert.pem -days 365
      args:
        chdir: ./artifactory/nginx-config/

    - name: Call docker-compose to run artifactory-stack
      command: >
        docker-compose -f docker-compose.yml up -d
      args:
        chdir: ./artifactory/
```

Clean-Up a previous Artifactory Installation

As we will see later, the magic happens in the ~/artifactory folder on the VM. So first we clean up a previous installation, i.e. stop and remove the running containers. There are different ways to achieve this. I use docker-compose down, which terminates without an error even if no container is running. In addition, I delete the artifactory folder with all subfolders (if present).

Copy nginx-Configuration and docker-compose.yml

The artifactory folder includes the docker-compose.yml to install the Artifactory stack and the nginx configuration (both described below). They will be copied to a directory with the same name on the remote host.

I use the synchronize module to copy the files, as since Python 3.6 there currently seems to be a problem that prevents copying a directory recursively with the copy module. Unfortunately, synchronize asks for your SSH password again. There are workarounds that make sense but don't look elegant to me, so I avoid them ;).

Set-Up nginx Configuration

I will use nginx as a reverse proxy that also allows a secure connection. The configuration file is static and located in the nginx-config subfolder (reverse_proxy_ssl.conf):

```nginx
server {
    listen 443 ssl;
    listen 8080;
    ssl_certificate     /etc/nginx/conf.d/cert.pem;
    ssl_certificate_key /etc/nginx/conf.d/key.pem;

    location / {
        proxy_pass http://artifactory:8081;
    }
}
```

The configuration is described in the nginx docs. You might have to adapt this file to your needs.

The proxy_pass is set to the service name inside of the Docker network (a bridge network with docker-compose, an overlay network with docker stack), as defined in the docker-compose.yml. I open port 443 for an SSL connection and 8080 for a non-SSL connection.

Create a Self-Signed Certificate

We will create a self-signed certificate on the remote host inside of the nginx-config folder:

```shell
openssl req -subj '/CN=localhost' -x509 -newkey rsa:4096 -nodes -keyout key.pem -out cert.pem -days 365
```

The certificate and key are referenced in reverse_proxy_ssl.conf, as explained above. You might run into problems with your browser not accepting this self-signed certificate. A Google search might provide some relief.

Run Artifactory

As mentioned above, we will run Artifactory behind a reverse proxy, with PostgreSQL as its datastore.

```yaml
version: "3"
services:
  postgresql:
    image: postgres:latest
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    environment:
      - POSTGRES_DB=artifactory
      - POSTGRES_USER=artifactory
      - POSTGRES_PASSWORD=artifactory
    volumes:
      - ./data/postgresql:/var/lib/postgresql/data
  artifactory:
    image: docker.bintray.io/jfrog/artifactory-oss:latest
    user: "${UID}:${GID}"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    environment:
      - DB_TYPE=postgresql
      - DB_USER=artifactory
      - DB_PASSWORD=artifactory
    volumes:
      - ./data/artifactory:/var/opt/jfrog/artifactory
    depends_on:
      - postgresql
  nginx:
    image: nginx:latest
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - ./nginx-config:/etc/nginx/conf.d
    ports:
      - "443:443"
      - "8080:8080"
    depends_on:
      - artifactory
      - postgresql
```

Artifactory

I use the image artifactory-oss:latest from JFrog (as found on JFrog Bintray). On GitHub, you can find some examples of how to use the Artifactory Docker image.

I am not entirely satisfied, as out of the box I receive a "Mounted directory must be writable by user 'artifactory' (id 1030)" error when I bind /var/opt/jfrog/artifactory inside of the container to the folder ./data/artifactory on the VM. The Dockerfile for this image runs a few steps as a user "artifactory". I don't have such a user on my VM (and don't want to create one). A workaround seems to be to set the user ID and group ID inside of the docker-compose.yml, as described here.

Alternatively, you can use the Artifactory Docker image from Matt Grüter provided on Docker Hub. However, it doesn't work with PostgreSQL out of the box, and you have to use Artifactory's internal database (Derby). In addition, the latest image from Matt is built on version 3.9.2 (the current version is 6.2.0, as of 18/08/2018). Hence, you would have to build a new image and upload it to your own repository. Sure, if we use docker-compose to deploy our services, we could add a build section to the docker-compose.yml. But if we use docker stack to run our services, the build section will be ignored.

I do not publish a port (the default is 8081), as I want users to access Artifactory only through the reverse proxy.
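A caveat with user: "${UID}:${GID}": as far as I know, docker-compose substitutes such variables from the shell environment or from a .env file next to docker-compose.yml. Bash does not export UID by default and does not define GID at all, so both may end up empty, especially when docker-compose is called from Ansible's command module. The following minimal sketch writes the IDs into a .env file; write_compose_env and compose_env_text are hypothetical helpers, and I assume you run it on the VM as the user that owns ~/artifactory/data/artifactory. I believe docker stack deploy ignores .env files, so for a swarm you would have to export the variables in the shell instead.

```python
"""Sketch: write UID/GID for docker-compose variable substitution."""
import os
from pathlib import Path
from typing import Optional


def compose_env_text(uid: int, gid: int) -> str:
    """Return .env content defining the UID and GID variables."""
    return f"UID={uid}\nGID={gid}\n"


def write_compose_env(project_dir: str,
                      uid: Optional[int] = None,
                      gid: Optional[int] = None) -> Path:
    """(Over)write <project_dir>/.env; defaults to the current user's IDs.

    os.getuid()/os.getgid() are POSIX-only, which fits the Ubuntu VM.
    Any existing .env content is replaced.
    """
    uid = os.getuid() if uid is None else uid
    gid = os.getgid() if gid is None else gid
    env_file = Path(project_dir).expanduser() / ".env"
    env_file.write_text(compose_env_text(uid, gid))
    return env_file
```

On the VM, write_compose_env("~/artifactory") would create ~/artifactory/.env before docker-compose up runs.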

PostgreSQL

I use the official PostgreSQL Docker image from Docker Hub. The data volume inside of the container is bound to the folder ~/artifactory/data/postgresql on the VM. The credentials have to match the credentials of the artifactory service. I don't publish a port, as I don't want to use the database outside of Docker.

A separate database pays off when you have intensive usage or a high load on your database, as the embedded database (Derby) might then slow things down.
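Because the two services are only linked through their environment variables, a typo in one of them only shows up at runtime as a failed database login. A small sketch of a consistency check, assuming the docker-compose.yml has already been loaded into a Python dict (e.g. with PyYAML's yaml.safe_load, not shown) and uses the KEY=value list form shown above; db_credentials_match and env_list_to_dict are hypothetical helpers.

```python
"""Sketch: check that Artifactory's DB credentials match PostgreSQL's."""
from typing import Dict, List


def env_list_to_dict(env: List[str]) -> Dict[str, str]:
    """Convert docker-compose's list form ["KEY=value", ...] into a dict."""
    return dict(item.split("=", 1) for item in env)


def db_credentials_match(compose: dict) -> bool:
    """True if DB_USER/DB_PASSWORD are set and equal POSTGRES_USER/POSTGRES_PASSWORD."""
    services = compose["services"]
    pg = env_list_to_dict(services["postgresql"]["environment"])
    art = env_list_to_dict(services["artifactory"]["environment"])
    pairs = [("DB_USER", "POSTGRES_USER"), ("DB_PASSWORD", "POSTGRES_PASSWORD")]
    return all(art.get(a) is not None and art.get(a) == pg.get(p)
               for a, p in pairs)
```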

Nginx

I use nginx as described above. The folder ~/artifactory/nginx-config, which contains the custom configuration reverse_proxy_ssl.conf, is bound to /etc/nginx/conf.d inside of the Docker container. I publish port 443 (SSL) and 8080 (non-SSL) to the world outside of the Docker container.

Summary

To get the whole thing started, you have to:

- Create a VM (or have some physical or virtual machine where you want to install Artifactory) with Python (as needed by Ansible)

- Register the VM in the Ansible inventory (/etc/ansible/hosts)

- Start the Ansible playbook docker.yml to install Docker on the VM (as a prerequisite to run Artifactory)

- Start the Ansible playbook artifactory.yml to install Artifactory (plus PostgreSQL and a reverse proxy)
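After the second playbook has finished, Artifactory still needs some time to start up. As a smoke test, here is a minimal sketch that polls Artifactory's system ping REST endpoint (/artifactory/api/system/ping, which answers OK once the server is ready) through the nginx proxy on port 8080. The host name artifactory.local and the helper names are hypothetical; replace the host with your VM's IP address. The fetch parameter only exists so that the polling loop can be tried without a server.

```python
"""Sketch: wait until Artifactory answers its ping endpoint via nginx."""
import http.client
import time
import urllib.request
from typing import Callable

# Hypothetical host name; use the IP address of your VM instead.
PING_URL = "http://artifactory.local:8080/artifactory/api/system/ping"


def http_get(url: str, timeout: float = 5.0) -> str:
    """Return the response body, or "" if the request fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):
        # Not reachable yet; HTTP errors such as a 502 from nginx
        # while Artifactory boots also end up here (URLError is an OSError).
        return ""


def wait_for_artifactory(url: str = PING_URL, attempts: int = 30,
                         interval: float = 10.0,
                         fetch: Callable[[str], str] = http_get) -> bool:
    """Poll `url` until the body is "OK"; give up after `attempts` tries."""
    for attempt in range(attempts):
        if fetch(url).strip() == "OK":
            return True
        if attempt < attempts - 1:
            time.sleep(interval)
    return False
```

Calling wait_for_artifactory() right after ansible-playbook artifactory.yml waits up to roughly five minutes with the defaults above.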

I recommend adapting the different parts to your needs. I am sure you could also improve a lot. Of course, you can include the Ansible playbooks (docker.yml and artifactory.yml) directly in the provisioning part of your Vagrantfile. In this case, you only have to run vagrant up.

Integrating Artifactory with Maven

This article describes how to configure Maven with Artifactory. In my case, the automatically generated settings.xml for Maven (in ~/.m2/) didn't include the encrypted password. You can retrieve the encrypted password as described here. Make sure you update your Custom Base URL in the General Settings, as it will be used to generate the settings.xml.

Possible Error: Broken Pipe

I ran into an authentication problem when I first tried to deploy a snapshot archive from my project to Artifactory. It appeared when I ran mvn deploy (use the -X parameter for more verbose output):

```
Caused by: org.eclipse.aether.transfer.ArtifactTransferException: Could not transfer artifact com.vividbreeze.springboot.rest:demo:jar:0.0.1-20180818.082818-1 from/to central (http://artifactory.intern.vividbreeze.com:8080/artifactory/ext-release-local): Broken pipe (Write failed)
```

A broken pipe can mean anything, and you will find a lot when you google it. A closer look at the access.log on the VM running Artifactory revealed this entry:

```
2018-08-18 08:28:19,165 [DENIED LOGIN] for chris/192.168.0.5.
```
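To spot such entries without scrolling through the whole file, here is a small sketch that filters DENIED LOGIN lines. The regular expression is modelled only on the single log line above, so other Artifactory versions might format entries differently. The log path in the usage comment is my assumption, derived from the ./data/artifactory volume binding in docker-compose.yml.

```python
"""Sketch: list denied logins from Artifactory's access.log."""
import re
from typing import Iterable, List, Tuple

# Modelled on: "2018-08-18 08:28:19,165 [DENIED LOGIN] for chris/192.168.0.5."
DENIED_LOGIN = re.compile(
    r"^(?P<timestamp>\S+ \S+) \[DENIED LOGIN\] for "
    r"(?P<user>[^/\s]+)/(?P<ip>\S+?)\.?$"  # trailing period is optional
)


def denied_logins(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (timestamp, user, ip) for every DENIED LOGIN entry."""
    hits = []
    for line in lines:
        match = DENIED_LOGIN.match(line.strip())
        if match:
            hits.append((match["timestamp"], match["user"], match["ip"]))
    return hits


# Usage on the VM (assumed path, expand "~" yourself):
# with open("/home/<user>/artifactory/data/artifactory/logs/access.log") as f:
#     print(denied_logins(f))
```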

The reason was that I had provided a wrong encrypted password (see above) in ~/.m2/settings.xml. Be aware that the encrypted password changes every time you deploy a new version of Artifactory.

Possible Error: Request Entity Too Large

Another error I ran into when I deployed a very large jar (this can happen with Spring Boot apps that carry a lot of luggage) was "Return code is: 413, ReasonPhrase: Request Entity Too Large":

```
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-deploy-plugin:2.8.2:deploy (default-deploy) on project demo: Failed to deploy artifacts: Could not transfer artifact com.vividbreeze.springboot.rest:demo:jar:0.0.1-20180819.092431-3 from/to snapshots (http://artifactory.intern.vividbreeze.com:8080/artifactory/libs-snapshot): Failed to transfer file: http://artifactory.intern.vividbreeze.com:8080/artifactory/libs-snapshot/com/vividbreeze/springboot/rest/demo/0.0.1-SNAPSHOT/demo-0.0.1-20180819.092431-3.jar. Return code is: 413, ReasonPhrase: Request Entity Too Large. -> [Help 1]
```

I wasn't able to find anything in the Artifactory logs, nor in the STDOUT/STDERR of the nginx container. However, I assumed that there might be a limit on the maximum request body size (nginx's client_max_body_size defaults to 1 MB). As the jar was over 20 MB, I added the following line to ~/artifactory/nginx-config/reverse_proxy_ssl.conf:

```nginx
server {
    ...
    client_max_body_size 30M;
    ...
}
```

Further Remarks

So basically you have to run three scripts to run Artifactory on a VM. Of course, you can add the two playbooks to the provisioning part of the Vagrantfile. For the sake of easier debugging (something will probably go wrong), I recommend running them separately.

The set-up here is for a local or small-team installation of Artifactory, as Vagrant and docker-compose are tools made for development. However, I added a deploy section to the docker-compose.yml, so you can easily set up a swarm and run the docker-compose.yml with docker stack. Instead of Vagrant, you can use Terraform, Apache Mesos or other tools to build an infrastructure in production.

To further pre-configure Artifactory, you can use the Artifactory REST API or provide custom configuration files in artifactory/data/artifactory/etc/.

Docker Swarm – Multiple Nodes

In the first part of this series, we built a Docker swarm consisting of just one node (our local machine). Nodes can act as swarm managers and/or swarm workers. Now we want to create a swarm that spans more than one node (machine).

Creating a Swarm

Creating the Infrastructure

First, we set up a cluster consisting of virtual machines. We have used Vagrant before to create virtual machines on our local machine. Here, we will use docker-machine to create virtual machines on VirtualBox (you should have VirtualBox installed on your computer). docker-machine is a tool to create Docker VMs; however, it should not be used in production, where more configuration of a virtual machine is needed.

```shell
docker-machine create --driver virtualbox vm-manager
docker-machine create --driver virtualbox vm-worker1
```

docker-machine uses a lightweight Linux distribution (boot2docker) including Docker, which starts within seconds (after the image has been downloaded). As an alternative, you might use the alpine2docker Vagrant box.

Let us have a look at our virtual machines:

```shell
docker-machine ls
```

```
NAME         ACTIVE   DRIVER       STATE     URL                         SWARM   DOCKER        ERRORS
vm-manager   -        virtualbox   Running   tcp://192.168.99.104:2376           v18.06.0-ce
vm-worker1   -        virtualbox   Running   tcp://192.168.99.105:2376           v18.06.0-ce
```

Setting Up the Swarm

As the name suggests, our first VM (vm-manager) will be a swarm manager, while the other machine (vm-worker1) will be a swarm worker. Let us log into our first virtual machine and define it as a swarm manager:

```shell
docker-machine ssh vm-manager
docker swarm init
```

You might run into an error message such as

```
Error response from daemon: could not choose an IP address to advertise since this system has multiple addresses on different interfaces (10.0.2.15 on eth0 and 192.168.99.104 on eth1) - specify one with --advertise-addr
```

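I initialised the swarm with the address 192.168.99.104 on eth1, the VirtualBox host-only network through which the VMs can reach each other (10.0.2.15 on eth0 is the VirtualBox NAT interface). You can get the IP address of a machine using docker-machine ip vm-manager (outside of the VM).

So now let us try to initialise the swarm again: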

```shell
docker swarm init --advertise-addr 192.168.99.104
```

```
Swarm initialized: current node (9cnhj2swve2rynyh6fx7h72if) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-2k1c6126hvjkch1ub74gea0hkcr1timpqlcxr5p4msm598xxg7-6fj5ccmlqdjgrw36ll2t3zr2t 192.168.99.104:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

The output displays the command to add a worker to the swarm. So now we can log into our worker VM and execute this command to join the swarm as a worker. You don't have to open a secure shell on the VM explicitly; you can also execute a command on the VM directly:

```shell
docker-machine ssh vm-worker1 docker swarm join --token SWMTKN-1-2k1c6126hvjkch1ub74gea0hkcr1timpqlcxr5p4msm598xxg7-6fj5ccmlqdjgrw36ll2t3zr2t 192.168.99.104:2377
```

You should see something like

```
This node joined a swarm as a worker.
```

To see if everything ran smoothly, we can list the nodes in our swarm:

```shell
docker-machine ssh vm-manager docker node ls
```

```
ID                            HOSTNAME     STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
9cnhj2swve2rynyh6fx7h72if *   vm-manager   Ready    Active         Leader           18.06.0-ce
sfflcyts946pazrr2q9rjh79x     vm-worker1   Ready    Active                          18.06.0-ce
```

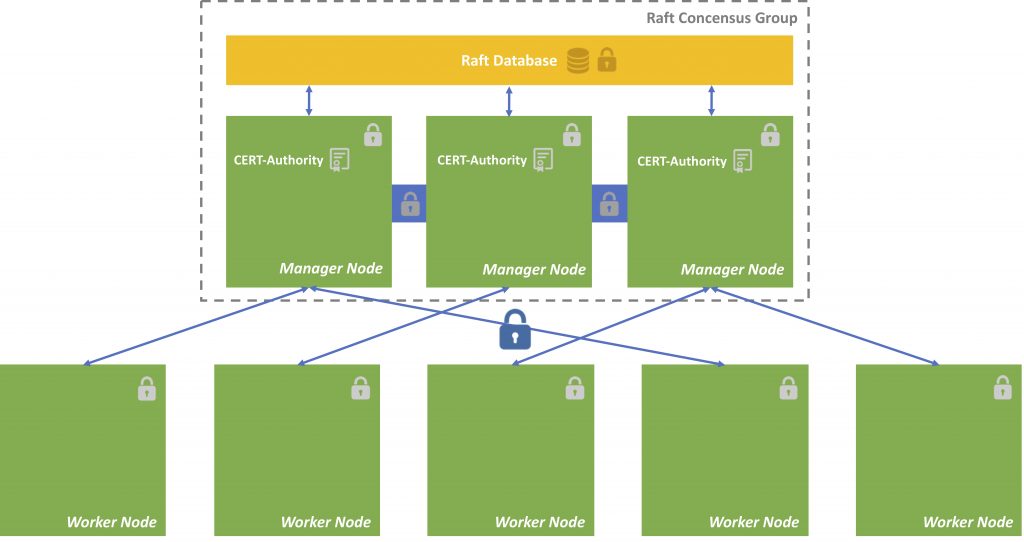

The main difference between a worker node and a manager node is that managers are workers that can also control the swarm. The node on which I invoke swarm init becomes the swarm leader (and a manager, by default). There can be several managers, but only one swarm leader.

During initialisation, a root certificate for the swarm is created, a certificate is issued for this first node and the join tokens for new managers and workers are created.

Most of the swarm data (such as certificates and configurations) is stored in a so-called Raft database. This database is distributed over all manager nodes. Raft is a consensus algorithm that ensures consistency across the manager nodes (Docker has its own implementation of the Raft algorithm). thesecretlivesofdata.com provides a brilliant tutorial about the Raft algorithm.
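One consequence of Raft worth keeping in mind when you add managers: the managers can only agree on changes to the swarm while a majority (quorum) of them is reachable. Docker's documentation states that a swarm with N managers tolerates the loss of at most (N-1)/2 managers. The arithmetic as a small sketch (the function names are my own):

```python
"""Sketch: Raft quorum size and fault tolerance for N swarm managers."""


def quorum(managers: int) -> int:
    """Smallest majority of managers needed to agree on swarm changes."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers may fail while a quorum remains, i.e. (N - 1) // 2."""
    return managers - quorum(managers)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} managers: quorum {quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

This is why an odd number of managers is usually recommended: going from three to four managers raises the quorum from two to three without tolerating any additional failure.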

Most data is stored encrypted on the nodes. The communication inside of the swarm is encrypted.

Dictate Docker to Run Commands against a Particular Node

Set environment variables to dictate that docker should run a command against a particular machine. When we created vm-manager with docker-machine create, the output looked like this:

```
Running pre-create checks...
Creating machine...
(vm-manager) Copying /Users/vividbreeze/.docker/machine/cache/boot2docker.iso to /Users/vividbreeze/.docker/machine/machines/vm-manager/boot2docker.iso...
(vm-manager) Creating VirtualBox VM...
(vm-manager) Creating SSH key...
(vm-manager) Starting the VM...
(vm-manager) Check network to re-create if needed...
(vm-manager) Waiting for an IP...
Waiting for machine to be running, this may take a few minutes...
Detecting operating system of created instance...
Waiting for SSH to be available...
Detecting the provisioner...
Provisioning with boot2docker...
Copying certs to the local machine directory...
Copying certs to the remote machine...
Setting Docker configuration on the remote daemon...
Checking connection to Docker...
Docker is up and running!
To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env vm-manager
```

The last line tells you how to connect your client (your machine) to the virtual machine you just created. docker-machine env vm-manager prints the export statements for the necessary Docker environment variables with the values for the VM vm-manager:

```
export DOCKER_TLS_VERIFY="1"
export DOCKER_HOST="tcp://192.168.99.109:2376"
export DOCKER_CERT_PATH="/Users/vividbreeze/.docker/machine/machines/vm-manager"
export DOCKER_MACHINE_NAME="vm-manager"
# Run this command to configure your shell:
# eval $(docker-machine env vm-manager)
```

eval $(docker-machine env vm-manager) tells docker to run all commands against vm-manager, e.g. docker node ls or docker image ls will now also run against the VM vm-manager.

So now I can use docker node ls directly to list the nodes in my swarm, as all docker commands now run against Docker on vm-manager (before, I had to use docker-machine ssh vm-manager docker node ls).
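If you drive Docker from a script rather than an interactive shell, you can apply the same variables without eval. A minimal sketch, assuming the POSIX-shell output format shown above: it parses the export lines printed by docker-machine env and passes them to a single docker call. parse_env_exports and docker_env_for are hypothetical helpers.

```python
"""Sketch: use docker-machine env output without eval."""
import os
import shlex
import subprocess
from typing import Dict


def parse_env_exports(output: str) -> Dict[str, str]:
    """Parse lines like: export DOCKER_HOST="tcp://..." into a dict."""
    env: Dict[str, str] = {}
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("export "):
            continue  # skips comments such as "# Run this command ..."
        key, _, raw_value = line[len("export "):].partition("=")
        parts = shlex.split(raw_value)  # removes the surrounding quotes
        env[key] = parts[0] if parts else ""
    return env


def docker_env_for(machine: str) -> Dict[str, str]:
    """Current environment plus the DOCKER_* variables for `machine`."""
    out = subprocess.run(["docker-machine", "env", machine],
                         capture_output=True, text=True, check=True).stdout
    return {**os.environ, **parse_env_exports(out)}


# Usage (requires docker-machine and the VM from above):
# subprocess.run(["docker", "node", "ls"], env=docker_env_for("vm-manager"), check=True)
```

Unlike eval, this only affects the one subprocess, so your shell keeps talking to your local Docker.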

To reverse this, use docker-machine env -u and subsequently eval $(docker-machine env -u).

Deploying the Application

Now we can use the example from part I of the tutorial. Here is a copy of my docker-compose.yml, so you can follow this example (I increased the number of replicas from 3 to 5):

```yaml
version: "3"
services:
  dataservice:
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 5
      restart_policy:
        condition: on-failure
    ports:
      - "4000:8080"
```

Let us deploy our service as described in docker-compose.yml and name the stack dataapp:

```shell
docker stack deploy -c docker-compose.yml dataapp
```

```
Creating network dataapp_default
Creating service dataapp_dataservice
```

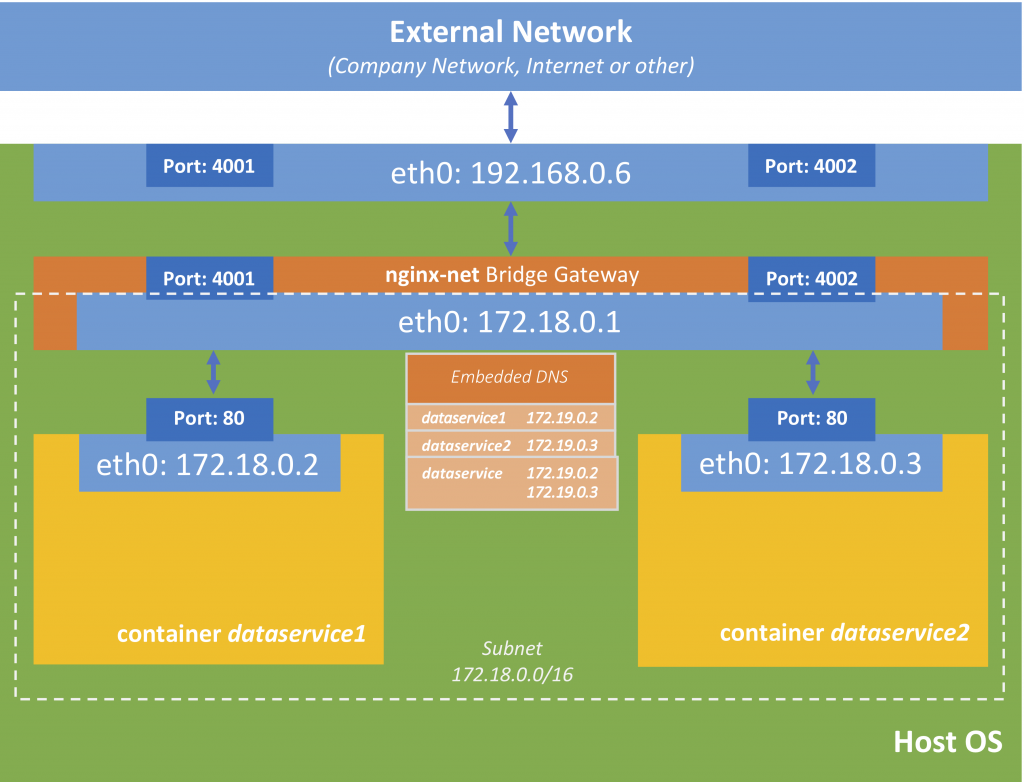

Docker created a new service called dataapp_dataservice and a network called dataapp_default. The network is a private network that lets the services of this stack communicate with each other. We will have a closer look at networking in the next tutorial. So far, nothing new, as it seems.

Let us have a closer look at our dataservice:

```shell
docker stack ps dataapp
```

```
ID             NAME                    IMAGE                                  NODE                    DESIRED STATE   CURRENT STATE                    ERROR   PORTS
s79brivew6ye   dataapp_dataservice.1   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running less than a second ago
gn3sncfbzc2s   dataapp_dataservice.2   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running less than a second ago
wg5184iug130   dataapp_dataservice.3   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running less than a second ago
i4a90y3hd6i6   dataapp_dataservice.4   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running less than a second ago
b4prawsu81mu   dataapp_dataservice.5   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running less than a second ago
```

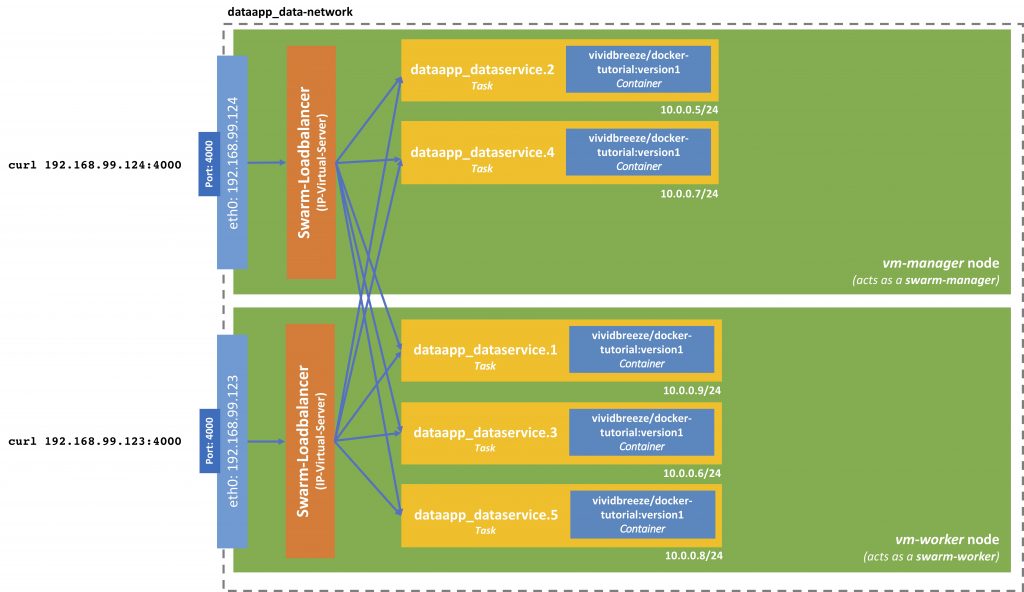

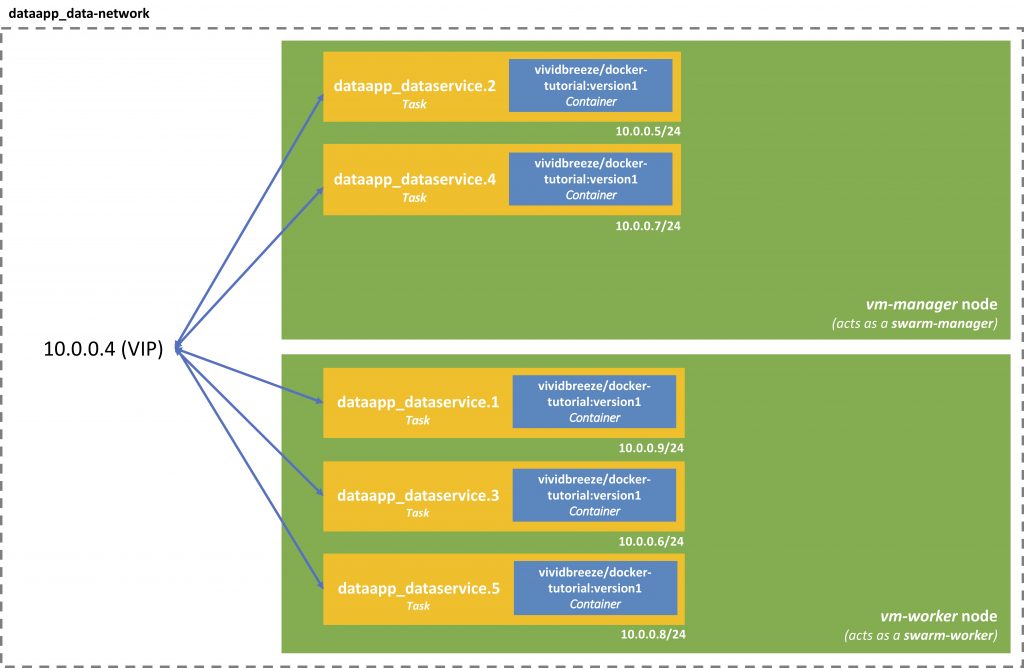

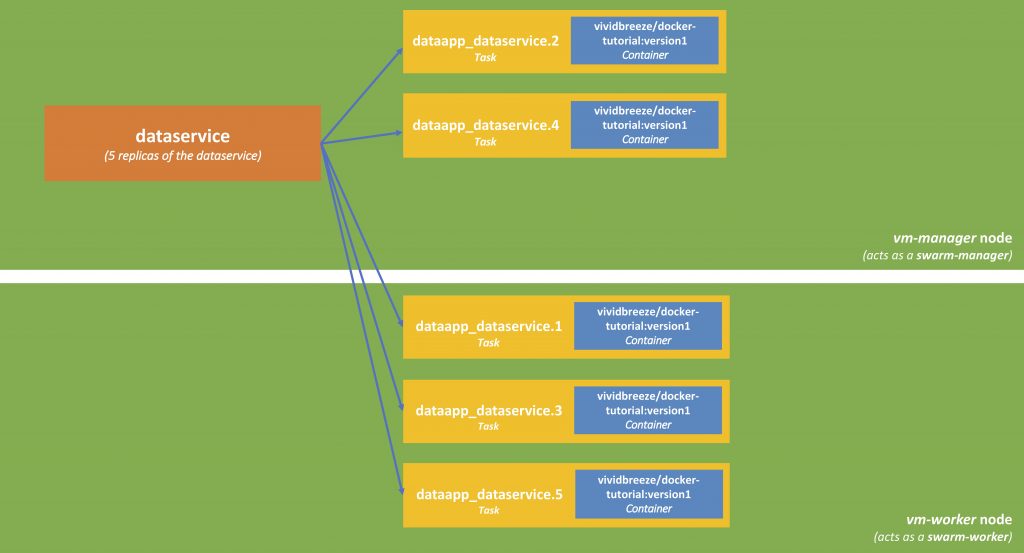

As you can see, the load was distributed to both VMs, no matter which role they have (swarm manager or swarm worker).
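With more replicas and nodes, counting tasks per node is easier than reading the table. A minimal sketch, assuming you ask docker stack ps for only the node column via its --format option and hide old (shut down) tasks with --filter desired-state=running; tasks_per_node and stack_distribution are hypothetical helpers.

```python
"""Sketch: count running tasks of a stack per node."""
import subprocess
from collections import Counter


def tasks_per_node(node_column: str) -> Counter:
    """Count node names, one line per task, as printed with --format '{{.Node}}'."""
    return Counter(line.strip() for line in node_column.splitlines()
                   if line.strip())


def stack_distribution(stack: str) -> Counter:
    """Run docker stack ps for `stack`, keeping only tasks meant to be running."""
    out = subprocess.run(
        ["docker", "stack", "ps", stack,
         "--filter", "desired-state=running",
         "--format", "{{.Node}}"],
        capture_output=True, text=True, check=True).stdout
    return tasks_per_node(out)


# Usage, with the Docker CLI pointed at the manager (see eval above):
# print(stack_distribution("dataapp"))
```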

The requests can now go against the IP of either the manager VM or the worker VM. You can obtain the IP address of vm-manager with docker-machine ip vm-manager.

Now let us fire 10 requests against vm-manager to see if our service works (repeat is a zsh built-in; in bash, use for i in $(seq 10); do curl 192.168.99.109:4000; echo; done):

```shell
repeat 10 { curl 192.168.99.109:4000; echo }
```

```
<?xml version="1.0" ?><result><name>hello</name><id>139</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>3846</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>149</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2646</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>847</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>139</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>3846</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>149</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2646</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>847</id></result>
```
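Instead of eyeballing the XML, you can count how many different ids come back. A minimal sketch using only the Python standard library; it assumes, as the output above suggests, that the id value differs per container, and the URL in the usage comment is the vm-manager address from this example.

```python
"""Sketch: fire N requests at the swarm and count distinct response ids."""
import re
import urllib.request
from collections import Counter
from typing import Iterable

ID_PATTERN = re.compile(r"<id>(\d+)</id>")


def fetch(url: str, timeout: float = 5.0) -> str:
    """Return the body of a single GET request."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def count_ids(bodies: Iterable[str]) -> Counter:
    """Count how often each <id> value appears in the response bodies."""
    ids: Counter = Counter()
    for body in bodies:
        match = ID_PATTERN.search(body)
        if match:
            ids[match.group(1)] += 1
    return ids


# Usage (address of vm-manager in this example):
# ids = count_ids(fetch("http://192.168.99.109:4000") for _ in range(10))
# print(len(ids), "different containers answered:", dict(ids))
```

With five replicas and round-robin load balancing, the ten responses above yield five ids, each seen twice.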

If everything is working, we should see five different responses (as five replicas were deployed in our swarm). The following picture shows how services are deployed.

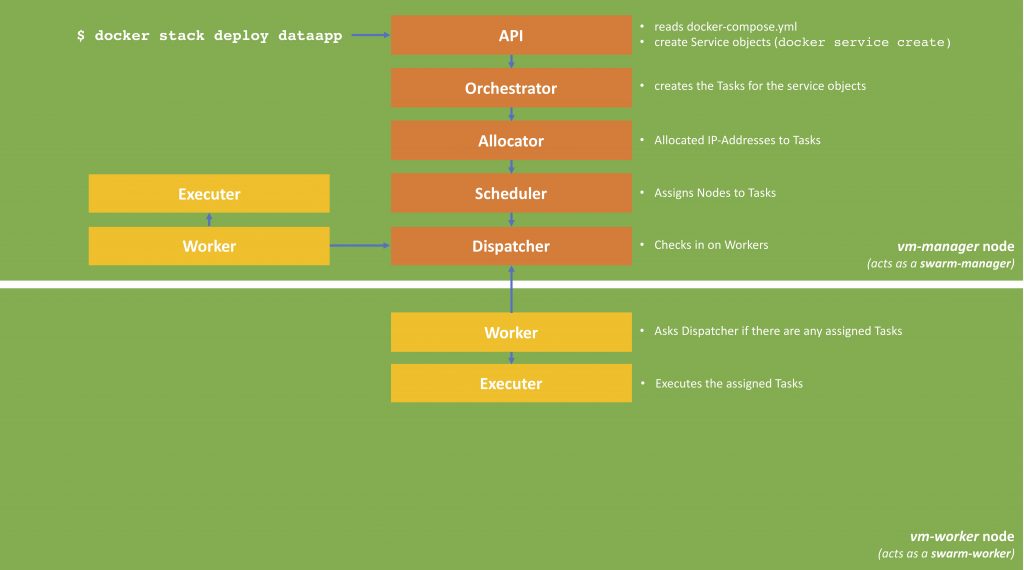

Docker Stack Deployment Process

When we call docker stack deploy, the command transfers the docker-compose.yml to the swarm manager; the manager creates the services and deploys them to the swarm (as defined in the deploy section of the docker-compose.yml). Each of the replicas (in our case 5) is called a task. Each task is deployed on exactly one node (basically, a container with the service image is started on this node); this can be a swarm manager or a swarm worker. The result is depicted in the next picture.

Docker Service Deployment

Managing the Swarm

Dealing with a Crashed Node

In the last tutorial, we defined a restart policy, so the swarm manager will automatically start a new container in case one crashes. Let us now see what happens when we remove our worker:

```shell
docker-machine rm vm-worker1
```

We can see that while the server (vm-worker1) is shutting down, new tasks are created on vm-manager:

```shell
docker stack ps dataapp
```

```
ID             NAME                        IMAGE                                  NODE         DESIRED STATE   CURRENT STATE                   ERROR   PORTS
vc26y4375kbt   dataapp_dataservice.1       vividbreeze/docker-tutorial:version1   vm-manager   Ready           Ready less than a second ago
kro6i06ljtk6    \_ dataapp_dataservice.1   vividbreeze/docker-tutorial:version1   vm-worker1   Shutdown        Running 12 minutes ago
ugw5zuvdatgp   dataapp_dataservice.2       vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 12 minutes ago
u8pi3o4jn90p   dataapp_dataservice.3       vividbreeze/docker-tutorial:version1   vm-manager   Ready           Ready less than a second ago
hqp9to9puy6q    \_ dataapp_dataservice.3   vividbreeze/docker-tutorial:version1   vm-worker1   Shutdown        Running 12 minutes ago
iqwpetbr9qv2   dataapp_dataservice.4       vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 12 minutes ago
koiy3euv9g4h   dataapp_dataservice.5       vividbreeze/docker-tutorial:version1   vm-manager   Ready           Ready less than a second ago
va66g4n3kwb5    \_ dataapp_dataservice.5   vividbreeze/docker-tutorial:version1   vm-worker1   Shutdown        Running 12 minutes ago
```

A moment later, you will see all 5 tasks up and running again.

Dealing with Increased Workload in a Swarm

Increasing the number of Nodes

Let us now assume that the workload on our dataservice is growing rapidly. Firstly, we can distribute the load to more VMs.

```shell
docker-machine create --driver virtualbox vm-worker2
docker-machine create --driver virtualbox vm-worker3
```

In case we forgot the token that is necessary to join our swarm as a worker, use

```shell
docker swarm join-token worker
```

Now let us add our two new machines to the swarm:

```shell
docker-machine ssh vm-worker2 docker swarm join --token SWMTKN-1-371xs3hz1yi8vfoxutr01qufa5e4kyy0k1c6fvix4k62iq8l2h-969rhkw0pnwc2ahhnblm4ic1m 192.168.99.109:2377
docker-machine ssh vm-worker3 docker swarm join --token SWMTKN-1-371xs3hz1yi8vfoxutr01qufa5e4kyy0k1c6fvix4k62iq8l2h-969rhkw0pnwc2ahhnblm4ic1m 192.168.99.109:2377
```

As you can see when using docker stack ps dataapp, the tasks were not automatically deployed to the new VMs:

```
ID             NAME                    IMAGE                                  NODE         DESIRED STATE   CURRENT STATE            ERROR   PORTS
0rp9mk0ocil2   dataapp_dataservice.1   vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 18 minutes ago
3gju7xv20ktr   dataapp_dataservice.2   vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 13 minutes ago
wwi72sji3k6v   dataapp_dataservice.3   vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 13 minutes ago
of5uketh1dbk   dataapp_dataservice.4   vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 13 minutes ago
xzmnmjnpyxmc   dataapp_dataservice.5   vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 13 minutes ago
```

The swarm manager decides when to utilise new nodes. Its main priority is to avoid disrupting running services (even when they are idle), so it does not move running tasks to new nodes on its own. Of course, you can always force an update, which might take some time:

```shell
docker service update --force dataapp_dataservice
```

Increasing the number of Replicas

In addition, we can also increase the number of replicas in our docker-compose.yml:

```yaml
version: "3"
services:
  dataservice:
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 10
      restart_policy:
        condition: on-failure
    ports:
      - "4000:8080"
```

Now run

```shell
docker stack deploy -c docker-compose.yml dataapp
```

docker stack ps dataapp shows that 5 new tasks (and respectively containers) have been created. Now the swarm manager has utilised the new VMs.

```
ID             NAME                     IMAGE                                  NODE         DESIRED STATE   CURRENT STATE            ERROR   PORTS
0rp9mk0ocil2   dataapp_dataservice.1    vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 20 minutes ago
3gju7xv20ktr   dataapp_dataservice.2    vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 15 minutes ago
wwi72sji3k6v   dataapp_dataservice.3    vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 15 minutes ago
of5uketh1dbk   dataapp_dataservice.4    vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 15 minutes ago
xzmnmjnpyxmc   dataapp_dataservice.5    vividbreeze/docker-tutorial:version1   vm-manager   Running         Running 15 minutes ago
qn1ilzk57dhw   dataapp_dataservice.6    vividbreeze/docker-tutorial:version1   vm-worker3   Running         Preparing 3 seconds ago
5eyq0t8fqr2y   dataapp_dataservice.7    vividbreeze/docker-tutorial:version1   vm-worker3   Running         Preparing 3 seconds ago
txvf6yaq6v3i   dataapp_dataservice.8    vividbreeze/docker-tutorial:version1   vm-worker2   Running         Preparing 3 seconds ago
b2y3o5iwxmx1   dataapp_dataservice.9    vividbreeze/docker-tutorial:version1   vm-worker3   Running         Preparing 3 seconds ago
rpov7buw9czc   dataapp_dataservice.10   vividbreeze/docker-tutorial:version1   vm-worker3   Running         Preparing 3 seconds ago
```

Further Remarks

To summarise:

- Use docker-machine (as an alternative to Vagrant or others) to create VMs running Docker.

- Use docker swarm to define a cluster that can run your application. The cluster can span physical machines and virtual machines (also in clouds). A machine can either be a manager or a worker.

- Define your application in a docker-compose.yml.

- Use docker stack to deploy your application in the swarm.

This again was a simple, pretty straightforward example. You can easily use docker-machine to create VMs in AWS EC2, Google Compute Engine and other cloud services. Use this script to quickly install Docker on a VM.

A quick note: please always be aware of which machine you are working on. You can easily get the Docker CLI to run against a different machine with eval $(docker-machine env vm-manager). To reverse this, use eval $(docker-machine env -u).

Docker Swarm – Single Node

In the previous tutorial, we created one small service and let it run in an isolated Docker container. In reality, your application might consist of many different services. An e-commerce application, for instance, encompasses services to register new customers, search for products, list products, show recommendations and so on. Heavily requested services might even run as several instances. So an application can be seen as a composition of different services (that run in containers).

In this first part of the tutorial, we will work with the simple application from the Docker Basics tutorial, which contains only one service. We will deploy several instances of this service and let them run on only one machine. In part II, we will scale this application across many machines.

Prerequisites

Before we start, you should have completed the first part of the tutorial series. As a result, you should have an image uploaded to the DockerHub registry. In my case, the image name is `vividbreeze/docker-tutorial:version1`.

Docker Swarm

As mentioned above, a real-world application consists of many containers spread over different hosts. Many hosts can be grouped into a so-called swarm (hosts that run Docker in swarm mode). A swarm is managed by one or more swarm managers and consists of one or many workers. Before we continue, we have to initialise a swarm on our machine:

```
docker swarm init
```

```
Swarm initialized: current node (pnb2698sy8gw3c82whvwcrd77) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-39y3w3x0iiqppn57pf2hnrtoj867m992xd9fqkd4c3p83xtej0-9mpv98zins5l0ts8j62ociz4w 192.168.65.3:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

The swarm was initialised with one node (our machine) as a swarm manager.

Docker Stack

We now have to design our application. We do this in a file called `docker-compose.yml`. So far, we have developed just one service, and it runs inside one Docker container. In this part of the tutorial, our application will consist of only one service. Now let us assume this service is heavily used and we want to scale it.

```yaml
version: "3"
services:
  dataservice:
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 3
    ports:
      - "4000:8080"
```

The file contains the name of our service and the number of instances (or replicas) that should be deployed. We now also do the port mapping here: port 8080, which is used by the service inside our container, will be mapped to port 4000 on our host.
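The `"4000:8080"` entry follows the order HOST:CONTAINER, which is easy to mix up. The following Java sketch makes that order explicit; the class `PortMapping` is hypothetical (not part of Docker) and parses only the short two-number form. The real Compose syntax also accepts host IPs, port ranges and protocol suffixes, which this sketch deliberately rejects.

```java
public class PortMapping {

    final int hostPort;       // port published on the Docker host, e.g. 4000
    final int containerPort;  // port the service listens on inside the container, e.g. 8080

    PortMapping(int hostPort, int containerPort) {
        this.hostPort = hostPort;
        this.containerPort = containerPort;
    }

    /** Parses only the short "HOST:CONTAINER" form (no IPs, ranges or "/udp" suffixes). */
    static PortMapping parse(String spec) {
        String[] parts = spec.trim().split(":");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected HOST:CONTAINER, got: " + spec);
        }
        return new PortMapping(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]));
    }

    public static void main(String[] args) {
        PortMapping mapping = parse("4000:8080");
        // Requests to the host port end up at the container port.
        System.out.println("localhost:" + mapping.hostPort + " -> container port " + mapping.containerPort);
    }
}
```

The same HOST:CONTAINER order applies to the `-p` option of `docker run`.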

To create our application, use the following command (it has to be invoked on a swarm manager node, which in our case is our local machine):

```
docker stack deploy -c docker-compose.yml dataapp
```

```
Creating network dataapp_default
Creating service dataapp_dataservice
```

Docker has now created a network `dataapp_default` and a service `dataapp_dataservice`. We will come to networking in the last part of this tutorial. By "stack", Docker means a stack of (scaled) services that together form an application. A stack is deployed on one swarm, and the command has to be called on a swarm manager.

Let us now have a look at how many containers were created:

```
docker container ls
```

```
CONTAINER ID   IMAGE                                  COMMAND              CREATED                  STATUS         PORTS   NAMES
bb18e9d71530   vividbreeze/docker-tutorial:version1   "java DataService"   Less than a second ago   Up 8 seconds           dataapp_dataservice.3.whaxlg53wxugsrw292l19gm2b
441fb80b9476   vividbreeze/docker-tutorial:version1   "java DataService"   Less than a second ago   Up 7 seconds           dataapp_dataservice.4.93x7ma6xinyde9jhearn8hjav
512eedb2ac63   vividbreeze/docker-tutorial:version1   "java DataService"   Less than a second ago   Up 6 seconds           dataapp_dataservice.1.v1o32qvqu75ipm8sra76btfo6
```

In Docker terminology, each of these containers is called a task. The containers cannot be accessed directly through the localhost and a port of their own (they have no published port); instead, they are reached through the swarm, which listens on port 4000 on the localhost. These three containers, all running the same service, are bundled together and appear as one service. This service is listed by using

```
docker service ls
```

```
ID             NAME                  MODE         REPLICAS   IMAGE                                  PORTS
zfbbxn0rgksx   dataapp_dataservice   replicated   5/5        vividbreeze/docker-tutorial:version1   *:4000->8080/tcp
```

You can see the tasks (containers) that belong to this service with

```
docker service ps dataapp_dataservice
```

```
ID             NAME                    IMAGE                                  NODE                    DESIRED STATE   CURRENT STATE            ERROR   PORTS
lmw0gnxcs57o   dataapp_dataservice.1   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running 13 minutes ago
fozpqkmrmsb3   dataapp_dataservice.2   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running 13 minutes ago
gc6dccwxw53f   dataapp_dataservice.3   vividbreeze/docker-tutorial:version1   linuxkit-025000000001   Running         Running 13 minutes ago
```

Now let us call the service 10 times

```
# zsh
repeat 10 { curl localhost:4000; echo }

# bash
for ((n=0;n<10;n++)); do curl localhost:4000; echo; done
```

```
<?xml version="1.0" ?><result><name>hello</name><id>2925</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>1624</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2515</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2925</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>1624</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2515</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2925</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>1624</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2515</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>2925</id></result>
```

Now you can see that our service was called ten times, and each time one of the replicas running inside the containers handled the request (you see three different ids). The service (`dataapp_dataservice`) acts as a load balancer; in this case, it uses a round-robin strategy.
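Round-robin simply means that requests are handed to the replicas in a fixed cyclic order. The Java sketch below replays the three ids from the output above; the class `RoundRobinBalancer` is hypothetical and only models the observed behaviour, it is not how the swarm routing mesh is implemented.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class RoundRobinBalancer {

    private final List<Integer> replicas; // one entry per replica (here: the id it returns)
    private int next = 0;                 // index of the replica that gets the next request

    RoundRobinBalancer(List<Integer> replicas) {
        if (replicas.isEmpty()) {
            throw new IllegalArgumentException("at least one replica is required");
        }
        this.replicas = new ArrayList<>(replicas);
    }

    /** Returns the replica for the current request and advances to the next one (not thread-safe). */
    int route() {
        int replica = replicas.get(next);
        next = (next + 1) % replicas.size();
        return replica;
    }

    public static void main(String[] args) {
        RoundRobinBalancer balancer = new RoundRobinBalancer(Arrays.asList(2925, 1624, 2515));
        for (int request = 1; request <= 10; request++) {
            System.out.println("request " + request + " -> id " + balancer.route());
        }
    }
}
```

Running it yields the same id sequence as the ten `curl` calls: 2925, 1624, 2515, then the cycle starts over.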

To sum it up, in the `docker-compose.yml` we defined our desired state (3 replicas of one service). Docker tries to maintain this desired state using the available resources, in our case one host (one node). A swarm manager manages the service, including the containers we have just created. The service can be reached at port 4000 on the localhost.

Restart Policy

Re-deploying the stack with a changed `docker-compose.yml` can be useful for updating the number of replicas or changing other parameters. Let us play with some of these parameters and add a restart policy to our `docker-compose.yml`:

```yaml
version: "3"
services:
  dataservice:
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 3
      restart_policy:
        condition: on-failure
    ports:
      - "4000:8080"
```

and update our configuration

```
docker stack deploy -c docker-compose.yml dataapp
```

Let us now call our service again 3 times to memorise the ids:

```
repeat 3 { curl localhost:4000; echo }
```

```
<?xml version="1.0" ?><result><name>hello</name><id>713</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>1157</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>3494</id></result>
```

Now let us get the names of our containers:

```
docker container ls -f "name=dataapp_dataservice"
```

```
CONTAINER ID   IMAGE                                  COMMAND              CREATED          STATUS          PORTS   NAMES
953e010ab4e5   vividbreeze/docker-tutorial:version1   "java DataService"   15 minutes ago   Up 15 minutes           dataapp_dataservice.1.pb0r4rkr8wzacitgzfwr5fcs7
f732ffccfdad   vividbreeze/docker-tutorial:version1   "java DataService"   15 minutes ago   Up 15 minutes           dataapp_dataservice.3.rk7seglslg66cegt6nrehzhzi
8fb716ef0091   vividbreeze/docker-tutorial:version1   "java DataService"   15 minutes ago   Up 15 minutes           dataapp_dataservice.2.0mdkfpjxpldnezcqvc7gcibs8
```

Now let us kill one of these containers to see whether the swarm manager starts a new one:

```
docker container rm -f 953e010ab4e5
```

It may take a few seconds, but then you will see a new container created by the swarm manager (the container ID of the first container is now different).

```
docker container ls -f "name=dataapp_dataservice"
```

```
CONTAINER ID   IMAGE                                  COMMAND              CREATED          STATUS          PORTS   NAMES
bc8b6fa861be   vividbreeze/docker-tutorial:version1   "java DataService"   53 seconds ago   Up 48 seconds           dataapp_dataservice.1.5aminmnu9fx8qnbzoklfbzyj5
f732ffccfdad   vividbreeze/docker-tutorial:version1   "java DataService"   17 minutes ago   Up 17 minutes           dataapp_dataservice.3.rk7seglslg66cegt6nrehzhzi
8fb716ef0091   vividbreeze/docker-tutorial:version1   "java DataService"   18 minutes ago   Up 17 minutes           dataapp_dataservice.2.0mdkfpjxpldnezcqvc7gcibs8
```
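What happens here is that the swarm keeps comparing the desired state (3 replicas) with the actual state and starts or stops tasks until both match. The Java sketch below models a single pass of such a desired-state loop; the class and method names are hypothetical, and the real swarm orchestrator additionally takes the restart policy, placement constraints and available resources into account.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ReconcileSketch {

    /**
     * One pass of a desired-state loop: compares the running tasks with the
     * desired number of replicas and returns the actions needed to converge.
     */
    static List<String> reconcile(int desiredReplicas, List<String> runningTasks) {
        List<String> actions = new ArrayList<>();
        // Too few tasks: start replacements (what happened after the rm -f above).
        for (int i = runningTasks.size(); i < desiredReplicas; i++) {
            actions.add("start new task");
        }
        // Too many tasks (e.g. after lowering the replica count): stop the surplus ones.
        for (int i = desiredReplicas; i < runningTasks.size(); i++) {
            actions.add("stop " + runningTasks.get(i));
        }
        return actions;
    }

    public static void main(String[] args) {
        // Desired state from docker-compose.yml: 3 replicas.
        // 953e010ab4e5 was removed, so only two containers are left.
        List<String> running = Arrays.asList("f732ffccfdad", "8fb716ef0091");
        System.out.println(reconcile(3, running)); // prints [start new task]
    }
}
```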

The id in the response of one of the replicas of the service has changed:

```
repeat 3 { curl localhost:4000; echo }
```

```
<?xml version="1.0" ?><result><name>hello</name><id>2701</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>1157</id></result>
<?xml version="1.0" ?><result><name>hello</name><id>3494</id></result>
```

Resource Allocation

You can also change the resources, such as CPU time and memory, that will be allocated to a service:

```yaml
...
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 3
      restart_policy:
        condition: on-failure
      resources:
        limits:
          cpus: '0.50'
          memory: 10M
        reservations:
          cpus: '0.25'
          memory: 5M
...
```

The service will be allocated at most 50% of the time of one CPU and 10 MBytes of memory, and at least 25% of one CPU and 5 MBytes of memory.
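To make these values more tangible: in the Docker Engine API, a service's CPU limit is expressed in nano-CPUs (1 CPU = 10^9 nano-CPUs) and its memory limit in bytes. The Java sketch below converts the values from the snippet above. It assumes 1024-based memory suffixes (k, m, g, optionally followed by b), which is my understanding of how Docker parses memory sizes; the class `ResourceLimits` is hypothetical and not part of any Docker library.

```java
import java.math.BigDecimal;
import java.util.Locale;

public class ResourceLimits {

    /** "0.50" -> 500000000; throws ArithmeticException if finer than 10^-9 CPUs. */
    static long toNanoCpus(String cpus) {
        return new BigDecimal(cpus.trim()).movePointRight(9).longValueExact();
    }

    /** "10M" -> 10485760; assumes 1024-based k/m/g suffixes and an optional trailing "b". */
    static long toBytes(String size) {
        String s = size.trim().toLowerCase(Locale.ROOT);
        if (s.endsWith("b")) {
            s = s.substring(0, s.length() - 1); // "10mb" -> "10m", "512b" -> "512"
        }
        long factor = 1;
        char unit = s.charAt(s.length() - 1);
        if (unit == 'k') factor = 1024L;
        else if (unit == 'm') factor = 1024L * 1024;
        else if (unit == 'g') factor = 1024L * 1024 * 1024;
        if (factor != 1) {
            s = s.substring(0, s.length() - 1); // drop the unit letter
        }
        return Long.parseLong(s) * factor;
    }

    public static void main(String[] args) {
        System.out.println("limit:       " + toNanoCpus("0.50") + " nano-CPUs, " + toBytes("10M") + " bytes");
        System.out.println("reservation: " + toNanoCpus("0.25") + " nano-CPUs, " + toBytes("5M") + " bytes");
    }
}
```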

Docker Compose

Instead of `docker stack`, you can also use `docker-compose`. `docker-compose` is a program, written in Python, that orchestrates containers on a local machine only; it ignores the deploy part of the `docker-compose.yml`.

However, `docker-compose` offers some nice debugging and clean-up functionality, e.g. if you start our application with

```
docker-compose -f docker-compose.yml up
```

you will see the logs of all services (we only have one at the moment) colour-coded in your terminal window.

```
WARNING: Some services (web) use the 'deploy' key, which will be ignored. Compose does not support 'deploy' configuration - use `docker stack deploy` to deploy to a swarm.
WARNING: The Docker Engine you're using is running in swarm mode.

Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.

To deploy your application across the swarm, use `docker stack deploy`.

Creating network "docker_default" with the default driver
Pulling web (vividbreeze/docker-tutorial:version1)...
version1: Pulling from vividbreeze/docker-tutorial
Digest: sha256:39e30edf7fa8fcb15112f861ff6908c17d514af3f9fcbccf78111bc811bc063d
Status: Downloaded newer image for vividbreeze/docker-tutorial:version1
Creating docker_web_1 ... done
Attaching to docker_web_1
```

You can see in the warning that the deploy part of your `docker-compose.yml` is ignored, as `docker-compose` focuses on the composition of services on your local machine, not across a swarm.

If you want to clean up (containers and networks; add `-v` to also remove volumes), just use

```
docker-compose down
```

`docker-compose` also allows you to build your images (`docker stack` won't), in case they haven't been built before, e.g. (note that image names must be lowercase):

```yaml
build:
  context: .
  dockerfile: Dockerfile.NewDataservice
image: dataservice-new
```

You might notice from the output of many commands that `docker-compose` behaves differently from the Docker commands. So again, use `docker-compose` only for Docker deployments on one host, or to verify a `docker-compose.yml` on your local machine before using it in production.

Further Remarks

To summarise

- Use `docker swarm` to define a cluster that runs our application. In our case, the swarm consisted of only one machine (no real cluster). In the next part of the tutorial, we will see that a cluster can span various physical and virtual machines.
- Define your application in a `docker-compose.yml`.
- Use `docker stack` to deploy your application in the swarm in a production environment, or `docker-compose` to test and deploy your application in a development environment.

Of course, there is more to services than I explained in this tutorial. However, I hope it helped as a starting point to go into more depth.

-

Docker Basics – Part II

In the previous introduction, I explained the Docker basics. Now we will write a small web service and deploy it inside a Docker container.

Prerequisites

You should have Docker (community edition) installed on the machine you are working with. As I will use Java code, you should also have Java 8 (JDK) locally installed. In addition, please create a free account at DockerHub.

For the first steps, we will use this simple web service (DataService.java), which returns a short message and an id (a random integer). The code is kept simple on purpose.

```java
import javax.xml.transform.Source;
import javax.xml.transform.stream.StreamSource;
import javax.xml.ws.Endpoint;
import javax.xml.ws.Provider;
import javax.xml.ws.WebServiceProvider;
import javax.xml.ws.http.HTTPBinding;
import java.io.StringReader;

@WebServiceProvider
public class DataService implements Provider<Source> {

    // Random id chosen once per JVM, i.e. once per container; it shows which instance answered.
    public static int RANDOM_ID = (int) (Math.random() * 5000);
    // Listen on all IPv4 interfaces so that requests from outside the container are accepted.
    public static String SERVER_URL = "http://0.0.0.0:8080/";

    // Called for every HTTP request; returns a small XML document.
    public Source invoke(Source request) {
        return new StreamSource(new StringReader("<result><name>hello</name><id>" + RANDOM_ID + "</id></result>"));
    }

    public static void main(String[] args) throws InterruptedException {
        // Publish the provider as a plain HTTP (not SOAP) endpoint.
        Endpoint.create(HTTPBinding.HTTP_BINDING, new DataService()).publish(SERVER_URL);
        // Keep the main thread alive so that the endpoint keeps serving requests.
        Thread.sleep(Long.MAX_VALUE);
    }
}
```

The web service listens on 0.0.0.0 (all IPv4 addresses of the machine), as we will use this class inside a Docker container. If we used 127.0.0.1 instead (the loopback interface inside the container), requests from outside the container would not be handled. Note that the `javax.xml.ws` API ships with Java 8 but was removed from the JDK in Java 11.

To use the service, compile the source code by using

```
javac DataService.java
```

Then run our service with

```
java DataService
```

The service should return a result when called:

```
curl localhost:8080
```

```
<?xml version="1.0" ?><result><name>hello</name><id>2801</id></result>
```

The Docker Image

Now we want to run this service inside a Docker container. First, we have to build the Docker image. To do so, we create a so-called Dockerfile with the following content:

```dockerfile
FROM openjdk:8
COPY ./DataService.class /usr/services/dataservice/
WORKDIR /usr/services/dataservice
CMD ["java", "DataService"]
```

- `FROM openjdk:8` uses the `openjdk:8` image from Docker Hub as the base image (it is downloaded if it is not available locally).
- `COPY ./DataService.class /usr/services/dataservice/` copies our bytecode into a directory inside the image.
- `WORKDIR /usr/services/dataservice` sets this folder as the working directory for all subsequent instructions.
- `CMD ["java", "DataService"]` executes the java command with our DataService class when a container is started.

Based on this image description, we now build our image, tagged with the name `dataservice-image`:

```
docker build -t dataservice-image .
```

The output below was recorded with a slightly different Dockerfile, based on `openjdk:8-alpine` and copying the class to `/usr/src/dataservice`:

```
Sending build context to Docker daemon  580.1kB
Step 1/4 : FROM openjdk:8-alpine
8-alpine: Pulling from library/openjdk
8e3ba11ec2a2: Pull complete
311ad0da4533: Pull complete
df312c74ce16: Pull complete
Digest: sha256:1fd5a77d82536c88486e526da26ae79b6cd8a14006eb3da3a25eb8d2d682ccd6
Status: Downloaded newer image for openjdk:8-alpine
 ---> 5801f7d008e5
Step 2/4 : COPY ./DataService.class /usr/src/dataservice/
 ---> e539c7ae3991
Step 3/4 : WORKDIR /usr/src/dataservice
Removing intermediate container 51e2909dd1b5
 ---> 8e290a1cc598
Step 4/4 : CMD ["java", "DataService"]
 ---> Running in f31bba4d05de
Removing intermediate container f31bba4d05de
 ---> 25176dbe486b
Successfully built 25176dbe486b
Successfully tagged dataservice-image:latest
```

When Docker builds the image, you will see the individual steps, each followed by the ID of the image it produced. When you rebuild the image, Docker checks for each step whether it has already executed the same instruction on top of the same parent image (for COPY, also with the same file contents) and, if so, reuses the cached result. As soon as a step has changed, Docker executes that step and all subsequent steps again. Let us try this:

```
docker build -t dataservice-image .
```

```
Sending build context to Docker daemon  580.1kB
Step 1/4 : FROM openjdk:8-alpine
 ---> 5801f7d008e5
Step 2/4 : COPY ./DataService.class /usr/src/dataservice/
 ---> Using cache
 ---> e539c7ae3991
Step 3/4 : WORKDIR /usr/src/dataservice
 ---> Using cache
 ---> 8e290a1cc598
Step 4/4 : CMD ["java", "DataService"]
 ---> Using cache
 ---> 25176dbe486b
Successfully built 25176dbe486b
Successfully tagged dataservice-image:latest
```

As nothing has changed, Docker uses the cached results and hence can build the image in less than a second. If we e.g. change step 2, all subsequent steps will run again.
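The reuse-or-rebuild rule can be modelled as a chain of keys in which each step's key depends on the previous key and on the step itself. The Java sketch below is a simplification under that assumption (Docker's real cache lookup differs in detail); `BuildCacheSketch` is a hypothetical class, and the `<checksum-1>`/`<checksum-2>` strings are stand-ins for the file-content checksum that Docker takes into account for COPY.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class BuildCacheSketch {

    /** Returns one key per step; each key hashes the previous key together with the step text. */
    static List<String> cacheKeys(List<String> steps) {
        List<String> keys = new ArrayList<>();
        String parentKey = "";
        for (String step : steps) {
            parentKey = sha256(parentKey + "\n" + step);
            keys.add(parentKey);
        }
        return keys;
    }

    static String sha256(String text) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(text.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be available on every Java platform", e);
        }
    }

    public static void main(String[] args) {
        List<String> before = Arrays.asList(
                "FROM openjdk:8",
                "COPY ./DataService.class /usr/services/dataservice/ <checksum-1>",
                "WORKDIR /usr/services/dataservice",
                "CMD [\"java\", \"DataService\"]");
        List<String> after = new ArrayList<>(before);
        // Simulate a recompiled DataService.class: only step 2 changes.
        after.set(1, "COPY ./DataService.class /usr/services/dataservice/ <checksum-2>");

        List<String> oldKeys = cacheKeys(before);
        List<String> newKeys = cacheKeys(after);
        for (int i = 0; i < after.size(); i++) {
            boolean cached = oldKeys.get(i).equals(newKeys.get(i));
            System.out.println("Step " + (i + 1) + ": " + (cached ? "Using cache" : "executed again"));
        }
    }
}
```

Running it reports "Using cache" only for step 1; steps 2 to 4 are executed again, because their keys all depend on the changed step 2.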

Our image should now appear in the list of Docker images on our computer

```
docker image ls
```

```
REPOSITORY          TAG      IMAGE ID       CREATED       SIZE
dataservice-image   latest   d99051bca1c7   4 hours ago   624MB
openjdk             8        8c80ddf988c8   3 weeks ago   624MB
```

I highly recommend giving the image a name (`-t [name]` option) or a name and a tag (`-t [name]:[tag]`), so that you can later reference the image by its name instead of its ID.

Docker stores these images on your local host, e.g. at `/var/lib/docker/graph/<id>` (Debian), or at `/Users/[user-name]/Library/Containers/com.docker.docker/Data` on a Mac.

Other commands regarding images that might become useful are

```
# get detailed information about the image (good for debugging)
docker image inspect dataservice-image

# delete an image
docker image rm dataservice-image

# remove all images
docker image rm $(docker image ls -a -q)

# remove all images (with force)
docker image rm -f $(docker image ls -a -q)

# show all docker image commands
docker image --help
```

Running the Container

Now that we have built our image, we can finally run a container based on it:

```
docker run --name dataservice-container -d -p4000:8080 dataservice-image
```

The `-d` option stands for detached, i.e. the container runs as a background process. The `-p` option maps the container-internal port 8080 to port 4000 on your local machine that runs Docker. The `--name` option gives the container a name, by which it can be referenced when using `docker container` commands.

You should now see the container in the list of all containers with

```
docker container ls
```

```
CONTAINER ID   IMAGE               COMMAND              CREATED         STATUS         PORTS                    NAMES
4373e2dbcaee   dataservice-image   "java DataService"   3 minutes ago   Up 4 minutes   0.0.0.0:4000->8080/tcp   dataservice-container
```

If you don't use the `--name` option, Docker will generate a name for you, e.g.

```
CONTAINER ID   IMAGE               COMMAND              CREATED         STATUS         PORTS                    NAMES
4373e2dbcaee   dataservice-image   "java DataService"   3 minutes ago   Up 4 minutes   0.0.0.0:4000->8080/tcp   distracted_hopper
```

Now let us call our service that resides inside the container with

```
curl localhost:4000
```

```
<?xml version="1.0" ?><result><name>hello</name><id>1842</id></result>
```

You can stop the container from running with

```
docker container stop dataservice-container
```

The container will now no longer appear when using `docker container ls`, as this command only shows running containers. Use `docker container ls -a` instead.

Other useful commands for Docker containers are

```
# kill a container (stop is a graceful shutdown (SIGTERM), while kill kills the process inside the container (SIGKILL))
docker container kill dataservice-container

# remove a container, even if it is running
docker container rm -f dataservice-container

# remove all containers
docker container rm $(docker container ls -a -q)

# show STDOUT and STDERR from inside your container
docker logs --follow dataservice-container

# execute a command inside your container
docker exec -it dataservice-container [command]

# log into your container
docker exec -it dataservice-container bash

# show a list of other docker container commands
docker container --help
```

Publish your Image on DockerHub

So now that we have successfully created and run our first Docker Image, we can upload this image to a registry (in this case DockerHub) to use it from other machines.

Before we continue, I want to clarify the terms registry and repository, as well as the namespace that is used to identify an image. These terms are used a bit differently than in other contexts (at least in my opinion), which might lead to confusion.

The Docker image itself represents a repository, similar to a repository in git. This repository can exist only locally (as it has until now), or you can host it on a remote host (similar to GitHub or Bitbucket for git repositories). Services that host repositories are called registries. GitHub and Bitbucket are registries that host git repositories. DockerHub, Artifactory and AWS Elastic Container Registry are registries that host Docker repositories (aka Docker images).

An image name is defined by a repository-name and a tag. The tag identifies the version of the image (such as 1.2.3 or latest). So the repository can contain different versions of the image.
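The naming scheme can be illustrated with a small Java sketch that splits a reference such as `vividbreeze/docker-tutorial:version1` into namespace (the DockerHub user name), repository name and tag. It assumes Docker Hub conventions: a missing tag means `latest`, and a missing namespace means `library`, the namespace of the official images. Registry host names (e.g. with ports) and digests are deliberately not handled, and the class `ImageReference` is hypothetical.

```java
public class ImageReference {

    final String namespace;   // DockerHub user name, or "library" for official images
    final String repository;  // e.g. "docker-tutorial"
    final String tag;         // e.g. "version1"; "latest" if omitted

    ImageReference(String namespace, String repository, String tag) {
        this.namespace = namespace;
        this.repository = repository;
        this.tag = tag;
    }

    /** Parses "[namespace/]repository[:tag]"; registry hosts and digests are not supported. */
    static ImageReference parse(String reference) {
        String name = reference;
        String tag = "latest";
        int colon = reference.lastIndexOf(':');
        // A colon only starts a tag if it comes after the last slash.
        if (colon > reference.lastIndexOf('/')) {
            name = reference.substring(0, colon);
            tag = reference.substring(colon + 1);
        }
        int slash = name.indexOf('/');
        String namespace = slash < 0 ? "library" : name.substring(0, slash);
        String repository = slash < 0 ? name : name.substring(slash + 1);
        return new ImageReference(namespace, repository, tag);
    }

    @Override
    public String toString() {
        return namespace + "/" + repository + ":" + tag;
    }

    public static void main(String[] args) {
        System.out.println(parse("vividbreeze/docker-tutorial:version1")); // vividbreeze/docker-tutorial:version1
        System.out.println(parse("dataservice-image"));                    // library/dataservice-image:latest
        System.out.println(parse("openjdk:8"));                            // library/openjdk:8
    }
}
```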

Before we can push our Docker image to a registry (in this case DockerHub) we have to tag our image (this might seem a bit odd).

```
docker tag [image-name|image-id] [DockerHub user-name]/[repository-name]:[tag]
```

In our case:

```
docker tag dataservice-image vividbreeze/docker-tutorial:version1
```

Now let us have a look at our images:

```
docker image ls
```

```
REPOSITORY                    TAG        IMAGE ID       CREATED          SIZE
dataservice-image             latest     61fe8918855a   10 minutes ago   624MB
vividbreeze/docker-tutorial   version1   61fe8918855a   10 minutes ago   624MB
openjdk                       8          8c80ddf988c8   3 weeks ago      624MB
```

You can see that the tagged repository is listed with the same image ID. So both repository names are identifiers that point to the same image. If you want to delete this link, use

```
docker image rm vividbreeze/docker-tutorial:version1
```

Next, we have to log in to our remote registry (DockerHub):

```
docker login
```

Now we can push the image to DockerHub:

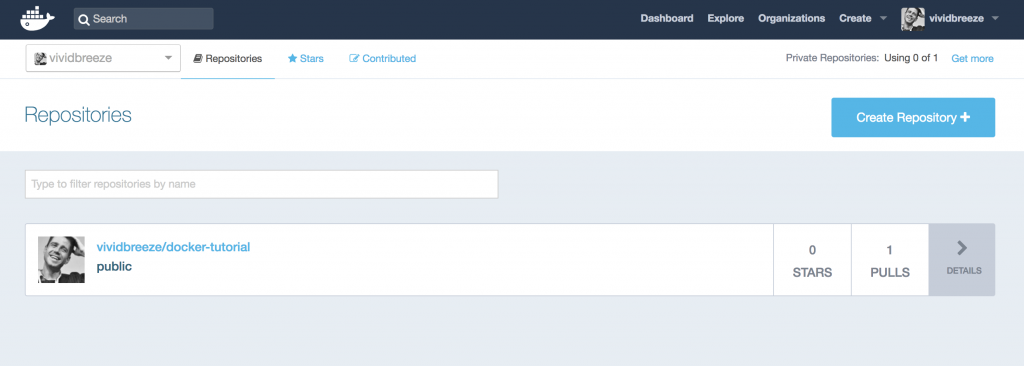

```
docker push vividbreeze/docker-tutorial:version1
```

If you log into DockerHub, you should see the image (repository); sometimes it might take a while before it appears in the list.

DockerHub Dashboard

To run this image, use

```
docker run --name docker-container -d -p4000:8080 vividbreeze/docker-tutorial:version1
```

If the image is not present locally (which it currently is), Docker downloads the image from DockerHub before running it. You can try this by deleting the image and running the command above. You can also use this command on any other machine that runs Docker.

So basically the command above downloads the image with the name vividbreeze/docker-tutorial and the tag version1 from your DockerHub registry.

You might notice that this image is very large (about 626 MB):

```
docker image inspect vividbreeze/docker-tutorial:version1 | grep Size
```

On DockerHub, you will see that even the compressed size is 246 MB. The reason is that the Java 8 base image, and the images this base image is built on, are included in our image. You can decrease the size by using a smaller base image, e.g. `openjdk:8-alpine`, which is based on Alpine Linux. In this case, the image is only about 102 MB.

Troubleshooting

If you run into problems, I recommend having a look at the logs of your Docker container. You can access the messages that are written to STDOUT and STDERR with

```
docker logs dataservice-container
```

Often you can see whether the start of your application has already failed, or the call of a particular service. You can use the `--follow` option to continuously follow the log.

Next, I suggest logging into the Docker container to see if your service is running:

```
docker exec -it dataservice-container bash
```

(You can call any Unix command inside your container with `docker exec -it [container-name] [command]`.) In our example, try `curl 127.0.0.1:8080`. If the service runs correctly inside the container, but you have problems calling it from the outside, there might be a network problem, e.g. the port mapping is wrong or the port is already in use. You can see the port mapping with `docker port dataservice-container`.

However, make sure that the commands you execute in the container are available. If you use a small image, such as one based on Alpine Linux, commands such as `bash` or `curl` won't be available, so you have to use `sh` instead of `bash`.

Another (sub-)command that is useful with (almost) all Docker commands is `inspect`. It gives you all available information about an image, a container, a volume etc., such as mapped volumes, network information, environment variables etc.

```
docker image inspect dataservice-image
docker container inspect dataservice-container
```

Of course, there is more, but this basic knowledge should be sufficient to find the root cause of some problems you might run into.

-

Docker Basics – Part I

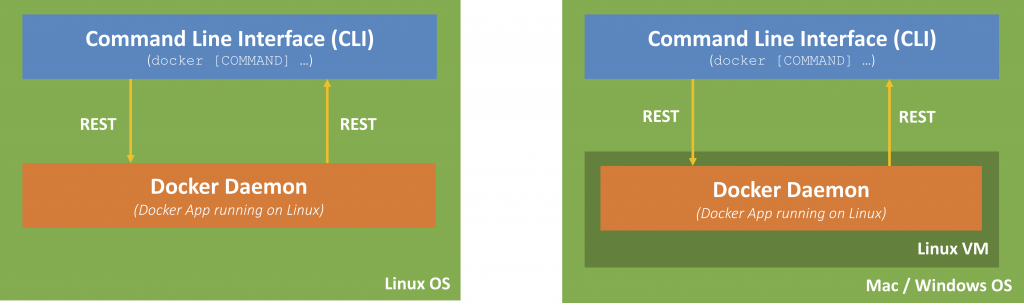

The Docker Eco-System

When we talk about Docker, we mainly talk about the Docker daemon, a process that runs on a host machine (in this tutorial, most probably your computer). This Docker daemon is able to build images, run containers, provide a network infrastructure and much more. The Docker daemon can be accessed via a REST interface. Docker provides a Command Line Interface (CLI), so you can access the Docker daemon directly from the command shell on the Docker host (`docker COMMAND [...]`).

Docker Ecosystem

Docker is based on a Linux ecosystem. On a Mac or Windows, Docker creates a Virtual Machine (on a Mac using HyperKit), and the Docker daemon runs on this Virtual Machine. The CLI on your host then communicates with the daemon on this Virtual Machine via the REST interface. Although you might not notice it, you should keep this in mind (see below).

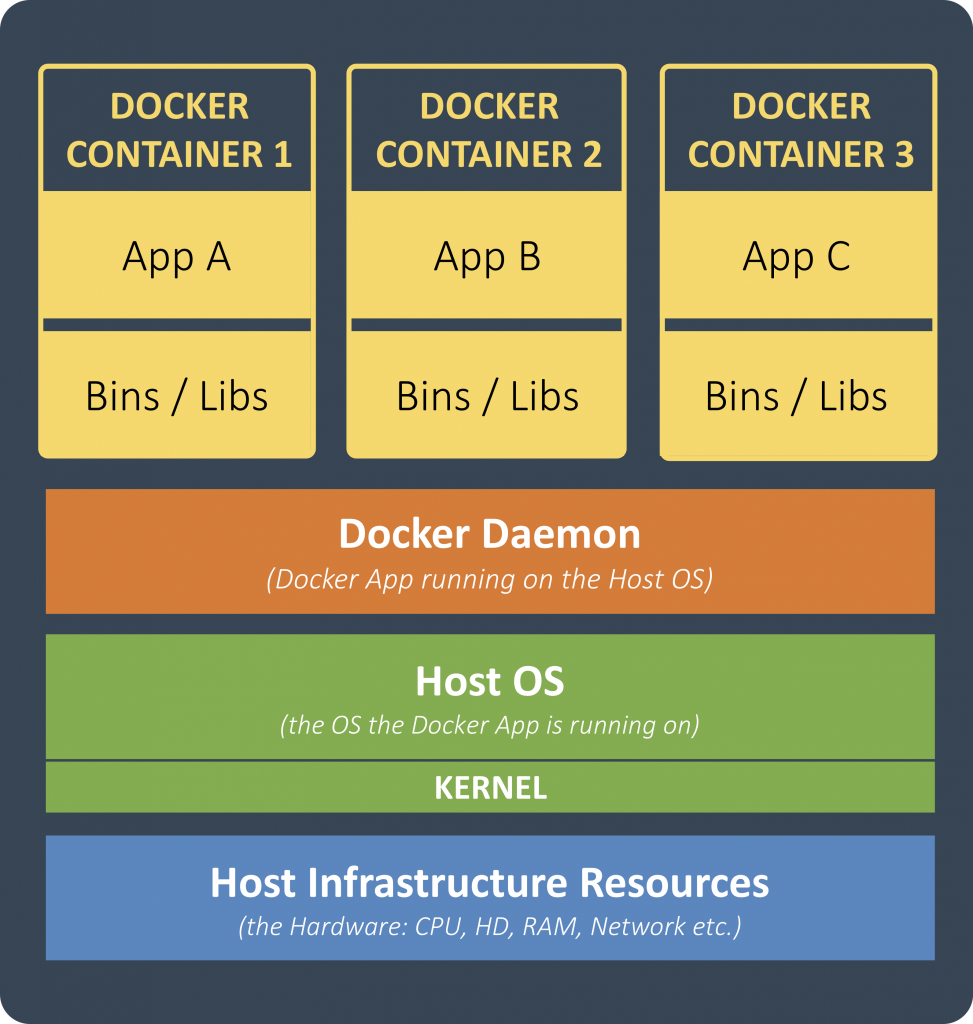

Docker Images

Docker runs an instance of an image in a so-called container. These images contain an application (such as nginx, MySQL, Jenkins, or an OS such as Ubuntu) as well as the ecosystem to run this application. The ecosystem consists of the libraries and binaries that are needed to execute the application on a Linux machine. Most images are based on Linux distributions, as these already come with the necessary libraries and binaries. However, applications do not need a fully fledged Linux distribution such as Ubuntu. Hence, many images are based on a lightweight distribution such as Alpine Linux.

Docker Image

Keep in mind that only the libraries and binaries of the Linux distribution inside the image are needed for the application to run. These libraries communicate with the operating system of the Docker host, mostly the kernel, which in turn communicates with the hardware. So the Linux distribution inside your containers does not have to be booted. This makes the start-up time of a container incredibly fast compared to Virtual Machines, which run an operating system that has to be booted first.

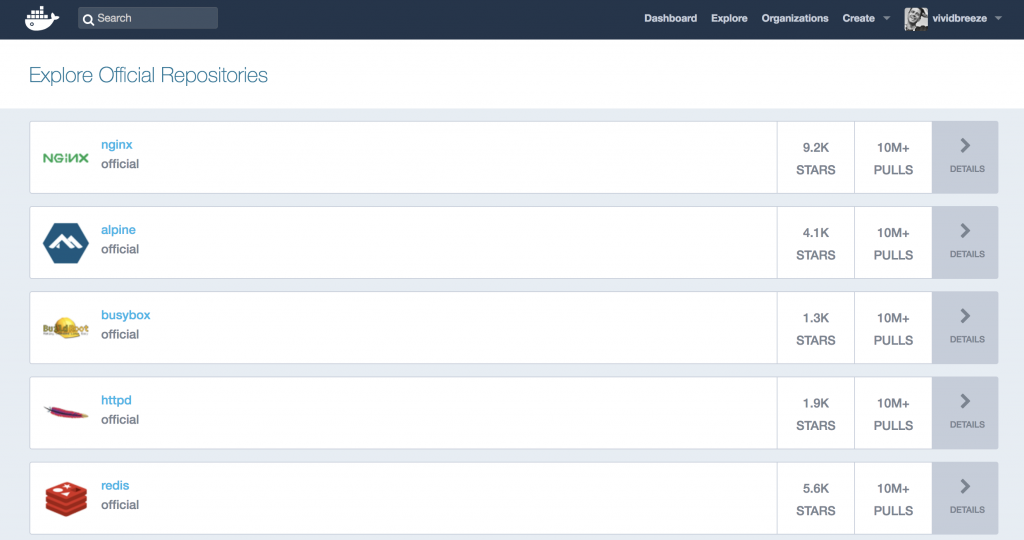

Image Registry

DockerHub is a place where you can find publicly available images. Some of these images are official images maintained by Docker; most of them are customised images provided by the community (other developers or organisations who decided to make their images public). You can now (also) find these images in the new DockerStore.

DockerHub

Docker Containers

When you start a container you basically run an image. If Docker can’t find the image on your host, docker will download it from a registry (which is DockerHub as a default). On your host, you can see this image running as one or more processes.

Docker Container

The beauty of containers is that you can run and configure an application without touching your host system.

Prerequisites

You should have Docker (community edition) installed on the machine you are working with.

Basic Commands

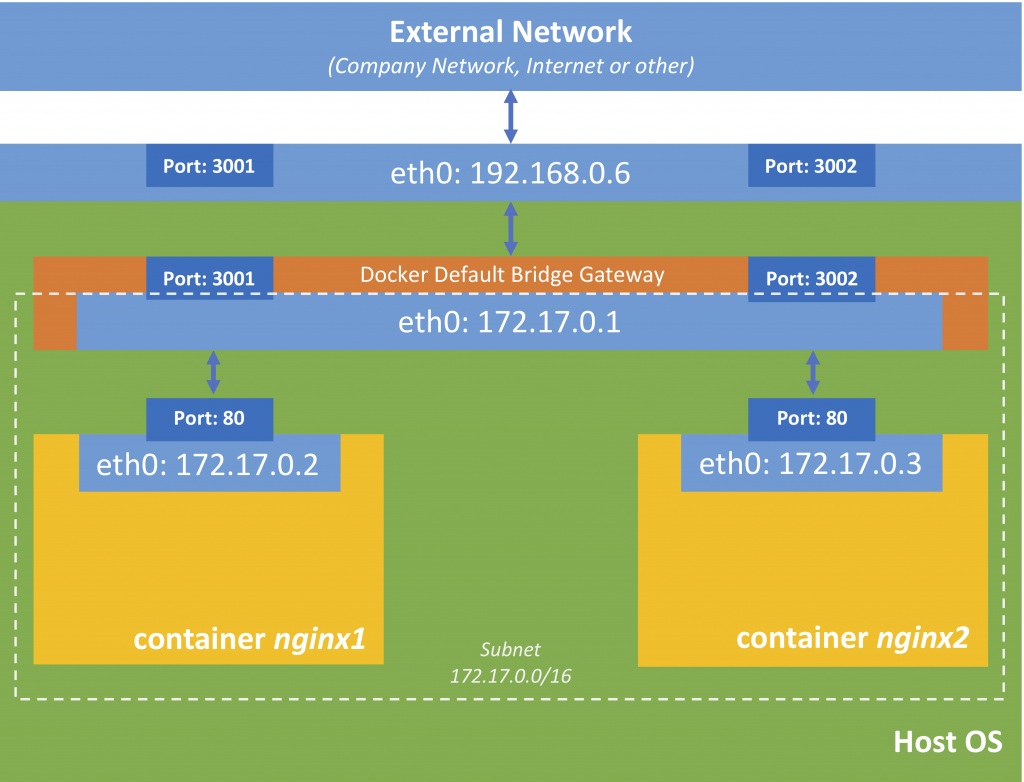

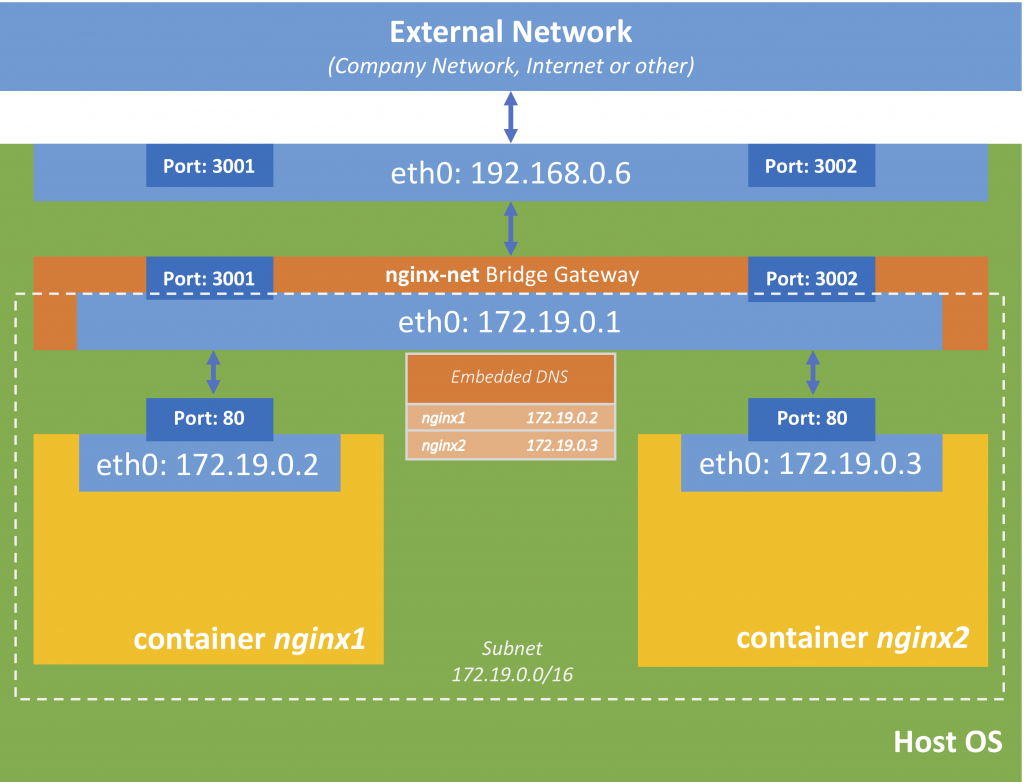

Let us get started. In this introduction, we will run images that are available on DockerHub. In the next part, we will focus on custom images. So let us run a container with an nginx web server:

```
docker run -d --name webserver -p 3001:80 nginx
```

What we are doing here is

- running the Docker image nginx (the latest version, as we didn’t specify a tag)

- mapping the port 80 (default port of nginx) to the port 3001 on the local host

- running the Docker container detached (the container runs in the background)

- naming the container webserver (so we can reference the container by its name for later operations)

If you are running Docker on a Linux OS, you should see two nginx processes in your process list. As mentioned above, if you are running Docker on MacOSX or Windows, Docker runs in a Virtual Machine. You can see the nginx process only when you log into this machine.

```
screen ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/tty
```

or

```
docker run -it --privileged --pid=host debian nsenter -t 1 -m -u -n -i sh
```

Inside this machine,

```
ps -aux | grep nginx
```

should then show the following two processes running (the nginx master process and an nginx worker process):

```
10259 root      0:00 nginx: master process nginx -g daemon off;
10294 101       0:00 nginx: worker process
10679 root      0:00 grep nginx
```

When you call `localhost:3001` in your web browser, you should see the nginx default page.

You can list containers by using the following command (use `-a` to list all containers, running and stopped ones):

```
docker container ls
```

```
CONTAINER ID   IMAGE   COMMAND                  CREATED          STATUS          PORTS                  NAMES
590058dc3101   nginx   "nginx -g 'daemon of…"   22 minutes ago   Up 22 minutes   0.0.0.0:3001->80/tcp   webserver
```

You can now stop the container, run it again or even delete the container with

```
docker container stop webserver
docker container start webserver
docker container rm webserver
```

(If the container is still running, use the `-f` option to force its removal.) You can verify the current state with `docker container ls -a`.

Bind Mounts

So far we can only see the default nginx welcome screen in our web browser. If we want to show our own content, we can use a so-called bind mount. A bind mount maps a file or directory on the host machine to a file or directory in a container.

The nginx image we are using uses `/usr/share/nginx/html` as the default home for its HTML files. We will bind this directory to the directory `~/docker/html` on our local host (you might have to delete the current webserver container first).

```
docker run -d --name webserver -p 3001:80 -v ~/docker/html:/usr/share/nginx/html nginx
```

So if you write a simple HTML file (or any other file) to `~/docker/html`, you can access it in the web browser immediately.

Bind mounts are file-system specific, e.g. MacOSX uses a different file system than Windows, so paths look different, such as `/Users/chris` on MacOSX and `//c/Users/chris` on Windows.

Volumes

Imagine you need a MySQL database for a short test. You can have MySQL up and running in Docker in seconds.

```
docker run -d --name database mysql
```

You might notice that MySQL is, strangely, not running. Let us have a look at why this might be:

```
docker container logs database
```

```
error: database is uninitialized and password option is not specified
  You need to specify one of MYSQL_ROOT_PASSWORD, MYSQL_ALLOW_EMPTY_PASSWORD and MYSQL_RANDOM_ROOT_PASSWORD
```

As we could have seen in the official image description of MySQL, we need to specify an environment variable that defines our initial password policy (mental note: always read the image description before you use an image). Let us try this again (you might need to delete the container first with `docker container rm database`):

```
docker run -d -e MYSQL_ALLOW_EMPTY_PASSWORD=true --name database mysql
```

The database content is not stored inside the container but on the host, in a so-called volume. You can list all volumes by using

```
docker volume ls
```

```
DRIVER   VOLUME NAME
local    2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7
```

You can find out to which location on the Docker host this volume is mapped by using the volume name

docker volume inspect 2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7[ { "CreatedAt": "2018-08-13T10:41:47Z", "Driver": "local", "Labels": null, "Mountpoint": "/var/lib/docker/volumes/2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7/_data", "Name": "2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7", "Options": null, "Scope": "local" } ]Another way would be to inspect the database container with

```shell
docker container inspect database
```

In the JSON output, you will find something like this:

```
"Mounts": [
    {
        "Type": "volume",
        "Name": "2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7",
        "Source": "/var/lib/docker/volumes/2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7/_data",
        "Destination": "/var/lib/mysql",
        "Driver": "local",
        "Mode": "",
        "RW": true,
        "Propagation": ""
    }
],
```

When MySQL writes into or reads from

`/var/lib/mysql`, it actually writes into and reads from the volume above. If you run Docker on a Linux host, you can go to the directory `/var/lib/docker/volumes/2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7/_data`. If you run Docker on MacOSX or Windows, you first have to log into the Linux VM that runs the Docker daemon (see above).

This volume will outlive the container: if you remove the container, the volume will still be there, which is good in case you need to back up its contents.

```shell
docker container rm -f database
docker volume ls
```

```
DRIVER              VOLUME NAME
local               2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7
```
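Because the volume survives the container, you can still back it up after the container is gone. A minimal sketch, assuming the small `alpine` image (Docker pulls it on first use); the helper name `backup_volume` and the archive naming are hypothetical. It starts a throw-away container that mounts the volume read-only next to a host directory and writes a tarball there:

```shell
# Hypothetical helper: archive the contents of a Docker volume into <host-dir>/<volume>.tar.gz.
# Usage: backup_volume <volume-name> <existing-host-dir>
backup_volume() {
  local volume="$1" target
  # Resolve the host directory to an absolute path; with -v, a plain word such as
  # "backups" would otherwise be taken as the name of another volume.
  target="$(cd "$2" && pwd)" || return 1
  # Throw-away container (--rm): the volume is mounted read-only at /data,
  # the host directory at /backup, and tar packs the volume contents.
  docker run --rm \
    -v "$volume:/data:ro" \
    -v "$target:/backup" \
    alpine tar czf "/backup/$volume.tar.gz" -C /data .
}
```

For example, `backup_volume <volume-name> ~/backups` would write `~/backups/<volume-name>.tar.gz`; for the anonymous volume above, the volume name is its long ID.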

However, volumes can also leave lots of garbage on your computer, so make sure you delete volumes you no longer use. In addition, many containers create anonymous volumes, and it is tedious to find out which container a volume belongs to.
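Two small helpers can make this clean-up less tedious. A sketch; the function names are hypothetical, while the `volume` filter of `docker container ls` and the `dangling` filter of `docker volume ls` are standard Docker CLI filters:

```shell
# Hypothetical helper: list the containers (running or stopped) that mount the given volume.
containers_using_volume() {
  docker container ls -a --filter "volume=$1" --format '{{.Names}}'
}

# Hypothetical helper: list the volumes that no container references any more.
dangling_volumes() {
  docker volume ls -q --filter dangling=true
}
```

Once you are sure a volume is no longer needed, `docker volume rm <volume-name>` deletes it, and `docker volume prune` removes unused volumes in one go after asking for confirmation (on recent Docker versions, it only removes anonymous volumes unless you add `--all`).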

Another problem remains: if you run a new MySQL container, it will create a new volume. Of course, this makes sense, but what happens if you just want to update to a new version of MySQL?

As with bind mounts above, you can name volumes:

```shell
docker run -d -e MYSQL_ALLOW_EMPTY_PASSWORD=true -v db1:/var/lib/mysql --name database mysql
```

When you look at your volumes now, you will see the new volume named db1:

```shell
docker volume ls
```

```
DRIVER              VOLUME NAME
local               2362207fdc3f4a128ccc37aa45e729d5f0b939ffd38e8068346fcdddcd89fee7
local               db1
```
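A named volume can also be created up front with `docker volume create db1`, and instead of reading the whole JSON from `docker volume inspect`, you can ask for a single field with a Go template via `--format`. A minimal sketch; the helper name is hypothetical:

```shell
# Hypothetical helper: print only the "Mountpoint" of a volume.
# On MacOSX and Windows, this path lives inside the Linux VM that runs the Docker daemon.
volume_mountpoint() {
  docker volume inspect --format '{{ .Mountpoint }}' "$1"
}
```

With the default `local` driver, `volume_mountpoint db1` should print something like `/var/lib/docker/volumes/db1/_data`.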

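Connect to this database, e.g. using MySQL Workbench or any other database client, create a database and a table, and insert some data into this table. (To reach MySQL from a client on your host, the simplest way is to publish the container's port, e.g. by adding `-p 3306:3306` to the `docker run` command.)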
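If you prefer the command line to a graphical client, the `mysql` client inside the MySQL image can run the statements for you via `docker container exec`. A minimal sketch; the helper name and the database and table names in the example are made up, and it assumes the container was started with `MYSQL_ALLOW_EMPTY_PASSWORD=true` as above:

```shell
# Hypothetical helper: run SQL statements with the mysql client that ships in the
# MySQL image. No password is passed because root's password is empty here.
run_sql() {
  docker container exec database mysql -uroot -e "$1"
}

# Example (database "shop" and table "items" are made up):
# run_sql "CREATE DATABASE shop;
#          CREATE TABLE shop.items (id INT PRIMARY KEY, name VARCHAR(50));
#          INSERT INTO shop.items VALUES (1, 'coffee');"
```

MySQL may need a few seconds to initialise after `docker run`, so a call made right away can fail with a connection error.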

(Another option would be to log into the container itself and start a `mysql` session to create a database: `docker container exec -it database bash`. This command executes `bash` in the container in an interactive terminal.)

Now delete the container database:

```shell
docker container rm -f database
```

You will notice (`docker volume ls`) that our volume is still there. Now create a new MySQL container with the identical volume:

```shell
docker run -d -e MYSQL_ALLOW_EMPTY_PASSWORD=true -v db1:/var/lib/mysql --name database mysql
```

Use your database client to connect to MySQL. The database, the table and its contents should still be there.
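This also answers the earlier question about updating MySQL: because the data lives in `db1`, you can replace the container with one based on a different image tag. A minimal sketch; the helper name is hypothetical, and `8.0` in the usage example is just one of the tags of the official `mysql` image. Check MySQL's upgrade notes before switching versions, since going back to an older version afterwards is generally not supported.

```shell
# Hypothetical helper: recreate the "database" container from another mysql image tag,
# keeping the data in the named volume db1.
upgrade_mysql() {
  local tag="$1"
  docker container rm -f database
  docker run -d -e MYSQL_ALLOW_EMPTY_PASSWORD=true \
    -v db1:/var/lib/mysql --name database "mysql:$tag"
}
```

For example, `upgrade_mysql 8.0` removes the old container and starts `mysql:8.0` on top of the same data.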

What is the difference between a Volume and a Bind Mount?

- a Volume is created and managed by Docker in its own storage area on the Docker host (e.g. `/var/lib/docker/volumes` on Linux), so it works the same no matter on which OS the Docker daemon is running. A volume can be anonymous (just referenced by an ID) or named.

- a Bind Mount, on the other hand, binds a file or directory on the Docker host (which can be Linux, MacOSX or Windows) to a file or directory in the container. Bind Mounts rely on the specific directory structure of the host's file system (which is different in Linux, MacOSX and Windows). Hence, a bind mount cannot be declared in an image you build; you can only specify it when you start a container, e.g. with the `-v` parameter.
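Besides `-v`, `docker run` also accepts the more explicit `--mount` syntax, which spells out the mount type. A sketch reusing the containers from above (the function names are hypothetical; remove existing containers with the same names first). Note that, unlike `-v`, `--mount type=bind` reports an error instead of creating a missing host directory:

```shell
# Hypothetical helper: named volume, storage managed by Docker; only the volume name is given.
run_mysql_with_volume() {
  docker run -d -e MYSQL_ALLOW_EMPTY_PASSWORD=true \
    --mount type=volume,source=db1,target=/var/lib/mysql --name database mysql
}

# Hypothetical helper: bind mount of an absolute host path into the container.
# ~ would not be expanded inside this argument, so $HOME is used instead.
run_nginx_with_bind() {
  docker run -d -p 3001:80 \
    --mount "type=bind,source=$HOME/docker/html,target=/usr/share/nginx/html" \
    --name webserver nginx
}

# Hypothetical helper: print every mount of a container with its type ("volume" or "bind").
list_mounts() {
  docker container inspect --format '{{ range .Mounts }}{{ .Type }} {{ .Source }} -> {{ .Destination }}{{ println }}{{ end }}' "$1"
}
```

`list_mounts database` should then print a line starting with `volume`, and `list_mounts webserver` a line starting with `bind`.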

Further Remarks

This short tutorial just covered some basics for getting around with Docker. I recommend playing a bit with the Docker CLI and trying out the different options. In the next part, we will build and run a customised image.

-

How to use Ansible Roles

In the Ansible introduction, we built a Playbook to install Jenkins from scratch on a remote machine. The Playbook was simple (for educational purposes): it was designed for Debian-based Linux distributions (we used apt as the package manager), and it just provided an out-of-the-box Jenkins without any customisation. Thus, the Playbook is not very reusable or configurable.

However, had we made it more complex, the single-file Playbook would have become difficult to read and maintain, and thus more error-prone.

To overcome these problems, Ansible specifies a directory structure in which variables, handlers, tasks and more are put into separate directories. This grouped content is called an Ansible Role. A Role must include at least one of these directories.

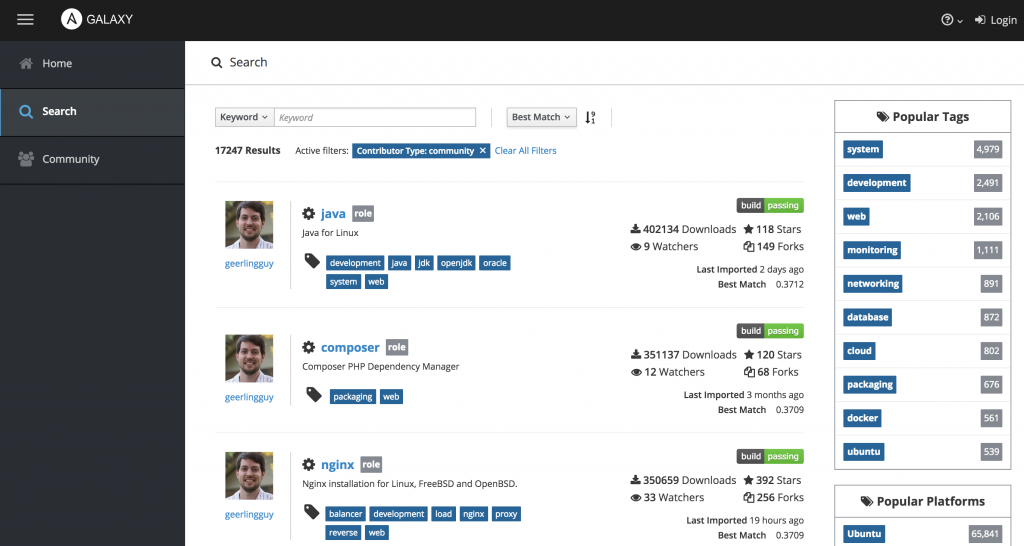
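To make this structure tangible, here is a minimal sketch that creates a Role by hand with plain shell commands. The helper name, the Role name `my_webserver`, the variable `webserver_port` and the file contents are hypothetical; `ansible-galaxy init <role-name>` generates a complete skeleton with all standard directories for you.

```shell
# Hypothetical helper: create a minimal Role in <project-dir>/roles/<role-name>.
# Usage: new_role <project-dir> <role-name>
new_role() {
  local role="$1/roles/$2"
  # A Role only needs the directories it actually uses; here: defaults and tasks.
  mkdir -p "$role/defaults" "$role/tasks"

  # Role defaults are meant to be overridden, e.g. by vars in a Playbook.
  cat > "$role/defaults/main.yml" <<'EOF'
---
webserver_port: 8080
EOF

  # tasks/main.yml is the entry point Ansible runs when the Role is applied.
  cat > "$role/tasks/main.yml" <<'EOF'
---
- name: Show the configured port
  debug:
    msg: "The web server will listen on port {{ webserver_port }}"
EOF
}
```

After `new_role . my_webserver`, a Playbook in the same directory can reference `my_webserver` under `roles:`, just like the Galaxy Roles used below.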

You can find plenty of these Roles, designed by the Ansible community, in the Ansible Galaxy. Let me show some examples to explain the concept of Ansible Roles.

How to use Roles

For provisioning common software, e.g. Java, Apache or nginx, and Jenkins, I highly recommend using popular Ansible Roles rather than writing your own Playbooks, as they tend to be more versatile and less error-prone.

[Figure: Ansible Galaxy]

Let us assume we want to install an Apache HTTP server. The most popular Role, with over 300,000 downloads, was written by geerlingguy, one of the most popular contributors to the Ansible Galaxy. I will jump right into the GitHub repository of this Role.

First, you always have to install the Role from the command line:

```shell
ansible-galaxy install [role-name]
```

e.g.

```shell
ansible-galaxy install geerlingguy.apache
```

Roles are downloaded from the Ansible Galaxy and stored locally at

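`~/.ansible/roles` or `/etc/ansible/roles/` (`ansible --version` shows which configuration file is in use, and `ansible-galaxy list` lists the installed Roles together with the directory they are stored in).

In most cases, the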

`README.md` provides sufficient information on how to use the Role. Basically, you define the hosts on which the play is executed, the variables to configure the Role, and the Role itself. In case of the Apache Role, the Playbook (`apache.yml`) might look like this:

```yaml
- name: Install Apache
  hosts: web1
  become: yes
  become_method: sudo
  vars:
    apache_listen_port: 8082
  roles:
    - role: geerlingguy.apache
```

`web1` is a server defined in the Ansible inventory, which I set up with Vagrant before. Now you can run your Ansible Playbook with

```shell
ansible-playbook apache.yml
```

In a matter of seconds, Apache should become available at

`http://[web1]:8082` (in my case: `http://172.28.128.3:8082/`).

Since Ansible 2.4, you can also include Roles as simple tasks. The example above would then look like this:

```yaml
- name: Install Apache
  hosts: web1
  become: yes
  become_method: sudo
  tasks:
    - include_role:
        name: geerlingguy.apache
      vars:
        apache_listen_port: 8082
```

A Short Look inside a Role

I won't go into detail on how to design Roles, as the Ansible documentation already covers this well. Roles must include at least one of the directories mentioned in the documentation. In practice, they look less complex; e.g. the Apache Role we used in this example looks like this:

[Figure: Directory Structure of Ansible Role to install Apache]

The default variables, including their documentation, are defined in `defaults/main.yml`; the tasks to install Apache can be found in `tasks/main.yml`, which in turn includes the OS-specific task files for RedHat, Suse, Solaris and Debian.
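Since the documented defaults are the quickest way to learn how a Role can be configured, a small helper can print them. A sketch, assuming the Role was installed into one of the default locations mentioned above (further directories can be passed as extra arguments); the helper name is hypothetical:

```shell
# Hypothetical helper: print defaults/main.yml of an installed Role.
# Usage: role_defaults <role-name> [extra-roles-dir ...]
role_defaults() {
  local role="$1" dir
  shift
  # Extra directories are searched first, then the two default locations.
  for dir in "$@" "$HOME/.ansible/roles" /etc/ansible/roles; do
    if [ -f "$dir/$role/defaults/main.yml" ]; then
      cat "$dir/$role/defaults/main.yml"
      return 0
    fi
  done
  echo "Role $role not found" >&2
  return 1
}
```

`role_defaults geerlingguy.apache` prints the Role's defaults; any of them can be overridden in the Playbook's `vars` (as with `apache_listen_port` above) or for a single run, e.g. `ansible-playbook apache.yml -e apache_listen_port=8083`.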

Further Remarks

Ansible Roles are good examples of how to write Ansible Playbooks in general. So if you are new to Ansible and want to understand some of the concepts in more detail, I recommend having a look at the Roles in the Ansible Galaxy.

As mentioned above, I highly recommend using pre-defined roles for installing common software packages. In most cases, it will give you fewer headaches, especially if these Roles are sufficiently tested, which can be assumed for the most popular ones.
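If a project depends on several Galaxy Roles, you can list them in a requirements file and install them in one go with `ansible-galaxy install -r`; `-p` installs them into the project's own `roles/` directory, where Playbooks next to it will find them. A minimal sketch; the helper name is hypothetical, and each entry can additionally get a `version` key to pin a tested release:

```shell
# Hypothetical helper: declare a project's Galaxy Roles in requirements.yml
# and install them into <project-dir>/roles.
# Usage: install_roles <project-dir>
install_roles() {
  local project="$1"
  cat > "$project/requirements.yml" <<'EOF'
---
# One entry per Role; add a "version" key to pin a tested release.
- src: geerlingguy.apache
EOF
  # -r reads the Role list from the file, -p sets the installation directory.
  ansible-galaxy install -r "$project/requirements.yml" -p "$project/roles"
}
```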