As we have seen in the tutorial about Docker Swarm, the IP addresses of our services can change. This happens, for example, every time we deploy new services to a swarm, or when the swarm manager restarts or recreates containers. So services should address other services by name rather than by IP address.
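In application code, this means resolving the service name each time a connection is opened, instead of storing an IP address. Below is a minimal Python sketch. It assumes the client runs inside a container attached to the same overlay network as the target service, and the helper names are hypothetical:

```python
import socket


def resolve_service(name: str, port: int) -> list[str]:
    """Return the IPv4 addresses that `name` currently resolves to.

    Inside a swarm container, Docker's embedded DNS server answers for
    service names, so calling this again after a redeploy picks up changes.
    """
    infos = socket.getaddrinfo(name, port, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def connect_by_name(name: str, port: int, timeout: float = 2.0) -> socket.socket:
    """Open a TCP connection, resolving the service name at connect time."""
    return socket.create_connection((name, port), timeout=timeout)
```

For the stack in this tutorial, `resolve_service("redis", 6379)` run from a dataservice container would return whatever address Docker currently associates with the redis service.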

Default Overlay Network

As we have seen in the Docker Swarm Tutorial, an application can span several nodes (physical or virtual machines) that run services communicating with each other. To let these services communicate across nodes, Docker provides so-called overlay networks.

Please set up the example from the Docker Swarm Tutorial before we deploy our service:

```
docker stack deploy -c docker-compose.yml dataapp
```

```
Creating network dataapp_default
Creating service dataapp_web
```

Now let us list all networks. The SCOPE column shows which of them are swarm-wide:

```
docker network ls
```

```
NETWORK ID     NAME              DRIVER    SCOPE
515f5972c61a   bridge            bridge    local
uvbm629gioga   dataapp_default   overlay   swarm
a40cd6f03e65   docker_gwbridge   bridge    local
c789c6be4aca   host              host      local
p5a03bvnf92t   ingress           overlay   swarm
efe5622e25bf   none              null      local
```

When a Docker host initializes or joins a swarm, two networks are created on that host:

- ingress, the default overlay network of the swarm. It routes requests for a published service port to a task of that service.

- docker_gwbridge, a bridge network that connects the overlay networks (including ingress) to the Docker host’s own physical network. This is what lets containers in the swarm reach the outside world.
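The SCOPE column is what separates the swarm-wide overlay networks from the node-local ones. As a small illustration, here is a Python sketch that picks out the swarm-scoped networks from the listing above. The helper is hypothetical, and it assumes the default table output of docker network ls:

```python
def swarm_networks(ls_output: str) -> list[str]:
    """Return the names of swarm-scoped networks from `docker network ls` output.

    Assumes the default table format: one header line, then one row per
    network with the columns NETWORK ID, NAME, DRIVER and SCOPE.
    """
    names = []
    for line in ls_output.strip().splitlines()[1:]:  # skip the header row
        _network_id, name, _driver, scope = line.split()
        if scope == "swarm":
            names.append(name)
    return names


SAMPLE = """\
NETWORK ID     NAME              DRIVER    SCOPE
515f5972c61a   bridge            bridge    local
uvbm629gioga   dataapp_default   overlay   swarm
a40cd6f03e65   docker_gwbridge   bridge    local
c789c6be4aca   host              host      local
p5a03bvnf92t   ingress           overlay   swarm
efe5622e25bf   none              null      local
"""

print(swarm_networks(SAMPLE))  # ['dataapp_default', 'ingress']
```

Docker can also do this filtering itself with `docker network ls -f "scope=swarm"`, which we use later in this tutorial.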

You can specify a user-defined overlay network in the docker-compose.yml. We haven’t done this so far, so Docker created one for us. Its name is the stack name followed by the suffix _default, in this case dataapp_default.
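The stack name passed to docker stack deploy becomes a prefix for every network and service declared in the compose file. Task containers additionally get the replica slot and a task ID appended. Below is a minimal Python sketch of these naming conventions, using hypothetical helper names. It is based on names that appear in this tutorial, such as dataapp_default and the container names in the listing below:

```python
def stack_resource_name(stack: str, name: str) -> str:
    """Name that a network or service from the compose file gets in the stack."""
    return f"{stack}_{name}"


def parse_task_container_name(container_name: str) -> tuple[str, int, str]:
    """Split a replicated task's container name into (service, slot, task ID).

    Assumes the <service>.<slot>.<task-id> pattern seen in `docker container ls`.
    """
    service, slot, task_id = container_name.rsplit(".", 2)
    return service, int(slot), task_id


print(stack_resource_name("dataapp", "default"))  # dataapp_default
print(parse_task_container_name("dataapp_dataservice.1.osci7tq58ag6o444ymldv6usm"))
# ('dataapp_dataservice', 1, 'osci7tq58ag6o444ymldv6usm')
```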

Let us verify which containers belong to our network dataapp_default.

```
docker-machine ssh vm-manager docker container ls
```

```
CONTAINER ID   IMAGE                                  COMMAND              CREATED          STATUS          PORTS   NAMES
99e248ad51bc   vividbreeze/docker-tutorial:version1   "java DataService"   19 minutes ago   Up 19 minutes           dataapp_dataservice.1.osci7tq58ag6o444ymldv6usm
f12dea1973da   vividbreeze/docker-tutorial:version1   "java DataService"   19 minutes ago   Up 19 minutes           dataapp_dataservice.3.2m6674gdu8mndivpio008ylf5
```

We see that two containers of our service are running on the vm-manager. Let us now have a look at the dataapp_default network on the vm-manager:

```
docker-machine ssh vm-manager docker network inspect dataapp_default
```

In the Containers section of the JSON output, you will see three entries that belong to the dataapp_default network: the two containers above, plus a default endpoint. You can check vm-worker1 the same way.
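Rather than reading through the full JSON, you can extract just the Containers section. The minimal Python sketch below uses a hypothetical helper. It takes the output of the inspect command above as a string and maps each entry's name to its IPv4 address:

```python
import json


def containers_on_network(inspect_json: str) -> dict[str, str]:
    """Map endpoint names to IPv4 addresses from `docker network inspect` output.

    The command prints a JSON array with one object per inspected network.
    Each object's "Containers" section maps container IDs to details such as
    "Name" and "IPv4Address" (in CIDR notation).
    """
    endpoints = {}
    for network in json.loads(inspect_json):
        # Guard against a missing or null "Containers" section.
        for details in (network.get("Containers") or {}).values():
            endpoints[details["Name"]] = details["IPv4Address"]
    return endpoints
```

For example, save the output locally with `docker-machine ssh vm-manager docker network inspect dataapp_default > network.json` and pass the file's contents to `containers_on_network`.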

User-Defined Overlay Network

So far our application consists of only one service. Let us add another service to our application (a database). In addition, we define our own network.

```yaml
version: "3"
services:
  dataservice:
    image: vividbreeze/docker-tutorial:version1
    deploy:
      replicas: 5
      restart_policy:
        condition: on-failure
    ports:
      - "4000:8080"
    networks:
      - data-network
  redis:
    image: redis
    ports:
      - "6379:6379"
    deploy:
      placement:
        constraints: [node.role == manager]
    networks:
      - data-network
networks:
  data-network:
```

We added a database service (Redis, an in-memory data store). In addition, we defined a network (data-network) and attached both services to it. You will also see a placement constraint for the Redis service. It restricts Redis to manager nodes, which in our swarm means the vm-manager. Now let us deploy our altered stack:

```
docker stack deploy -c docker-compose.yml dataapp
```

```
Creating network dataapp_data-network
Creating service dataapp_dataservice
Creating service dataapp_redis
```

As expected, the new network (dataapp_data-network) and the new service (dataapp_redis) were created, and our existing service was updated.

```
docker network ls -f "scope=swarm"
```

```
NETWORK ID     NAME                   DRIVER    SCOPE
dobw7ifw63fo   dataapp_data-network   overlay   swarm
uvbm629gioga   dataapp_default        overlay   swarm
p5a03bvnf92t   ingress                overlay   swarm
```

The new network now appears in the list of available networks. Notice that the dataapp_default network still exists. We don’t need it anymore, so we can delete it with

```
docker network rm dataapp_default
```
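Before testing this, it helps to be explicit about which services should be able to reach each other by name: those attached to at least one common network. The minimal Python sketch below expresses that rule with a hypothetical helper. It works on a plain-dict copy of the services section of the compose file above, so no YAML library is needed:

```python
def peers_by_network(services: dict[str, dict]) -> dict[str, set[str]]:
    """For each service, return the other services it shares a network with.

    Services without a "networks" key are placed on the stack's "default"
    network, as Compose does. set() works whether "networks" is a list or a
    mapping of network names.
    """
    networks = {
        name: set(spec.get("networks", ["default"]))
        for name, spec in services.items()
    }
    return {
        name: {other for other, nets in networks.items() if other != name and nets & mine}
        for name, mine in networks.items()
    }


services = {
    "dataservice": {"image": "vividbreeze/docker-tutorial:version1", "networks": ["data-network"]},
    "redis": {"image": "redis", "networks": ["data-network"]},
}
print(peers_by_network(services))  # {'dataservice': {'redis'}, 'redis': {'dataservice'}}
```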

Let us now log into one of our containers and check whether we can ping the other services by name. First, get a list of the containers on vm-worker1 (the container IDs are sufficient here, hence -q):

```
docker-machine ssh vm-worker1 docker container ls -q
```

```
cf7fd10d88be
9ea79f754419
6cd939350f74
```

Now, from one of these containers, let us ping the dataservice service and the redis service, which runs on the vm-manager.

```
# -c 2 stops ping after two echo requests
docker-machine ssh vm-worker1 docker container exec cf7fd10d88be ping -c 2 dataservice
docker-machine ssh vm-worker1 docker container exec cf7fd10d88be ping -c 2 redis
```

In both cases, the services should be reachable:

```
PING web (10.0.2.6) 56(84) bytes of data.
64 bytes from 10.0.2.6: icmp_seq=1 ttl=64 time=0.076 ms
64 bytes from 10.0.2.6: icmp_seq=2 ttl=64 time=0.064 ms

PING redis (10.0.2.4) 56(84) bytes of data.
64 bytes from 10.0.2.4: icmp_seq=1 ttl=64 time=0.082 ms
64 bytes from 10.0.2.4: icmp_seq=2 ttl=64 time=0.070 ms
```
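ping needs the ping binary inside the container and only tests ICMP reachability. A TCP check like the following sketch also confirms that something is listening on the service port. It assumes Python 3 is available in the container, which the tutorial image may not include, and the helper name is hypothetical:

```python
import socket


def check_service(name: str, port: int, timeout: float = 2.0) -> tuple[str, bool]:
    """Resolve a service name and try a TCP connection to it.

    Returns the address the name resolved to and whether the connection
    succeeded. Unlike ping, this needs no raw-socket privileges.
    """
    address = socket.gethostbyname(name)
    try:
        with socket.create_connection((address, port), timeout=timeout):
            return address, True
    except OSError:
        return address, False
```

From a container on data-network, `check_service("redis", 6379)` and `check_service("dataservice", 8080)` would test both services. Note the container port 8080 rather than the published port 4000. Inside the overlay network, services are addressed by their container ports.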

Routing Mesh

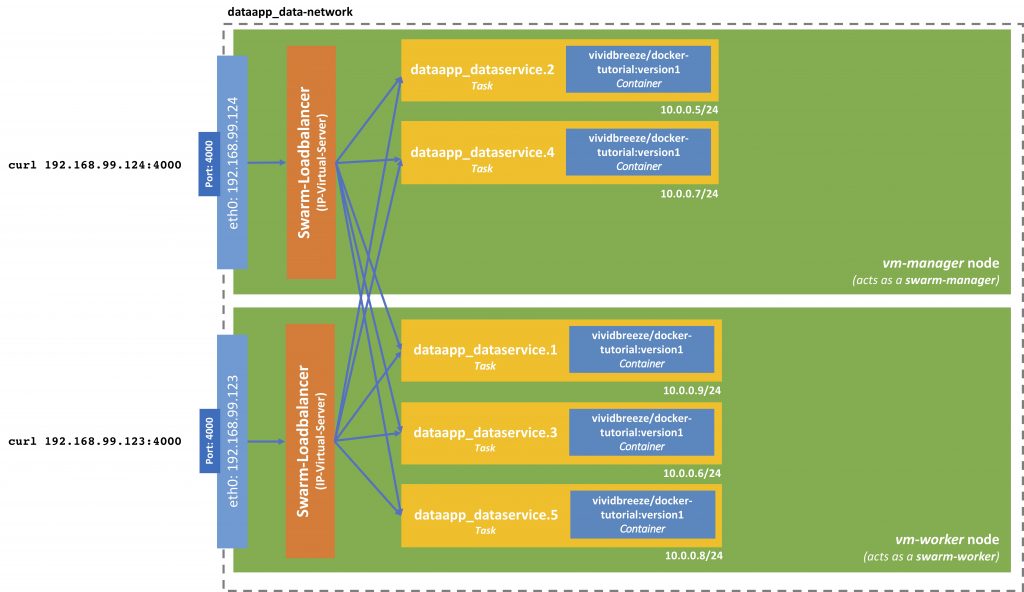

The nodes in our swarm have the IP addresses 192.168.99.124 (vm-manager) and 192.168.99.123 (vm-worker1). You can look them up with

```
docker-machine ip vm-manager
docker-machine ip vm-worker1
```

We can reach our data service through port 4000 on both(!) machines:

```
curl 192.168.99.124:4000
curl 192.168.99.123:4000
```
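The same check can be scripted. The minimal Python sketch below uses only the standard library, and the helper name is hypothetical. It requests the published port on every node and collects the responses:

```python
from urllib.request import urlopen


def fetch_from_all_nodes(node_ips: list[str], port: int, timeout: float = 5.0) -> dict[str, str]:
    """Send an HTTP GET to the published port on every node and collect the bodies.

    With the routing mesh, each node should answer on the published port,
    whether or not a task of the service runs on that node.
    """
    responses = {}
    for ip in node_ips:
        with urlopen(f"http://{ip}:{port}/", timeout=timeout) as response:
            responses[ip] = response.read().decode("utf-8", errors="replace")
    return responses
```

For our swarm, `fetch_from_all_nodes(["192.168.99.124", "192.168.99.123"], 4000)` should return a response for each node.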

It doesn’t matter whether the machine is a manager node or a worker node. Why is that?

Earlier, I mentioned the ingress network. It receives requests for a published port on any node and routes each one to a task of the corresponding service. The load balancer behind this runs on every node and uses IPVS (IP Virtual Server) from the Linux kernel. This load balancer is stateless and routes packets at the transport layer (layer 4).

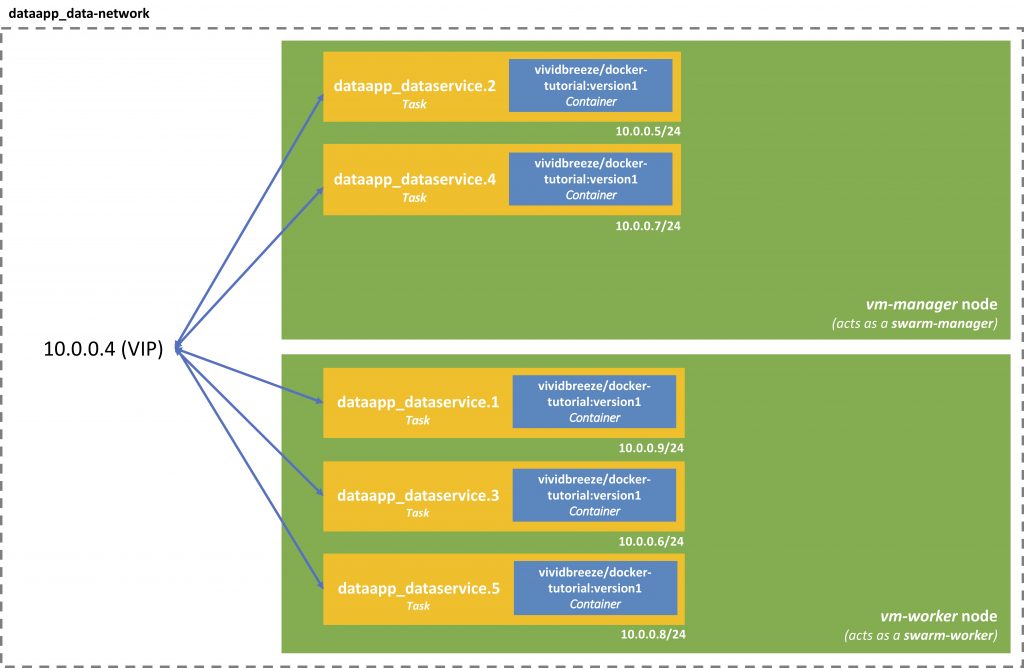

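Internally, services are reached through a virtual IP (VIP). A service name resolves to the service’s VIP, and the load balancer forwards the traffic to one of the service’s tasks. The beauty here is that if one container/task crashes and the swarm replaces it, the other containers won’t notice, because they only ever talk to the VIP.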
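A toy Python model can make this concrete. It is not Docker’s implementation: the class name is hypothetical, the addresses are made up, and round-robin selection is a simplification. Clients only ever see the stable virtual IP, while the task addresses behind it can change:

```python
from itertools import count


class VirtualIP:
    """Toy model of a service VIP: one stable address, changing tasks behind it."""

    def __init__(self, vip: str, tasks: list[str]):
        self.vip = vip
        self.tasks = list(tasks)
        self._counter = count()

    def forward(self) -> str:
        """Pick the task that receives the next connection (round-robin)."""
        if not self.tasks:
            raise RuntimeError(f"no running tasks behind {self.vip}")
        return self.tasks[next(self._counter) % len(self.tasks)]

    def replace_task(self, old: str, new: str) -> None:
        """Simulate the swarm manager replacing a crashed task."""
        self.tasks[self.tasks.index(old)] = new


service = VirtualIP("10.0.9.2", ["10.0.9.3", "10.0.9.4"])
print([service.forward() for _ in range(3)])  # ['10.0.9.3', '10.0.9.4', '10.0.9.3']
service.replace_task("10.0.9.4", "10.0.9.7")  # the task crashed and was rescheduled
print([service.forward() for _ in range(2)])  # ['10.0.9.7', '10.0.9.3']
print(service.vip)  # 10.0.9.2, unchanged for the clients
```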

You can find this virtual IP by using

```
docker service inspect dataapp_dataservice
```
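The virtual IPs are listed under Endpoint.VirtualIPs in the JSON output, with one entry per network the service is attached to. Alternatively, `docker service inspect --format '{{json .Endpoint.VirtualIPs}}' dataapp_dataservice` prints just that section. The minimal Python sketch below uses a hypothetical helper to extract the addresses:

```python
import json


def service_virtual_ips(inspect_json: str) -> dict[str, list[str]]:
    """Map each service name to its virtual IPs from `docker service inspect`.

    The command prints a JSON array of services. Each service's
    Endpoint.VirtualIPs holds {"NetworkID": ..., "Addr": ...} entries,
    where Addr is in CIDR notation; the prefix length is dropped here.
    """
    result = {}
    for service in json.loads(inspect_json):
        name = service["Spec"]["Name"]
        vips = service.get("Endpoint", {}).get("VirtualIPs") or []
        result[name] = [vip["Addr"].split("/")[0] for vip in vips]
    return result
```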

Further Remarks

Of course, every container can also connect to the outside world. It inherits its DNS settings from the Docker host (/etc/resolv.conf).

The name service provided by Docker only works within the Docker space, i.e. between containers, for example within a Docker swarm. From outside a container, you can’t reference the services by their names. Often this isn’t necessary, and for security reasons it may even be undesirable (as for the Redis datastore in our example). If you do need service discovery beyond Docker, have a closer look at ZooKeeper, Consul, or similar tools.

When you use docker-compose instead of docker stack deploy, a bridge network is created. This is because docker-compose deploys to a single Docker host and does not work with a swarm.

If you run applications with many services, you might consider Docker management tools such as Portainer, Docker ToolBox, or Dockstation.