Default Bridge Network

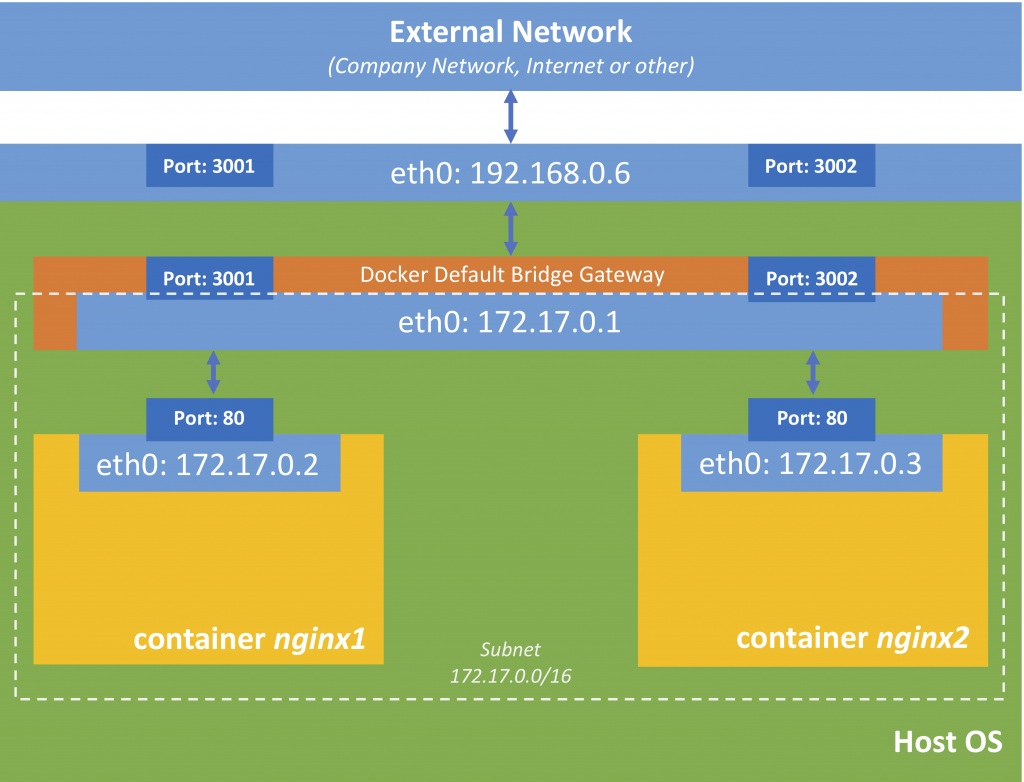

Containers that run on the same machine (and thus share the same Docker daemon) and are not part of a swarm use the so-called bridge network (a virtual network) to communicate with each other.

Let us create two basic containers

docker container run -d --name nginx1 -p3001:80 nginx:alpine

docker container run -d --name nginx2 -p3002:80 nginx:alpine

You can inspect the bridge-network with

docker network inspect bridge

and you will see in the Containers section of the JSON output that the newly created containers belong to this network.

"Containers": {

"9b4e3e3ca279518708ed88a629ba5d40bd1e94d5ed316b74b026b12c1034b497": {

"Name": "nginx2",

"EndpointID": "5a65daa2976ff986e2a9b4aeb9df8e990b0bf0a45ac60dd05475af20f15cc5c9",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"c57ee29c8babf8ecf20e5c2a9a66b30c49cc6bb94b22cd4daad79b87f7a33358": {

"Name": "nginx1",

"EndpointID": "75dc77f5a8677485aaee91c33734e720ebbb61b94917eacba8f50bd4dc7a88e3",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

You also see the IP addresses of the containers nginx1 (172.17.0.2) and nginx2 (172.17.0.3). We can also see the IP address and subnet of the bridge:

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},
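If you only need the container addresses and the subnet, you do not have to read the whole JSON document. The following is a minimal sketch, assuming the --format option of docker network inspect with Go templates; the function names list_network_containers and network_subnet are made up for this example.

```shell
# Print "<name> <IPv4 address>" for every container attached to a network.
# $1 = network name, e.g. bridge
list_network_containers() {
  docker network inspect \
    --format '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}' "$1"
}

# Print "<subnet> <gateway>" for every IPAM configuration of a network.
# $1 = network name, e.g. bridge
network_subnet() {
  docker network inspect \
    --format '{{range .IPAM.Config}}{{println .Subnet .Gateway}}{{end}}' "$1"
}

# Usage (requires a running Docker daemon):
#   list_network_containers bridge
#   network_subnet bridge
```

For the two containers above, the first call should print one line per container with its name and address, and the second the subnet and gateway of the bridge.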

Now let us see if the nginx-containers can see each other, as well as the bridge.

docker container exec -it nginx1 ping 172.17.0.3

docker container exec -it nginx1 ping 172.17.0.1

docker container exec -it nginx2 ping 172.17.0.2

docker container exec -it nginx2 ping 172.17.0.1

In each case, you should be able to reach the destination

PING 172.17.0.1 (172.17.0.1): 56 data bytes

64 bytes from 172.17.0.1: seq=0 ttl=64 time=0.092 ms

64 bytes from 172.17.0.1: seq=1 ttl=64 time=0.167 ms

64 bytes from 172.17.0.1: seq=2 ttl=64 time=0.126 ms

However, name resolution does not work on the default bridge network, e.g.

docker container exec -it nginx1 ping nginx2

should fail with

ping: bad address 'nginx2'
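The checks above can be collected in a small helper that reports which targets a container can reach. This is only a sketch: the function name check_reachable is made up, and it assumes the ping inside the container accepts -c to limit the number of packets (the BusyBox ping in Alpine-based images does), so the command returns on its own.

```shell
# Ping each target from inside a container and report the result.
# $1 = source container, remaining arguments = IP addresses or names
check_reachable() {
  cr_src=$1
  shift
  for cr_target in "$@"; do
    # -c 3 sends three packets and exits; no -it is needed here
    if docker container exec "$cr_src" ping -c 3 "$cr_target" >/dev/null 2>&1; then
      echo "$cr_src -> $cr_target: reachable"
    else
      echo "$cr_src -> $cr_target: unreachable"
    fi
  done
}

# Usage (requires the containers from above to be running):
#   check_reachable nginx1 172.17.0.3 172.17.0.1 nginx2
```

On the default bridge network, the two IP addresses should be reported as reachable and the name nginx2 as unreachable.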

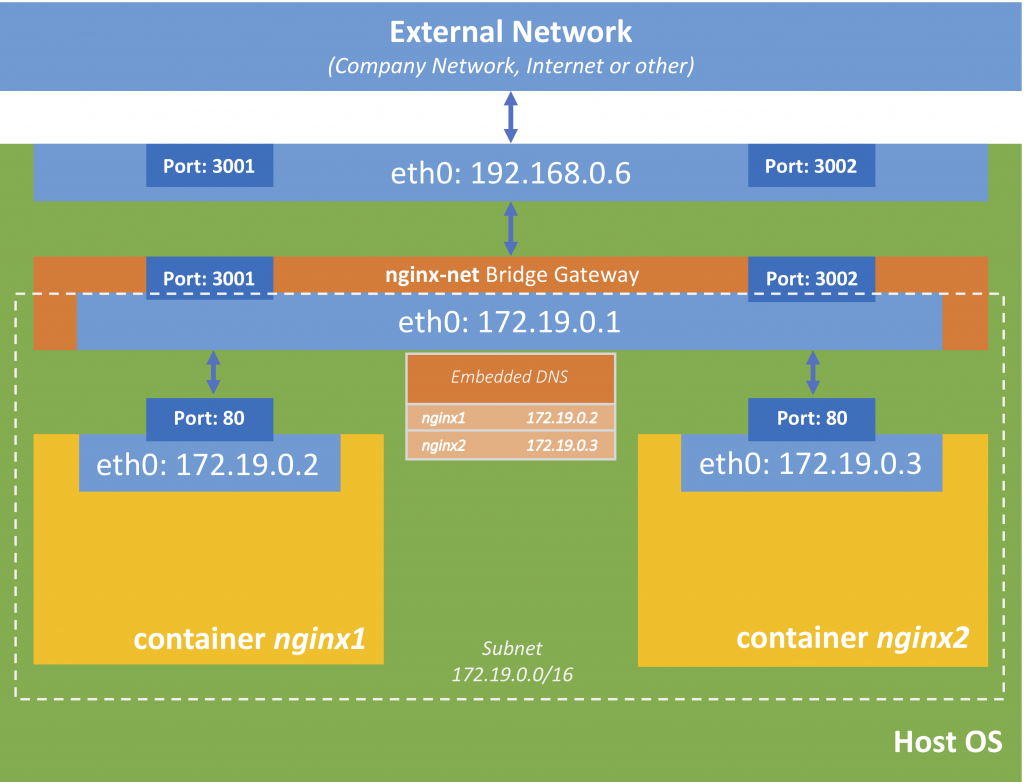

Custom Bridge Networks (embedded DNS)

So to make this work, we have to create our own network

docker network create --driver bridge nginx-net

You can see the new network (and other networks) by using

docker network ls

NETWORK ID     NAME        DRIVER    SCOPE

1f3e6ded4f9a   bridge      bridge    local

20fe2ddb80e6   host        host      local

6d96ed99b06b   nginx-net   bridge    local

5866618435c1   none        null      local

Now let us connect our two containers to this network

docker network connect nginx-net nginx1

docker network connect nginx-net nginx2

If you now run docker network inspect nginx-net, you will see that both containers are connected to this network. Note that the containers have different IP addresses in this network (the subnet is 172.19.0.0/16, compared to 172.17.0.0/16 in the default bridge network).

"Containers": {

"a1ce9d1f383cf10b3a0cd27d6ef482719ae4e3ae48cb5b8402b2d3d8b897db03": {

"Name": "nginx2",

"EndpointID": "49fc6d33e57e909f0f0ea98e00c8d8f2f2db996d001a3359b1a6dcc968254f91",

"MacAddress": "02:42:ac:13:00:03",

"IPv4Address": "172.19.0.3/16",

"IPv6Address": ""

},

"c57ee29c8babf8ecf20e5c2a9a66b30c49cc6bb94b22cd4daad79b87f7a33358": {

"Name": "nginx1",

"EndpointID": "fb7e9c28e6a5564e9bba182ada2ce8048d9ab5b8ec3620f7e03a2491b18219b2",

"MacAddress": "02:42:ac:13:00:02",

"IPv4Address": "172.19.0.2/16",

"IPv6Address": ""

}

},

Now let us ping the other container by name again

docker container exec -it nginx1 ping nginx2

PING nginx2 (172.19.0.3): 56 data bytes

64 bytes from 172.19.0.3: seq=0 ttl=64 time=0.139 ms

64 bytes from 172.19.0.3: seq=1 ttl=64 time=0.152 ms

64 bytes from 172.19.0.3: seq=2 ttl=64 time=0.100 ms

The same should work for the other connections. So basically, on a custom bridge network the name of the container serves as its DNS name.
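Instead of connecting an existing container afterwards, a container can also be attached to the custom network when it is created. The following sketch assumes the --network option of docker container run; the function name run_on_network and the container name nginx3 are made up for this example.

```shell
# Start a detached container that is attached to a user-defined network
# from the beginning.
# $1 = container name, $2 = network name, $3 = image
run_on_network() {
  docker container run -d --name "$1" --network "$2" "$3"
}

# Usage (requires a running Docker daemon and the nginx-net network):
#   run_on_network nginx3 nginx-net nginx:alpine
#   docker container exec nginx1 ping -c 3 nginx3
```

A container started this way is attached to nginx-net only and not to the default bridge network.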

So why is DNS inside Docker networks so important? As we have seen in the tutorials about Docker Swarm, you cannot rely on the IP addresses of containers, as they may change, especially when a swarm manager has control over the containers. Hence it is much more reliable and easier to reference containers by name than by IP address.

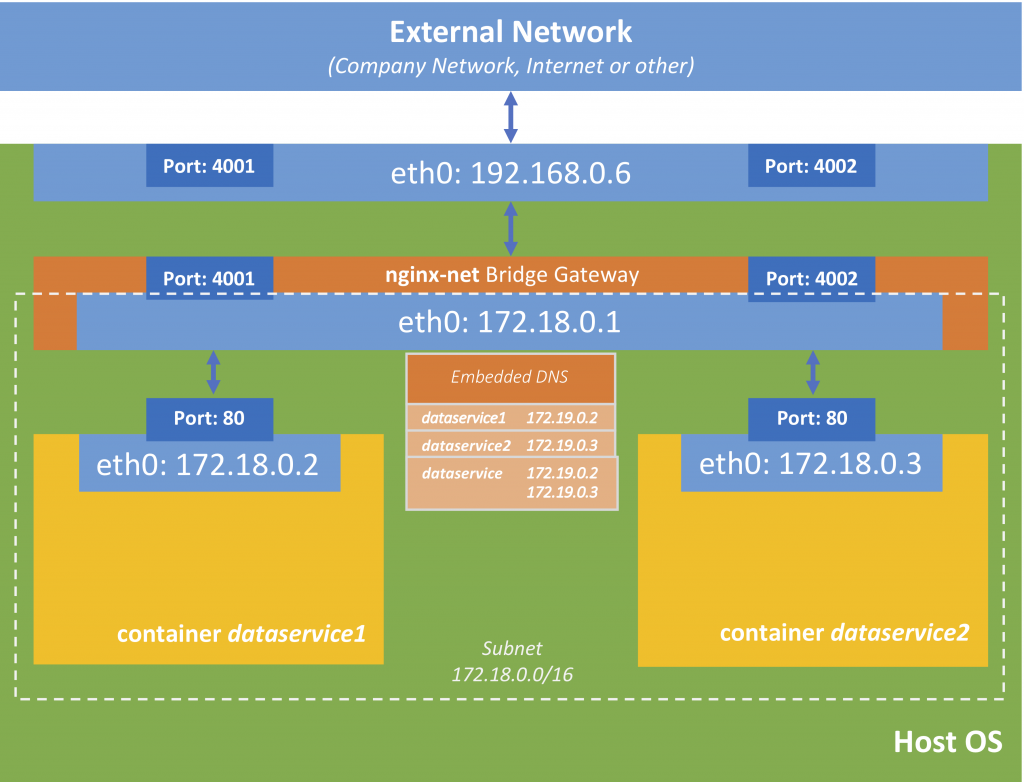

Round-Robin DNS

Let us take our dataService from the Swarm tutorial and create two Docker containers on the same host

docker container run --name dataservice1 -d -p4001:8080 vividbreeze/docker-tutorial:version1

docker container run --name dataservice2 -d -p4002:8080 vividbreeze/docker-tutorial:version1

If you call these services, you should get a response with an id. This id should be different, depending on the port.

curl 127.0.0.1:4001

<?xml version="1.0" ?><result><name>hello</name><id>2128</id></result>

curl 127.0.0.1:4002

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

Now let us create a custom bridge-network and assign both containers to this network.

docker network create --driver bridge dataservice-net

docker network connect dataservice-net --alias dataservice dataservice1

docker network connect dataservice-net --alias dataservice dataservice2

We used a new parameter called --alias. It registers an additional name for the container with the embedded DNS service: when dataservice is looked up inside dataservice-net, the embedded DNS returns the IP address of either dataservice1 or dataservice2. Let us test this by running a new container inside this network and executing curl dataservice:8080 (inside dataservice-net, both containers listen on port 8080, each on its own IP address).

docker container run --rm -it --network dataservice-net centos curl dataservice:8080

This command starts a new CentOS container attached to dataservice-net, runs curl inside it (-it attaches an interactive terminal) and removes the container afterwards (--rm).

If you repeat this command a couple of times, you will see that either one of the containers returns a result (you can tell by the different ids they return).

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>2128</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>2128</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>2128</id></result>
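Rather than retyping the command, the repetition can be scripted. The following is a sketch only: the function names call_repeatedly and count_ids are made up, curl is run with -s to suppress its progress output, -it is left out because no terminal is needed, and grep -o is assumed to be available on the host.

```shell
# Call a URL several times, each time from a fresh throw-away container.
# $1 = network name, $2 = URL, $3 = number of requests
call_repeatedly() {
  cr_i=0
  while [ "$cr_i" -lt "$3" ]; do
    docker container run --rm --network "$1" centos curl -s "$2"
    echo   # make sure every response ends with a newline
    cr_i=$((cr_i + 1))
  done
}

# Count how often each <id> occurs in the responses read from stdin.
count_ids() {
  grep -o '<id>[0-9]*</id>' | sort | uniq -c
}

# Usage (requires a running Docker daemon and the dataservice containers):
#   call_repeatedly dataservice-net dataservice:8080 10 | count_ids
```

The result is one line per id with the number of responses that carried it, which makes the distribution between the two containers easier to see.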

What is the beauty here? The load (the requests) is balanced between the two containers. If you stop one of the containers, a request to dataservice will still return a result.

docker container stop dataservice1

docker container run --rm -it --network dataservice-net centos curl dataservice:8080

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>

$ docker container run --rm -it --network dataservice-net centos curl dataservice:8080

<?xml version="1.0" ?><result><name>hello</name><id>1976</id></result>
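To see which addresses the embedded DNS currently returns for the alias, you can resolve the name instead of calling the service. This is a minimal sketch, assuming that the centos image ships the glibc getent tool with its ahostsv4 database; the function name alias_addresses is made up for this example.

```shell
# List the distinct IPv4 addresses that a name resolves to inside a network.
# $1 = network name, $2 = name or alias to resolve
alias_addresses() {
  # getent prints several lines per address (one per socket type),
  # so keep only the first column and remove duplicates
  docker container run --rm --network "$1" centos getent ahostsv4 "$2" |
    awk '{ print $1 }' | sort -u
}

# Usage (requires a running Docker daemon and the dataservice-net network):
#   alias_addresses dataservice-net dataservice
```

With both containers running, this is expected to list two addresses; after stopping dataservice1, only the address of dataservice2 should remain.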

We will later discuss how this DNS-based balancing can be used from outside the network.

Further Remarks

The containers in a bridge network can be reached by their IP addresses from the Docker host when you are working on Linux (or from inside the Docker VM if you are working on macOS or Windows), but not by their names. From the host (including macOS and Windows), you access a container through its published ports on the local machine, e.g. curl 127.0.0.1:3001 or curl 192.168.0.6:3001 (where 192.168.0.6 stands for the host's own IP address).

If you do not publish a port when running the container, the container will not be accessible from outside the bridge network (which is fine). This makes sense if, for example, you have a service that connects to a database: the service should be accessible from the host network, while the database should only be accessed by the service.
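Whether a container has any published ports can be checked with docker container port, which lists the port mappings of a container and prints nothing if there are none. The helper below is a sketch; the function name has_published_ports is made up for this example.

```shell
# Succeed if the container has at least one published port.
# $1 = container name
has_published_ports() {
  [ -n "$(docker container port "$1")" ]
}

# Usage (requires a running Docker daemon):
#   if has_published_ports nginx1; then
#     echo "nginx1 is reachable through a port on the host"
#   else
#     echo "nginx1 is only reachable from inside its networks"
#   fi
```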

A container can belong to several networks. You can try this by creating more of our small containers and connecting them to our nginx-net or to newly created networks.
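To check which networks a container currently belongs to, you can query its network settings. This is a minimal sketch, assuming the --format option of docker container inspect with Go templates; the function name container_networks is made up for this example.

```shell
# Print "<network name> <IP address>" for every network a container is
# attached to.
# $1 = container name
container_networks() {
  docker container inspect \
    --format '{{range $name, $net := .NetworkSettings.Networks}}{{println $name $net.IPAddress}}{{end}}' "$1"
}

# Usage (requires a running Docker daemon):
#   container_networks nginx1
```

For nginx1 from the examples above, this should list both the default bridge network and nginx-net, each with the address the container has in that network.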