The aim of this tutorial is to provision an Artifactory stack in Docker on a virtual machine, using Ansible for the provisioning. I separated the different concerns so that they can be tested, changed and run independently. I therefore recommend running the parts one at a time, in the order I explain them here, so that problems are easier to isolate.

Prerequisites

On my machine, I created a directory artifactory-install. I will later push this folder to a git repository. The directory structure inside this folder will look like this:

```
artifactory-install
├── Vagrantfile
├── artifactory
│   ├── docker-compose.yml
│   └── nginx-config
│       ├── reverse_proxy_ssl.conf
│       ├── cert.pem
│       └── key.pem
├── artifactory.yml
├── docker-compose.yml
└── docker.yml
```

Please create the subfolder artifactory (the folder that we will copy to our VM) and, inside it, the subfolder nginx-config (which contains the nginx configuration for the reverse proxy as well as the certificate and key).

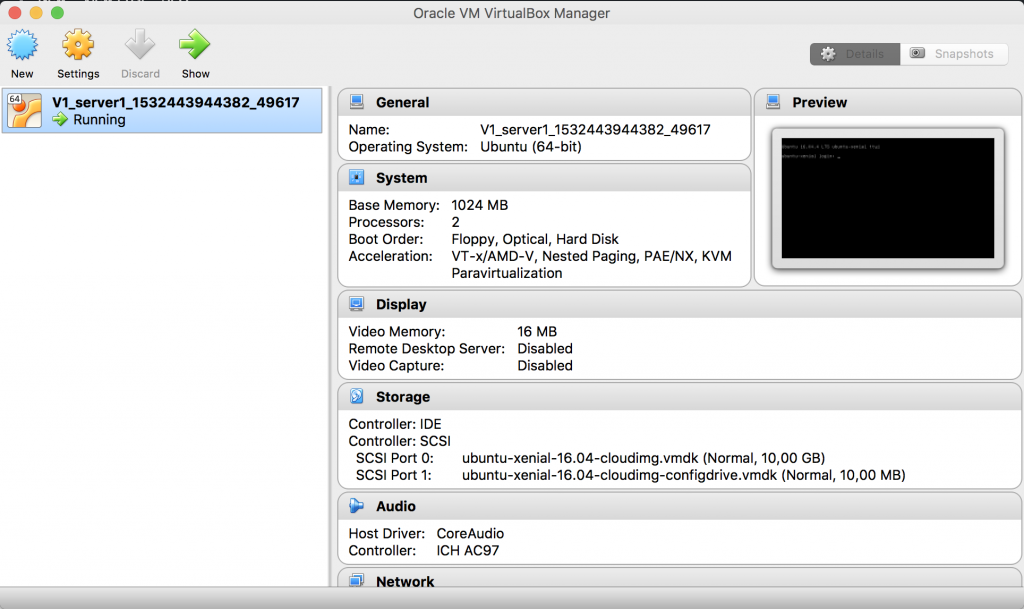
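If you prefer to script this step, here is a minimal Python sketch (standard library only) that creates the skeleton shown above with empty placeholder files. The function name create_skeleton is my own; cert.pem and key.pem are left out because the playbook generates them on the VM anyway.

```python
from __future__ import annotations

from pathlib import Path

# Files of the local project; their contents are filled in later in this tutorial.
SKELETON_FILES = [
    "Vagrantfile",
    "docker.yml",
    "artifactory.yml",
    "docker-compose.yml",
    "artifactory/docker-compose.yml",
    "artifactory/nginx-config/reverse_proxy_ssl.conf",
]


def create_skeleton(root: str) -> list[Path]:
    """Create the artifactory-install layout below `root` with empty files.

    Existing files are left untouched, so the function can be re-run safely.
    Returns the files that were newly created.
    """
    base = Path(root)
    created = []
    for rel in SKELETON_FILES:
        path = base / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        if not path.exists():
            path.touch()
            created.append(path)
    return created


if __name__ == "__main__":
    for p in create_skeleton("artifactory-install"):
        print("created", p)
```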

Installing a Virtual Machine with Vagrant

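I use the following Vagrantfile. The details are explained in Vagrant in a Nutshell. You might want to experiment with the VirtualBox parameters. The bridge interface en0: Wi-Fi (AirPort) is the name of my Mac's Wi-Fi adapter; adjust it to the network interface of your host.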

```ruby
Vagrant.configure("2") do |config|
  config.vm.define "artifactory" do |web|
    # Resources for this machine
    web.vm.provider "virtualbox" do |vb|
      vb.memory = "2048"
      vb.cpus = "1"
    end

    web.vm.box = "ubuntu/xenial64"
    web.vm.hostname = "artifactory"

    # Define public network. If not present, Vagrant will ask.
    web.vm.network "public_network", bridge: "en0: Wi-Fi (AirPort)"

    # Replace authorized_keys with your own public key: log in with plain ssh instead of vagrant ssh
    web.vm.provision "file", source: "~/.ssh/id_rsa.pub", destination: "~/.ssh/authorized_keys"

    # Install Python to be able to provision the machine using Ansible
    web.vm.provision "shell", inline: "which python || sudo apt -y install python"
  end
end
```

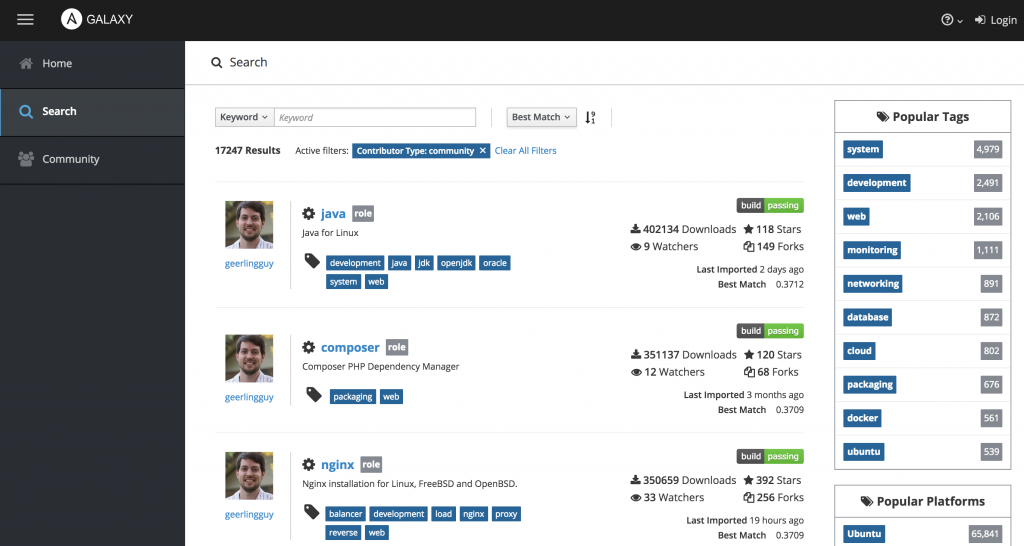

Installing Docker

As Artifactory will run as a Docker container, we have to install the Docker environment first. In my playbook (docker.yml), I use Jeff Geerling's Ansible role to install Docker and docker-compose. The role variables are explained in its README.md. You might have to adapt this YAML file, e.g. to define different users.

```yaml
- name: Install Docker
  hosts: artifactory
  become: yes
  become_method: sudo
  tasks:
    - name: Install Docker and docker-compose
      include_role:
        name: geerlingguy.docker
      vars:
        docker_edition: 'ce'
        docker_package_state: present
        docker_install_compose: true
        docker_compose_version: "1.22.0"
        docker_users:
          - vagrant
```

Before you run this playbook, you have to install the Ansible role:

```shell
ansible-galaxy install geerlingguy.docker
```

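In addition, make sure you have added the IP address of the VM to your Ansible inventory. As both playbooks use hosts: artifactory, the VM has to be in a group (or carry a host name) called artifactory:

```shell
sudo vi /etc/ansible/hosts
```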
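For illustration, this minimal Python sketch renders such an inventory section. The IP address 192.168.0.42 and the login user vagrant are placeholders, so substitute the address your VM received on the bridged network; ansible_user is the standard inventory variable for the SSH login user.

```python
from typing import List


def render_inventory(group: str, hosts: List[str], user: str) -> str:
    """Render an Ansible INI inventory section for one host group."""
    lines = [f"[{group}]"]
    for host in hosts:
        # ansible_user sets the SSH login user for this host
        lines.append(f"{host} ansible_user={user}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder address: replace it with the IP of your VM.
    print(render_inventory("artifactory", ["192.168.0.42"], "vagrant"), end="")
```

You can append the output to /etc/ansible/hosts, or keep it in a separate file and pass that to ansible-playbook with -i.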

Then you can run the Docker playbook with:

```shell
ansible-playbook docker.yml
```

Installing Artifactory

I will show the Artifactory playbook (artifactory.yml) first, and then we will go through the individual steps.

```yaml
- name: Install Artifactory
  hosts: artifactory
  become: yes
  become_method: sudo
  tasks:
    - name: Check if artifactory folder exists
      stat:
        path: artifactory
      register: artifactory_home

    - name: Clean up docker-compose
      command: >
        docker-compose down
      args:
        chdir: ./artifactory/
      when: artifactory_home.stat.exists

    - name: Delete artifactory working-dir
      file:
        state: absent
        path: artifactory
      when: artifactory_home.stat.exists

    - name: Copy artifactory working-dir
      synchronize:
        src: ./artifactory/
        dest: artifactory

    - name: Generate a self-signed OpenSSL certificate
      command: >
        openssl req -subj '/CN=localhost' -x509 -newkey rsa:4096 -nodes
        -keyout key.pem -out cert.pem -days 365
      args:
        chdir: ./artifactory/nginx-config/

    - name: Call docker-compose to run the artifactory stack
      command: >
        docker-compose -f docker-compose.yml up -d
      args:
        chdir: ./artifactory/
```

Clean Up a Previous Artifactory Installation

As we will see later, the magic happens in the ~/artifactory folder on the VM. So first we clean up any previous installation, i.e. stop and remove the running containers. There are different ways to achieve this. I use docker-compose down, which terminates without an error even if no container is running. In addition, I delete the artifactory folder with all its subfolders. Both tasks only run if the stat task found the folder. Be aware that the data of PostgreSQL and Artifactory lives in ~/artifactory/data, so this step also wipes all repositories, users and settings.
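To make the order of the clean-up explicit, here is a Python sketch of the same logic outside Ansible: run docker-compose down only if the folder exists, then delete it. It is an illustration, not what the playbook executes; the runner parameter is a hypothetical hook so that the logic can be tested without Docker.

```python
import shutil
import subprocess
from pathlib import Path
from typing import Callable, List


def run(cmd: List[str], cwd: Path) -> None:
    # Raise CalledProcessError if the command fails, like a failing Ansible task.
    subprocess.run(cmd, cwd=cwd, check=True)


def clean_up(workdir: str, runner: Callable[[List[str], Path], None] = run) -> bool:
    """Stop the stack and remove `workdir`; return False if nothing was there."""
    path = Path(workdir).expanduser()
    if not path.is_dir():                       # the stat task plus `when:`
        return False
    runner(["docker-compose", "down"], path)    # stop and remove the containers
    shutil.rmtree(path)                         # also removes data/, i.e. all Artifactory data
    return True


if __name__ == "__main__":
    print("cleaned up:", clean_up("~/artifactory"))
```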

Copy the nginx Configuration and docker-compose.yml

The artifactory folder contains the docker-compose.yml that defines the Artifactory stack and the nginx configuration (both described below). They are copied into a directory with the same name on the remote host.

I use the synchronize module to copy the files because, since Python 3.6, there seems to be a problem that prevents the copy module from copying a directory recursively. Unfortunately, synchronize asks for your SSH password again. There are workarounds that make sense but don’t look elegant to me, so I avoid them ;).

Set Up the nginx Configuration

I use nginx as a reverse proxy that also provides a secure (SSL/TLS) connection. The configuration file is static and located in the nginx-config subfolder (reverse_proxy_ssl.conf):

```nginx
server {
    listen 443 ssl;
    listen 8080;

    ssl_certificate     /etc/nginx/conf.d/cert.pem;
    ssl_certificate_key /etc/nginx/conf.d/key.pem;

    location / {
        proxy_pass http://artifactory:8081;
    }
}
```

The directives are described in the nginx documentation. You might have to adapt this file to your needs.

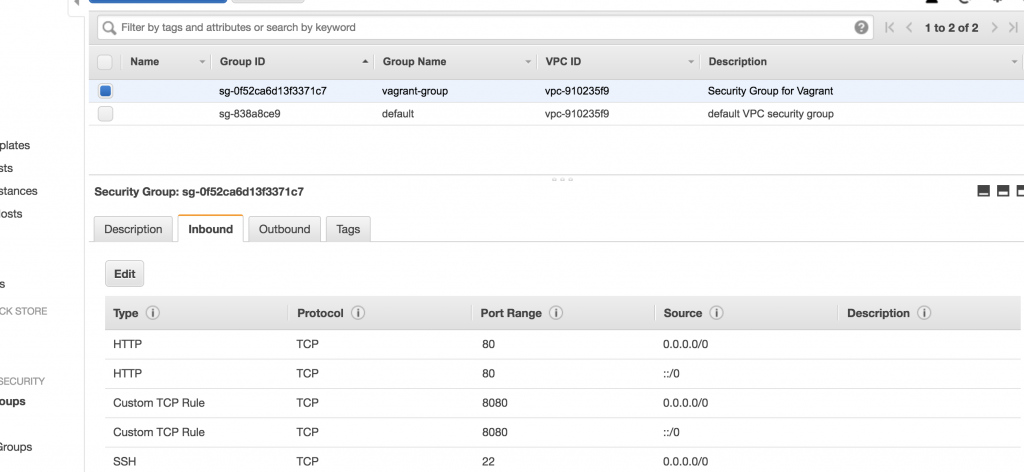

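The proxy_pass directive points to the service name artifactory (as defined in the docker-compose.yml), which Docker's internal DNS resolves inside the stack's network: a bridge network when started with docker-compose, an overlay network when deployed with docker stack. Port 443 serves SSL connections and port 8080 non-SSL connections.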

Create a Self-Signed Certificate

The playbook creates a self-signed certificate on the remote host inside the nginx-config folder:

```shell
openssl req -subj '/CN=localhost' -x509 -newkey rsa:4096 -nodes -keyout key.pem -out cert.pem -days 365
```

The certificate and key are referenced in reverse_proxy_ssl.conf, as shown above. Because the certificate is self-signed and issued for CN=localhost, your browser will most likely warn you or refuse it; you can add an exception or import cert.pem as a trusted certificate.
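If you want to check the reverse proxy from a script instead of a browser, you can trust cert.pem explicitly. Below is a minimal sketch with Python's ssl and urllib modules, assuming you copied cert.pem from the VM to your machine. Hostname checking is switched off because the certificate is issued for localhost rather than the VM's name, so use this for testing only.

```python
import ssl
import urllib.request
from typing import Optional


def make_context(cafile: Optional[str]) -> ssl.SSLContext:
    """TLS context that trusts the given self-signed certificate."""
    ctx = ssl.create_default_context(cafile=cafile)
    # CN=localhost will not match the VM's address, so skip the name check.
    # The certificate itself is still verified against `cafile`.
    ctx.check_hostname = False
    return ctx


def probe(url: str, ctx: ssl.SSLContext, timeout: float = 5.0) -> int:
    """Return the HTTP status code nginx answers with."""
    with urllib.request.urlopen(url, context=ctx, timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    # Placeholder address: replace it with the IP of your VM.
    print(probe("https://192.168.0.42/", make_context("cert.pem")))
```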

Run Artifactory

We run Artifactory with nginx as a reverse proxy and PostgreSQL as its datastore. The docker-compose.yml in the artifactory folder looks like this:

```yaml
version: "3"

services:
  postgresql:
    image: postgres:latest
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    environment:
      - POSTGRES_DB=artifactory
      - POSTGRES_USER=artifactory
      - POSTGRES_PASSWORD=artifactory
    volumes:
      - ./data/postgresql:/var/lib/postgresql/data

  artifactory:
    image: docker.bintray.io/jfrog/artifactory-oss:latest
    user: "${UID}:${GID}"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    environment:
      - DB_TYPE=postgresql
      - DB_USER=artifactory
      - DB_PASSWORD=artifactory
    volumes:
      - ./data/artifactory:/var/opt/jfrog/artifactory
    depends_on:
      - postgresql

  nginx:
    image: nginx:latest
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - ./nginx-config:/etc/nginx/conf.d
    ports:
      - "443:443"
      - "8080:8080"
    depends_on:
      - artifactory
      - postgresql
```

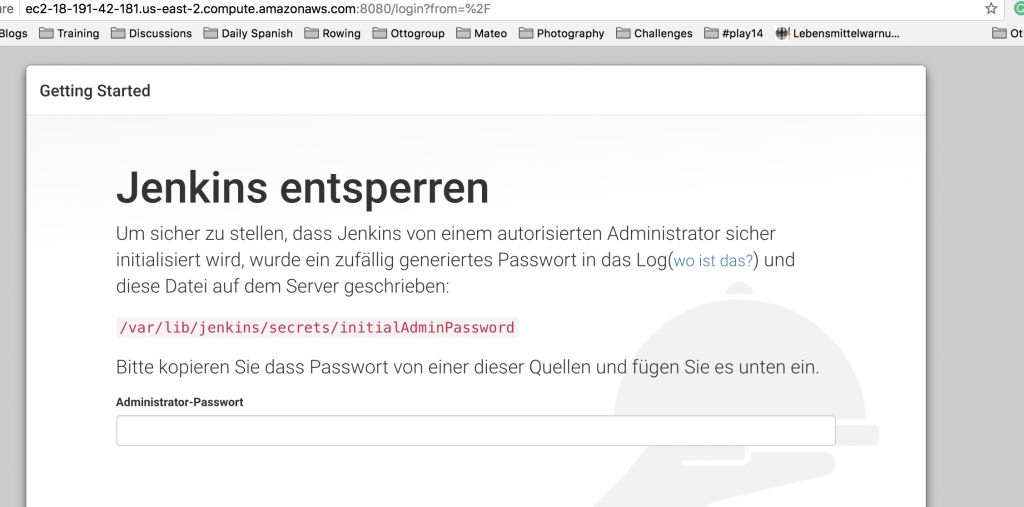

Artifactory

I use the image artifactory-oss:latest from JFrog (as found on JFrog Bintray). On GitHub, you can find some examples of how to use the Artifactory Docker image.

I am not entirely satisfied: out of the box, I receive a "Mounted directory must be writable by user 'artifactory' (id 1030)" error when I bind /var/opt/jfrog/artifactory inside the container to the folder ./data/artifactory on the VM. The Dockerfile for this image runs several steps as a user "artifactory". I don’t have such a user on my VM (and don’t want to create one). A workaround seems to be to set the user ID and group ID in the docker-compose.yml (user: "${UID}:${GID}"), as described here.
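docker-compose substitutes ${UID} and ${GID} from its environment or from a .env file in the directory it is run from (here ~/artifactory, the same folder as docker-compose.yml). Note that bash defines UID as a shell variable without exporting it and does not define GID at all, so when Ansible starts docker-compose these values may well be empty. A small Python sketch that writes such a .env file; which IDs to use is an assumption you should adapt, e.g. to the output of id -u and id -g on the VM.

```python
import os
from pathlib import Path
from typing import Optional


def write_compose_env(project_dir: str, uid: Optional[int] = None,
                      gid: Optional[int] = None) -> Path:
    """Write UID/GID for docker-compose variable substitution into .env.

    Defaults to the IDs of the user running this script (Unix only).
    """
    uid = os.getuid() if uid is None else uid
    gid = os.getgid() if gid is None else gid
    env_file = Path(project_dir) / ".env"
    env_file.write_text(f"UID={uid}\nGID={gid}\n")
    return env_file


if __name__ == "__main__":
    # Placeholder IDs: use the values of `id -u` and `id -g` on the VM.
    # The playbook then copies the local artifactory folder, .env included.
    print(write_compose_env("artifactory", uid=1000, gid=1000).read_text(), end="")
```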

Alternatively, you can use the Artifactory Docker image from Matt Grüter provided on Docker Hub. However, it doesn’t work with PostgreSQL out of the box, so you have to use Artifactory’s embedded database (Derby). In addition, Matt’s latest image is built on version 3.9.2 (the current version is 6.2.0 as of 18/08/2018). Hence, you would have to build a new image and push it to your own registry. If we use docker-compose to deploy our services, we could add a build section to the docker-compose.yml, but docker stack ignores the build section.

I do not publish Artifactory’s port (8081 by default), as I want users to access Artifactory only through the reverse proxy.

PostgreSQL

I use the official PostgreSQL Docker image from Docker Hub. The data directory inside the container (/var/lib/postgresql/data) is bound to ~/artifactory/data/postgresql on the VM. The credentials (POSTGRES_USER, POSTGRES_PASSWORD) have to match those of the artifactory service (DB_USER, DB_PASSWORD). I don’t publish a port, as I don’t want the database to be reachable from outside the Docker network.
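Since a mismatch here only shows up at runtime, it can help to check it before deploying. The sketch below compares the two environment lists in the KEY=VALUE form used in the docker-compose.yml above. The lists are copied in by hand (reading the YAML file directly would need a third-party library such as PyYAML), and the pairing of variables is my reading of the file.

```python
from typing import Dict, List

# Pairs of (postgresql variable, artifactory variable) that must agree.
PAIRS = [("POSTGRES_USER", "DB_USER"), ("POSTGRES_PASSWORD", "DB_PASSWORD")]


def to_dict(env: List[str]) -> Dict[str, str]:
    """Turn ['KEY=VALUE', ...] into {'KEY': 'VALUE', ...}."""
    return dict(item.split("=", 1) for item in env)


def mismatches(postgres_env: List[str], artifactory_env: List[str]) -> List[str]:
    """List every credential pair whose values differ between the two services."""
    pg, art = to_dict(postgres_env), to_dict(artifactory_env)
    return [f"{p} != {a}" for p, a in PAIRS if pg.get(p) != art.get(a)]


postgres_env = ["POSTGRES_DB=artifactory", "POSTGRES_USER=artifactory",
                "POSTGRES_PASSWORD=artifactory"]
artifactory_env = ["DB_TYPE=postgresql", "DB_USER=artifactory",
                   "DB_PASSWORD=artifactory"]
print(mismatches(postgres_env, artifactory_env))  # → []
```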

A separate database pays off under intensive usage or high load, where the embedded database (Derby) might slow things down.

Nginx

I use nginx as described above. The folder ~/artifactory/nginx-config, which contains reverse_proxy_ssl.conf together with the certificate and key, is bound to /etc/nginx/conf.d inside the container. I publish ports 443 (SSL) and 8080 (non-SSL) to the world outside the Docker network.

Summary

To get the whole thing started, you have to:

- Create a VM (or have some physical or virtual machine where you want to install Artifactory) with Python (as needed by Ansible).
- Register the VM in the Ansible inventory (/etc/ansible/hosts).
- Start the Ansible playbook docker.yml to install Docker on the VM (as a prerequisite to run Artifactory).
- Start the Ansible playbook artifactory.yml to install Artifactory (plus PostgreSQL and a reverse proxy).

I recommend adapting the different parts to your needs; I am sure there is a lot that could be improved. Of course, you can also include the Ansible playbooks (docker.yml and artifactory.yml) directly in the provisioning section of your Vagrantfile. In that case, you only have to run vagrant up.

Integrating Artifactory with Maven

This article describes how to configure Maven with Artifactory. In my case, the settings.xml that Artifactory generated for ~/.m2/ didn’t include the encrypted password; you can retrieve it as described here. Make sure you update the Custom Base URL in the General Settings first, as it is used to generate the settings.xml.
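If you have to add the credentials by hand, the relevant part of settings.xml is a server entry whose id matches the id of the repository you deploy to (in my error messages below, central and snapshots). A Python sketch using xml.etree to generate such a minimal file; the user name and the encrypted password are placeholders for the values from your Artifactory profile.

```python
import xml.etree.ElementTree as ET
from typing import Iterable


def settings_xml(server_ids: Iterable[str], username: str, password: str) -> str:
    """Build a minimal Maven settings.xml with one <server> per repository id."""
    settings = ET.Element("settings")
    servers = ET.SubElement(settings, "servers")
    for server_id in server_ids:
        server = ET.SubElement(servers, "server")
        # The id must match the id of the repository used for deployment.
        ET.SubElement(server, "id").text = server_id
        ET.SubElement(server, "username").text = username
        ET.SubElement(server, "password").text = password
    return ET.tostring(settings, encoding="unicode")


if __name__ == "__main__":
    # Placeholder credentials: use your user and the encrypted password.
    print(settings_xml(["central", "snapshots"], "YOUR_USER", "ENCRYPTED_PASSWORD"))
```

If you already have a ~/.m2/settings.xml, merge the servers section into it rather than overwriting the file.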

Possible Error: Broken Pipe

I ran into an authentication problem when I first tried to deploy a snapshot archive from my project to Artifactory. It appeared when I ran mvn deploy (use the -X parameter for more verbose output):

```
Caused by: org.eclipse.aether.transfer.ArtifactTransferException: Could not transfer artifact com.vividbreeze.springboot.rest:demo:jar:0.0.1-20180818.082818-1 from/to central (http://artifactory.intern.vividbreeze.com:8080/artifactory/ext-release-local): Broken pipe (Write failed)
```

A broken pipe can mean almost anything, and googling it turns up a lot. A closer look at access.log on the VM running Artifactory revealed this entry:

```
2018-08-18 08:28:19,165 [DENIED LOGIN] for chris/192.168.0.5.
```

The reason was that I had put a wrong encrypted password (see above) in ~/.m2/settings.xml. Be aware that the encrypted password changes every time you deploy a new instance of Artifactory.
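Rather than scrolling through access.log, you can filter it for rejected logins. A minimal sketch, assuming the line format shown above; the regular expression is my own and may need adjusting for other Artifactory versions, and the log path in the usage comment is an assumption based on the volume mapping in the docker-compose.yml.

```python
import re
from typing import Iterable, List, Tuple

# Matches e.g. "2018-08-18 08:28:19,165 [DENIED LOGIN] for chris/192.168.0.5."
DENIED = re.compile(
    r"^(?P<time>\S+ \S+) \[DENIED LOGIN\] for (?P<user>[^/\s]+)/(?P<ip>[0-9.]*[0-9])"
)


def denied_logins(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (timestamp, user, ip) for every denied login in the log lines."""
    hits = []
    for line in lines:
        m = DENIED.match(line)
        if m:
            hits.append((m["time"], m["user"], m["ip"]))
    return hits


if __name__ == "__main__":
    import sys
    # Assumed location: python denied.py ~/artifactory/data/artifactory/logs/access.log
    with open(sys.argv[1], encoding="utf-8") as log:
        for hit in denied_logins(log):
            print(*hit)
```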

Possible Error: Request Entity Too Large

Another error occurred when I deployed a very large jar (this can happen with Spring Boot apps that carry a lot of luggage): Return code is: 413, ReasonPhrase: Request Entity Too Large.

```
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-deploy-plugin:2.8.2:deploy (default-deploy) on project demo: Failed to deploy artifacts: Could not transfer artifact com.vividbreeze.springboot.rest:demo:jar:0.0.1-20180819.092431-3 from/to snapshots (http://artifactory.intern.vividbreeze.com:8080/artifactory/libs-snapshot): Failed to transfer file: http://artifactory.intern.vividbreeze.com:8080/artifactory/libs-snapshot/com/vividbreeze/springboot/rest/demo/0.0.1-SNAPSHOT/demo-0.0.1-20180819.092431-3.jar. Return code is: 413, ReasonPhrase: Request Entity Too Large. -> [Help 1]
```

I wasn’t able to find anything in the Artifactory logs or in the stdout/stderr of the nginx container. However, I suspected a limit on the maximum request body size (nginx’s client_max_body_size defaults to 1 MB). As the jar was over 20 MB, I added the following line to ~/artifactory/nginx-config/reverse_proxy_ssl.conf:

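```nginx
server {
    ...
    client_max_body_size 30M;
    ...
}
```

For the change to take effect, restart the nginx container (docker-compose restart nginx in ~/artifactory). Make the same change in your local artifactory/nginx-config folder as well, because the next run of artifactory.yml deletes the remote folder and copies the local one again.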
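nginx answers with 413 when a request body is larger than client_max_body_size (0 disables the check). A small Python sketch to check an artifact against a configured limit before deploying; it handles plain byte values and the k and m suffixes (case-insensitive, multiples of 1024) that nginx uses for sizes. The request body of a deploy is roughly the size of the file itself.

```python
import os

UNITS = {"k": 1024, "m": 1024 ** 2}


def parse_nginx_size(value: str) -> int:
    """Convert an nginx size such as '30M', '512k' or '1048576' to bytes."""
    value = value.strip()
    suffix = value[-1:].lower()
    if suffix in UNITS:
        return int(value[:-1]) * UNITS[suffix]
    return int(value)


def fits(artifact_bytes: int, limit: str = "1m") -> bool:
    """True if a body of this size passes client_max_body_size (default 1m)."""
    max_bytes = parse_nginx_size(limit)
    return max_bytes == 0 or artifact_bytes <= max_bytes


if __name__ == "__main__":
    import sys
    size = os.path.getsize(sys.argv[1])  # e.g. the jar built by mvn package
    print(size, "bytes, fits into 30M:", fits(size, "30M"))
```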

Further Remarks

So basically you have to run three things to get Artifactory running on a VM: the Vagrantfile (vagrant up) and the two playbooks. Of course, you can add the two playbooks to the provisioning section of the Vagrantfile, but for easier debugging (something will probably go wrong), I recommend running them separately.
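If you still want a single entry point while keeping the steps separate, a small driver script can run them in order and stop at the first failure. A Python sketch, assuming it is started from the artifactory-install folder and that vagrant and ansible-playbook are on your PATH; the runner parameter is a hypothetical hook for testing.

```python
import subprocess
import sys
from typing import List, Sequence

STEPS: List[List[str]] = [
    ["vagrant", "up"],
    ["ansible-playbook", "docker.yml"],
    ["ansible-playbook", "artifactory.yml"],
]


def run_steps(steps: Sequence[Sequence[str]], runner=subprocess.run) -> int:
    """Run each command in turn; return the exit code of the first failing one."""
    for cmd in steps:
        print("==>", " ".join(cmd), flush=True)
        result = runner(list(cmd))
        if result.returncode != 0:
            print("step failed, stopping:", " ".join(cmd), file=sys.stderr)
            return result.returncode
    return 0


if __name__ == "__main__":
    sys.exit(run_steps(STEPS))
```

Because each step still runs as its own command, you can rerun a single playbook by hand once the failing step is fixed.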

The setup here is meant for a local or small-team installation of Artifactory, as Vagrant and docker-compose are primarily development tools. However, I added a deploy section to the docker-compose.yml, so you can set up a swarm and deploy the same file with docker stack (keep in mind that docker stack ignores depends_on). Instead of Vagrant, you can use Terraform, Apache Mesos or other tools to build your production infrastructure.

To further pre-configure Artifactory, you can use the Artifactory REST API or provide custom configuration files in artifactory/data/artifactory/etc/.
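A convenient first REST call is the system ping endpoint (GET /api/system/ping), which answers OK when Artifactory is up. A Python sketch using urllib through the non-SSL proxy port, with optional basic authentication for endpoints that need it; the host address is a placeholder, and the helper api_request is my own.

```python
import base64
import urllib.request
from typing import Optional


def api_request(base_url: str, path: str, user: Optional[str] = None,
                password: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request for an Artifactory REST endpoint below base_url."""
    url = base_url.rstrip("/") + "/api/" + path.lstrip("/")
    req = urllib.request.Request(url, method="GET")
    if user is not None:
        token = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")
        req.add_header("Authorization", "Basic " + token)
    return req


def ping(base_url: str, timeout: float = 5.0) -> str:
    """Return the body of /api/system/ping ('OK' when Artifactory is healthy)."""
    with urllib.request.urlopen(api_request(base_url, "system/ping"), timeout=timeout) as resp:
        return resp.read().decode().strip()


if __name__ == "__main__":
    # Placeholder address: the VM's IP or host name, via nginx on port 8080.
    print(ping("http://192.168.0.42:8080/artifactory"))
```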